Robots should be able to grasp and manipulate a variety of objects without dropping or damaging them in order to successfully collaborate with humans on manual tasks. As a result, recent robotics research efforts have concentrated on creating tactile sensors and controllers that could give robots a sense of touch and improve their object manipulation abilities.

Scientists from Pisa University, IIT, and the Dexterous Robotics group at the Bristol Robotics Laboratory (BRL) have recently developed a tactile-driven system that improves the way robots grasp a variety of objects. This system, described in a paper pre-published on arXiv, combines a force-sensitive touch control scheme with a robotic hand that has optical tactile sensors on each of its fingertips.

According to Chris Ford, one of the researchers who developed the tactile system, “the inspiration for this work comes from the collaboration between the Dexterous Robotics group at the BRL and researchers at Pisa University and IIT. Pisa/IIT has developed a novel robot hand (the SoftHand) that is modeled after the human hand. Due to the complementary nature of the two biomimetic technologies, the Pisa/IIT SoftHand and the BRL TacTip tactile sensor, we sought to combine them.”

The SoftHand is a robotic hand that, in both appearance and operation, resembles human hands. This hand, which was first designed as a prosthetic device, can grasp objects with the same postural harmony as human hands.

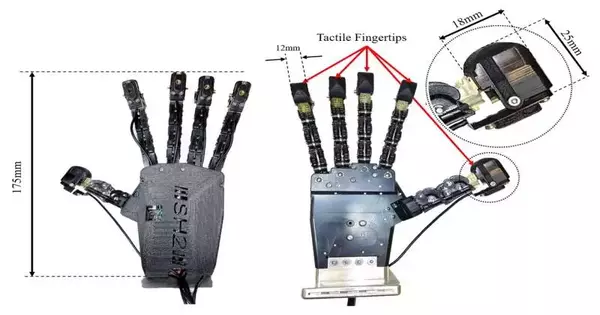

For their study, Ford and his colleagues equipped a SoftHand with one optical tactile sensor on each fingertip. They used the TacTip, a sensor that gathers data from a 3D-printed tactile skin whose internal structure is similar to that of human skin.

The combination of these characteristics is, according to Ford, essential for robots to achieve humanlike dexterity and manipulation abilities. “The fingertip sensor was integrated onto one digit of the Pisa/IIT SoftHand and benchmarked in a paper that was published in 2021, which was where we first looked into this possibility,” he said. “The logical next step in this research was to incorporate sensors into every digit and mount the hand on a robotic arm to perform some grasping and manipulation tasks using tactile feedback from the fingertips as a sensory input.”

In their most recent work, Ford and his colleagues aimed to further explore the potential of the SoftHand-based system they had previously developed by adding more TacTip sensors to it. By integrating this updated version of their system with a cutting-edge control framework, they aimed to replicate human-like, force-sensitive, and gentle grasping.

The new controller that they have developed measures how each SoftHand fingertip’s soft tactile skin deforms. The controller uses this deformation as a feedback signal to modify the amount of force being used to grasp the object.
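
The feedback loop described above can be illustrated with a minimal sketch. It is not the authors' controller: the proportional update rule, the averaging of the five fingertip readings into one signal, the toy skin model, and every name and number below (`grasp_step`, `simulated_deformations`, the gain, the contact points) are assumptions made purely for illustration.

```python
def grasp_step(deformations, target, motor_cmd, gain=0.5):
    """One proportional update of a single hand-closure command.

    `deformations` holds one skin-deformation reading per fingertip.
    Averaging them into one feedback signal is an assumption of this sketch.
    """
    signal = sum(deformations) / len(deformations)
    error = target - signal
    new_cmd = motor_cmd + gain * error
    # Keep the command inside a normalised range [0, 1] (hypothetical units).
    return max(0.0, min(1.0, new_cmd))


def simulated_deformations(motor_cmd, contacts=(0.30, 0.32, 0.34, 0.36, 0.38),
                           stiffness=2.0):
    """Toy skin model: each fingertip reads zero until it touches the object,
    then deforms linearly as the hand closes further."""
    return [max(0.0, stiffness * (motor_cmd - c)) for c in contacts]


def run_grasp(target=0.2, steps=50):
    """Close the hand until the mean deformation settles at the target."""
    cmd = 0.0
    for _ in range(steps):
        cmd = grasp_step(simulated_deformations(cmd), target, cmd)
    final = simulated_deformations(cmd)
    return cmd, sum(final) / len(final)


final_cmd, final_signal = run_grasp()
print(f"closure command: {final_cmd:.3f}, mean deformation: {final_signal:.3f}")
```

In this toy setup the hand keeps closing while the fingertips read little or no deformation, and stops tightening once the mean deformation reaches the target, which is the sense in which deformation stands in for grasp force.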

“This is unique compared to more conventional grasp control methods such as controlling motor current, which can be inaccurate when applied to grippers with a ‘soft’ structure, such as the SoftHand,” Ford said. “The controller’s use of feedback from five high-resolution optical tactile sensors is another distinctive feature. Optical tactile sensors use a camera to monitor changes in the tactile skin. The advantage of optical tactile sensors is the large amount of tactile information they capture due to their higher resolution, as every pixel of the image is a node containing tactile information. This equates to more than 2 million tactile nodes for a 1080p tactile image.”
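
The "more than 2 million" figure follows from simple arithmetic, sketched below. The only assumption is that a 1080p image means the standard 1920 × 1080 pixels; the helper name `tactile_nodes` is hypothetical, and the five-sensor total simply multiplies by the number of fingertip sensors mentioned in the article.

```python
def tactile_nodes(width, height, sensors=1):
    """Count pixels, treating each pixel as one tactile node."""
    return width * height * sensors


per_sensor = tactile_nodes(1920, 1080)             # one 1080p tactile image
all_fingers = tactile_nodes(1920, 1080, sensors=5)  # five fingertip sensors

print(f"{per_sensor:,} nodes per sensor")      # 2,073,600
print(f"{all_fingers:,} nodes for five sensors")  # 10,368,000
```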

Using multiple optical sensors at once would typically require significant computational power, as a single computer must simultaneously capture high-resolution images from several cameras in order to collect tactile information at a reasonable speed. To lessen the computational load associated with their system, Ford and his colleagues created a parallel-processing hardware “brain” that can gather images from multiple sensors at once. Their grasp controller’s reaction times were greatly accelerated as a result, giving it human-like performance.
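
The team's solution is hardware-based and its design is not detailed here, so the following is only a software analogy of the underlying idea: reading several sensors concurrently instead of one after another. The cameras are simulated with a short delay and a dummy frame, and `capture_frame` and `capture_all` are hypothetical names, not part of any real camera API.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def capture_frame(sensor_id, width=64, height=48, latency_s=0.01):
    """Stand-in for one camera read: wait briefly, then return a dummy frame."""
    time.sleep(latency_s)
    return {"sensor": sensor_id, "pixels": bytes(width * height)}


def capture_all(sensor_ids):
    """Read every sensor concurrently; results come back in sensor order."""
    sensor_ids = list(sensor_ids)
    with ThreadPoolExecutor(max_workers=len(sensor_ids)) as pool:
        return list(pool.map(capture_frame, sensor_ids))


frames = capture_all(range(5))  # five fingertip sensors, as in the article
print([frame["sensor"] for frame in frames])
```

Because the simulated reads overlap in time, the total wait is close to that of a single read rather than five in sequence, which is the benefit the article attributes to capturing from multiple sensors at once.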

“The results of this work show that we can take complex tactile information with a sophistication close to human touch from multiple fingertips and consolidate it into a simple feedback signal that can be used to successfully apply stable, gentle grasps to a wide range of objects regardless of geometry and stiffness without the need for complex tuning,” Ford said. “The creation of a hardware ‘brain’ that can simultaneously collect and process tactile data from several high-resolution sensors is another accomplishment.”

The SoftHand-based robotic system developed by the researchers now has significantly better tactile and sensing abilities thanks to the integration of multiple sensors. The team also enhanced its capability to grasp various object types in suitable ways and without unfavorable delays related to the processing of sensor data by combining it with their parallel-processing hardware and sophisticated controller.

“We want to capture as much tactile information as possible, so data must be captured at as high a resolution as possible,” Ford said. “However, this quickly becomes process intensive, particularly when you start introducing multiple sensors into the system. When using optical tactile sensors on multi-fingered hands, having a scalable, hardware-based solution that enables us to navigate this issue is very beneficial.”

Future humanoid robots could incorporate the new tactile-driven robotic system developed by this research team, enabling them to handle delicate or deformable objects while working alongside humans on various tasks. Ford and his coworkers have so far mainly tested their system on tasks requiring the delicate grasping of objects, but it may soon be used in other grasping and manipulation scenarios.

“The resolution of the tactile data we can capture from these sensors is approaching human tactile resolution, so we believe that there is a lot more information we can extract from the tactile images, which will allow for more complex manipulation tasks,” Ford continued.

“As a result, we are currently working on some more advanced techniques to determine the overall force of the grasp and to accurately understand the type of contact at each fingertip. Our goal is to develop robots with dexterous abilities on par with those of humans by utilizing the full potential of these sensors in combination with anthropomorphic hands.”

More information: Christopher J. Ford et al., Tactile-Driven Gentle Grasping for Human-Robot Collaborative Tasks, arXiv (2023). DOI: 10.48550/arxiv.2303.09346