Self-driving cars, like the human drivers that came before them, need to see what’s around them to avoid obstacles and drive safely.

The most sophisticated autonomous vehicles typically use lidar, a spinning radar-type device that acts as the eyes of the car. Lidar provides constant information about the distance to objects so the car can decide what actions are safe to take.

But these eyes, it turns out, can be tricked.

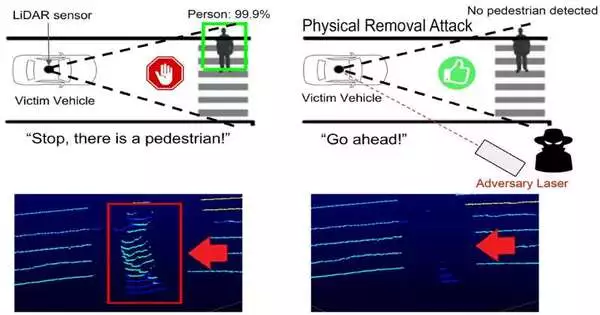

New research reveals that expertly timed lasers shined at an approaching lidar system can create a blind spot in front of the vehicle large enough to completely hide moving pedestrians and other obstacles. The deleted data causes the car to think the road is safe to keep driving along, endangering whatever may be in the attack’s blind spot.

The attack deletes data in a cone in front of the vehicle, making a moving pedestrian invisible to the lidar system within that range. Credit: Sara Rampazzi/University of Florida.

This is the first time that lidar sensors have been tricked into deleting data about obstacles.

The vulnerability was uncovered by researchers from the University of Florida, the University of Michigan and the University of Electro-Communications in Japan. The scientists also propose upgrades that could eliminate this weakness and protect people from malicious attacks.

The findings will be presented at the 2023 USENIX Security Symposium and are currently published on arXiv.

Real-world tests show the effect of the attack on a pedestrian moving in front of a lidar-equipped vehicle. Credit: Sara Rampazzi/University of Florida.

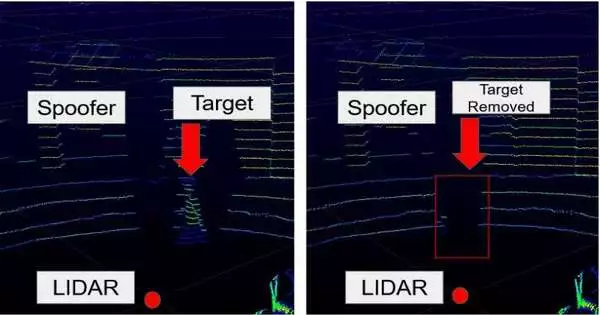

Lidar works by emitting laser light and capturing the reflections to calculate distances, much like how a bat’s echolocation uses sound echoes. The attack creates fake reflections to scramble the sensor.
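The distance calculation behind this can be sketched in a few lines. This is a minimal illustration of the general time-of-flight principle, not the firmware of any particular sensor; the function names are hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to the reflecting surface, given the pulse's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so the distance is half the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Inverse calculation: how long an echo from this distance takes to return."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# An echo that arrives 100 nanoseconds after the pulse was fired.
print(f"{distance_from_round_trip(100e-9):.2f} m")  # 14.99 m
```

Because the echo from an object a few metres away returns within tens of nanoseconds, a fake reflection only registers if it is timed very precisely against the sensor's own pulses.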

“We mimic the lidar reflections with our laser to make the sensor discount other reflections that are coming in from genuine obstacles,” said Sara Rampazzi, a UF professor of computer and information science and engineering who led the study. “The lidar is still receiving genuine data from the obstacle, but the data is automatically discarded because our fake reflections are the only ones perceived by the sensor.”
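The quote describes the effect rather than the sensor's internals. As a purely illustrative toy model, assume a sensor that keeps only the strongest echo per laser firing and then drops any point closer than a minimum range. Both rules, the `Echo` type, and the `MIN_RANGE_M` value are assumptions made for this sketch, not details taken from the article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Echo:
    distance_m: float  # range implied by the echo's arrival time
    intensity: float   # received signal strength, arbitrary units
    genuine: bool      # True if reflected by a real object (label for illustration)


# Hypothetical near-range cutoff; not the specification of any real sensor.
MIN_RANGE_M = 0.5


def recorded_point(echoes: list[Echo]) -> Optional[Echo]:
    """Toy single-return sensor: keep the strongest echo, then apply the cutoff."""
    if not echoes:
        return None
    strongest = max(echoes, key=lambda e: e.intensity)
    if strongest.distance_m < MIN_RANGE_M:
        return None  # treated as noise and dropped from the point cloud
    return strongest


pedestrian = Echo(distance_m=10.0, intensity=0.4, genuine=True)
spoofed = Echo(distance_m=0.2, intensity=0.9, genuine=False)

print(recorded_point([pedestrian]))           # the pedestrian is recorded
print(recorded_point([pedestrian, spoofed]))  # None: the pedestrian vanishes
```

In this toy model the genuine echo still reaches the sensor, but the stronger fake echo wins the selection and is then filtered out, so nothing is recorded for that firing.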

The scientists demonstrated the attack on moving vehicles and robots with the attacker placed about 15 feet away, on the side of the road. In theory, though, it could be carried out from farther away with upgraded equipment. The technology required is all fairly basic, but the laser must be precisely timed to the lidar sensor, and moving vehicles must be carefully tracked to keep the laser pointing in the right direction.

An animated GIF showing how the attack uses a laser to inject spoofed data points into the lidar sensor, which causes it to discard genuine data about an obstacle in front of the sensor. Credit: Sara Rampazzi/University of Florida.

“It’s primarily a matter of synchronization of the laser with the lidar device. The information you need is usually publicly available from the manufacturer,” said S. Hrushikesh Bhupathiraj, a UF doctoral student in Rampazzi’s lab and one of the lead authors of the study.

Using this technique, the scientists were able to delete data for static obstacles and moving pedestrians. They also demonstrated with real-world experiments that the attack could follow a slow-moving vehicle using basic camera tracking equipment. In simulations of autonomous vehicle decision making, this deletion of data caused a car to keep advancing toward a pedestrian it could no longer see, instead of stopping as it should.

Updates to the lidar sensors or the software that interprets the raw data could address this vulnerability. For example, manufacturers could teach the software to look for the telltale signatures of the spoofed reflections added by the laser attack.
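The article does not say what those signatures are. As one hypothetical example of such a check, suppose spoofed echoes show up as an unusually long run of adjacent beams that all report implausibly short ranges. The function name, the thresholds, and the signature itself are assumptions for illustration, not the defense proposed in the paper.

```python
def suspicious_runs(
    raw_ranges_m: list[float],
    near_limit_m: float = 0.5,
    min_run: int = 3,
) -> list[tuple[int, int]]:
    """Return (start_index, length) for each run of at least `min_run`
    consecutive beams whose raw range is below `near_limit_m`."""
    runs = []
    run_start = None
    for i, range_m in enumerate(raw_ranges_m):
        if range_m < near_limit_m:
            if run_start is None:
                run_start = i  # a new run of near-range readings begins
        else:
            if run_start is not None and i - run_start >= min_run:
                runs.append((run_start, i - run_start))
            run_start = None
    # Close a run that extends to the end of the scan.
    if run_start is not None and len(raw_ranges_m) - run_start >= min_run:
        runs.append((run_start, len(raw_ranges_m) - run_start))
    return runs


# One sweep of raw ranges in metres, before any near-range filtering.
scan = [12.0, 11.8, 0.2, 11.5, 0.2, 0.3, 0.2, 0.2, 11.9, 12.1]
print(suspicious_runs(scan))  # [(4, 4)]
```

A single stray near-range reading (index 2 here) is ignored, while the block of four adjacent ones is flagged. A real detector would need to be tuned against ordinary noise and genuine nearby objects.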

“Uncovering this risk allows us to build a more reliable system,” said Yulong Cao, a Michigan doctoral student and first author of the study. “In our paper, we show that previous defense strategies aren’t enough, and we propose modifications that should address this weakness.”

More information: Yulong Cao et al, You Can’t See Me: Physical Removal Attacks on LiDAR-based Autonomous Vehicles Driving Frameworks, arXiv (2022). DOI: 10.48550/arxiv.2210.09482. arxiv.org/abs/2210.09482

Journal information: arXiv