Scientists and engineers are constantly developing new materials with unique properties that can be used for 3D printing, but figuring out how to print with these materials can be a complex, costly problem.

Often, an expert operator must use manual trial and error, possibly making thousands of prints, to determine ideal parameters that consistently print a new material effectively. These parameters include printing speed and how much material the printer deposits.

MIT researchers have now used artificial intelligence to streamline this procedure. They developed a machine-learning system that uses computer vision to watch the manufacturing process and then correct errors in how it handles the material in real time.

They used simulations to teach a neural network how to adjust printing parameters to minimize error, and then applied that controller to a real 3D printer. Their system printed objects more accurately than all the other 3D printing controllers they compared it to.

The work avoids the prohibitively expensive process of printing thousands or millions of real objects to train the neural network. It could also enable engineers to incorporate novel materials into their prints more easily, which could help them create objects with special electrical or chemical properties. And it could help technicians adjust the printing process on the fly if material or environmental conditions change unexpectedly.

“This project is really the first demonstration of building a manufacturing system that uses machine learning to learn a complex control policy,” says senior author Wojciech Matusik, professor of electrical engineering and computer science at MIT, who leads the Computational Design and Fabrication Group (CDFG) within the Computer Science and Artificial Intelligence Laboratory (CSAIL). “If you have manufacturing machines that are more intelligent, they can adapt to the changing environment in the workplace in real time, to improve the yields or the accuracy of the system. You can squeeze more out of the machine.”


The co-lead authors of the paper are Mike Foshey, a mechanical engineer and project manager in the CDFG, and Michal Piovarči, a postdoc at the Institute of Science and Technology Austria. MIT co-authors include Jie Xu, a graduate student in electrical engineering and computer science, and Timothy Erps, a former technical associate with the CDFG.

Picking parameters

Determining the ideal parameters of a digital manufacturing process can be one of the most expensive parts of the process because so much trial and error is required. And once a technician finds a combination that works well, those parameters are only ideal for one specific situation. She has little data on how the material will behave in other environments, on different hardware, or if a new batch exhibits different properties.

Using a machine-learning system is fraught with challenges, too. First, the researchers needed to measure what was happening on the printer in real time.

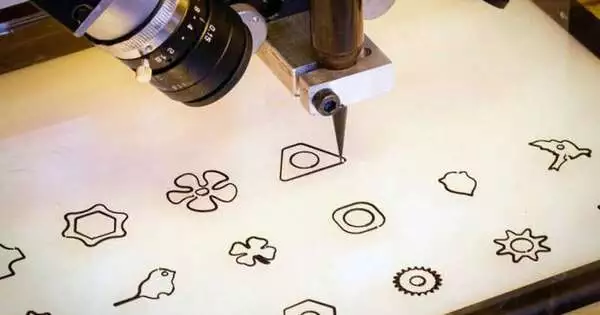

To do this, they developed a machine-vision system using two cameras aimed at the nozzle of the 3D printer. The system shines light on the material as it is deposited and, based on how much light passes through, calculates the material's thickness.
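The article does not say which optical model the vision system uses to turn transmitted light into thickness. As a minimal sketch, assuming the material attenuates light according to the Beer-Lambert law with a per-material coefficient α calibrated in advance, the transmitted intensity I relates to the incident intensity I₀ and thickness d by I = I₀·exp(−α·d), so d = ln(I₀/I)/α. The function names and the calibration coefficient are hypothetical.

```python
import math


def thickness_from_transmission(i_transmitted: float, i_incident: float,
                                attenuation_per_mm: float) -> float:
    """Estimate layer thickness in mm from transmitted light intensity.

    Assumption: Beer-Lambert attenuation, I = I0 * exp(-alpha * d),
    hence d = ln(I0 / I) / alpha. Not taken from the paper.
    """
    if not (0.0 < i_transmitted <= i_incident):
        raise ValueError("transmitted intensity must lie in (0, incident]")
    if attenuation_per_mm <= 0.0:
        raise ValueError("attenuation coefficient must be positive")
    return math.log(i_incident / i_transmitted) / attenuation_per_mm


def thickness_map(image, i_incident: float, attenuation_per_mm: float):
    """Apply the per-pixel estimate to a 2D image (list of rows of intensities)."""
    return [[thickness_from_transmission(px, i_incident, attenuation_per_mm)
             for px in row]
            for row in image]
```

A pixel that passes all the incident light maps to zero thickness, and darker pixels map to thicker material; in practice α would have to be calibrated for each new material.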

“You can think of the vision system as a set of eyes watching the process in real time,” Foshey says.

The controller then processes the images it receives from the vision system and, based on any error it sees, adjusts the feed rate and the direction of the printer.
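The controller in the study is a learned neural-network policy, and the sketch below is not that. It is a hypothetical hand-tuned proportional rule, included only to show what one step of closed-loop correction looks like: measure, compare with the target, adjust the feed rate. It assumes a single scalar thickness measurement per step and ignores print direction. `PrinterCommand`, `proportional_step`, the gain, and the linear printer response in `simulate_loop` are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class PrinterCommand:
    feed_rate: float  # commanded material flow, arbitrary units (hypothetical)


def proportional_step(cmd: PrinterCommand, measured_mm: float, target_mm: float,
                      gain: float = 0.5, min_rate: float = 0.0,
                      max_rate: float = 2.0) -> PrinterCommand:
    """One closed-loop update: too thin -> raise feed rate, too thick -> lower it.

    Assumption: a simple proportional rule, not the paper's learned policy.
    """
    if target_mm <= 0.0:
        raise ValueError("target thickness must be positive")
    error = target_mm - measured_mm  # positive when under-deposited
    new_rate = cmd.feed_rate * (1.0 + gain * error / target_mm)
    new_rate = min(max(new_rate, min_rate), max_rate)  # respect hardware limits
    return PrinterCommand(feed_rate=new_rate)


def simulate_loop(plant_gain: float, target_mm: float = 1.0, steps: int = 50) -> float:
    """Run the loop against a hypothetical linear printer: thickness = plant_gain * rate."""
    cmd = PrinterCommand(feed_rate=1.0)
    for _ in range(steps):
        measured = plant_gain * cmd.feed_rate  # stand-in for the vision measurement
        cmd = proportional_step(cmd, measured, target_mm)
    return cmd.feed_rate
```

With a printer that deposits only 80% of the commanded material (`plant_gain=0.8`), the loop settles near a feed rate of 1.25, the value at which the measured thickness matches the target.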

But training a neural network-based controller to understand this manufacturing process is data-intensive and would require making a huge number of prints. So the researchers built a simulator instead.

Successful simulation

To train their controller, they used a process known as reinforcement learning, in which the model learns through trial and error guided by a reward. The model was tasked with selecting printing parameters that would create a certain object in a simulated environment. After being shown the expected output, the model was rewarded when the parameters it chose minimized the error between its print and the expected outcome.
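Reinforcement learning in its simplest form can be sketched as a multi-armed bandit: try an action, observe a reward, and update a running estimate of how good that action is. The study trains a neural network policy in a full printing simulator; the toy below swaps that for a small table of candidate flow multipliers, epsilon-greedy exploration, and a one-line hypothetical simulator (`simulated_error`) whose error is smallest at a flow of 1.0.

```python
import random


def simulated_error(flow: float, ideal_flow: float = 1.0) -> float:
    """Hypothetical stand-in simulator: error grows with distance from the ideal flow."""
    return abs(flow - ideal_flow)


def train_bandit(actions, episodes: int = 2000, epsilon: float = 0.1, seed: int = 0):
    """Epsilon-greedy bandit: learn which flow multiplier earns the highest reward."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in actions}  # running mean reward per action
    count = {a: 0 for a in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            a = rng.choice(actions)  # explore: try a random setting
        else:
            a = max(actions, key=lambda x: value[x])  # exploit: best estimate so far
        reward = -simulated_error(a)  # smaller printing error -> larger reward
        count[a] += 1
        value[a] += (reward - value[a]) / count[a]  # incremental mean update
    best = max(actions, key=lambda x: value[x])
    return best, value
```

For example, `train_bandit([0.6, 0.8, 1.0, 1.2, 1.4])` settles on 1.0. A real controller has to map camera observations to continuous actions, which is why the researchers used a neural network rather than a lookup table.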

In this case, an “error” means the model either dispensed too much material, placing it in areas that should have been left open, or did not dispense enough, leaving open spots that should have been filled in. As the model performed more simulated prints, it updated its control policy to maximize the reward, becoming more and more accurate.
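The error definition above translates directly into a reward. A minimal sketch, assuming the print and the target are compared as binary occupancy grids (1 = material, 0 = empty) and that over- and under-deposition are penalized equally; both the grid representation and the equal weighting are assumptions, not details from the paper.

```python
def deposition_errors(printed, target):
    """Count over- and under-deposited cells between two same-shaped 0/1 grids."""
    if len(printed) != len(target) or any(
            len(p) != len(t) for p, t in zip(printed, target)):
        raise ValueError("grids must have the same shape")
    over = under = 0
    for prow, trow in zip(printed, target):
        for p, t in zip(prow, trow):
            if p and not t:
                over += 1   # material placed where the target is open
            elif t and not p:
                under += 1  # open spot that should have been filled
    return over, under


def reward(printed, target) -> int:
    """Assumed reward: negative total error, so a perfect print scores 0."""
    over, under = deposition_errors(printed, target)
    return -(over + under)
```

Weighting the two error types differently would be a natural variation if, for example, overfilling were considered worse than leaving gaps.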

However, the real world is messier than a simulation. In practice, conditions typically change due to slight variations or noise in the printing process. So the researchers created a numerical model that approximates the noise of the 3D printer. They used this model to add noise to the simulation, which led to more realistic results.
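The article does not describe the form of the researchers' numerical noise model. As an illustrative assumption, the sketch below perturbs each commanded flow value with multiplicative Gaussian noise before it is "deposited" in the simulator, so a policy trained on it never sees a perfectly repeatable printer. `simulate_bead` and `noise_std` are hypothetical names.

```python
import random


def simulate_bead(flows, noise_std: float = 0.05, seed=None):
    """Return deposited height per cell for a list of commanded flows.

    Assumption: height = flow * (1 + N(0, noise_std)), clamped at zero.
    This is an illustrative noise model, not the one from the paper.
    """
    rng = random.Random(seed)
    heights = []
    for f in flows:
        factor = (1.0 + rng.gauss(0.0, noise_std)) if noise_std > 0 else 1.0
        heights.append(max(0.0, f * factor))  # material height cannot be negative
    return heights
```

Fixing `seed` makes a noisy rollout reproducible for debugging, while leaving it as `None` gives fresh noise on every simulated print.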

“The interesting thing we found was that, by implementing this noise model, we were able to transfer the control policy that was purely trained in simulation onto hardware without training with any physical experimentation,” Foshey says. “We didn't need to do any fine-tuning on the actual equipment afterwards.”

When they tested the controller, it printed objects more accurately than any other control method they evaluated. It performed especially well at infill printing, which is printing the interior of an object. Some other controllers deposited so much material that the printed object bulged up, but the researchers' controller adjusted the printing path so the object stayed level.

Their control policy can even learn how materials spread after being deposited and adjust parameters accordingly.

“We were also able to design control policies that could control for different types of materials on the fly. So if you had a manufacturing process out in the field and you wanted to change the material, you wouldn't have to revalidate the manufacturing process. You could just load the new material and the controller would automatically adjust,” Foshey says.

Now that they have shown the effectiveness of this technique for 3D printing, the researchers want to develop controllers for other manufacturing processes. They'd also like to see how the approach can be modified for scenarios where there are multiple layers of material or multiple materials being printed at once. In addition, their approach assumed each material has a fixed viscosity (“syrupiness”), but a future iteration could use AI to recognize and adjust for viscosity in real time.

More information: Michal Piovarči et al, Closed-loop control of direct ink writing via reinforcement learning, ACM Transactions on Graphics (2022). DOI: 10.1145/3528223.3530144