Researchers have developed a new machine-learning framework that uses scene descriptions in movie scripts to automatically recognize the actions of different characters. Applying the framework to hundreds of movie scripts showed that these actions tend to reflect widespread gender stereotypes, some of which are consistent across time. Victor Martinez and colleagues at the University of Southern California, U.S., present these findings in the open-access journal PLOS ONE on December 21.

Movies, TV shows, and other media consistently portray traditional gender stereotypes, some of which may be harmful. To deepen understanding of this issue, some researchers have explored computational frameworks as an efficient and accurate way to analyze large amounts of character dialogue in scripts. However, some harmful stereotypes may be communicated not through what characters say but through what they do.

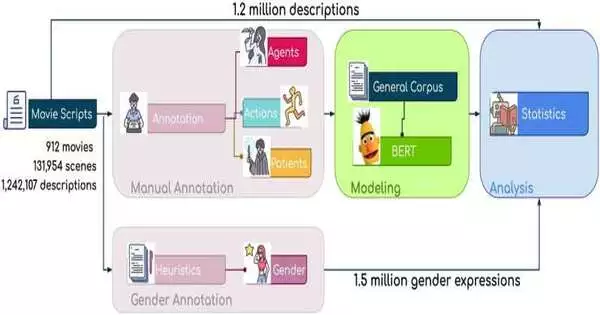

To explore how characters’ actions might reflect stereotypes, Martinez and colleagues used machine learning to build a computational model that can automatically analyze scene descriptions in movie scripts and identify different characters’ actions. Using this model, the researchers analyzed over 1.2 million scene descriptions from 912 movie scripts produced from 1909 to 2013, identifying 50,000 actions performed by 20,000 characters.

Next, the researchers conducted statistical analyses to examine whether the types of actions performed differed between characters of different genders. These analyses identified a number of differences that reflect known gender stereotypes.

For instance, they found that female characters tend to display less agency than male characters, and that female characters are more likely to show affection. Male characters are less likely to “cry,” and female characters are more likely to be subjected to “gape” or “watching” by other characters, highlighting an emphasis on female appearance.
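The article does not say which statistical tests the researchers used. As a rough illustration of how an action can be compared across character genders, the sketch below computes a smoothed log-odds ratio per verb. The function name, the smoothing constant, and all counts are assumptions invented for this example; none of them come from the study.

```python
import math


def log_odds_ratio(action_f, total_f, action_m, total_m, smoothing=0.5):
    """Smoothed log-odds ratio for one action.

    Positive values mean the action is relatively more frequent among
    female characters; negative values, among male characters.
    The smoothing term avoids division by zero for rare actions.
    """
    odds_f = (action_f + smoothing) / (total_f - action_f + smoothing)
    odds_m = (action_m + smoothing) / (total_m - action_m + smoothing)
    return math.log(odds_f / odds_m)


# Hypothetical counts, invented for illustration only (not the study's data).
counts = {
    "cry": {"female": 30, "male": 10},
    "drive": {"female": 10, "male": 30},
    "look": {"female": 60, "male": 60},
}

# Total number of recorded actions per gender in this toy data set.
totals = {
    gender: sum(c[gender] for c in counts.values())
    for gender in ("female", "male")
}

scores = {
    verb: log_odds_ratio(c["female"], totals["female"], c["male"], totals["male"])
    for verb, c in counts.items()
}

for verb, score in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{verb}: {score:+.2f}")
```

In a real analysis, a score like this would be paired with a significance test and a correction for multiple comparisons before drawing any conclusion about a given action.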

While the researchers’ model is limited in its ability to fully capture the nuanced societal context linking the script to each scene and the overall narrative, these findings align with prior research on gender stereotypes in popular media. They could help raise awareness of how media might perpetuate harmful stereotypes and thereby influence people’s real-life beliefs and actions.

In the future, the new machine-learning framework could be refined and applied to incorporate notions of intersectionality, such as between gender, age, and race, to deepen understanding of this issue.

The authors add, “Researchers have proposed using machine-learning methods to identify stereotypes in character dialogues in media, but these methods do not account for harmful stereotypes communicated through character actions. To address this issue, we developed a large-scale machine-learning framework that can identify character actions from movie script descriptions. By collecting 1.2 million scene descriptions from 912 movie scripts, we were able to study systematic gender differences in movie portrayals at a large scale.”

More information: Boys don’t cry (or kiss or dance): A computational linguistic lens into gendered actions in film, PLoS ONE (2022). DOI: 10.1371/journal.pone.0278604

Journal information: PLoS ONE