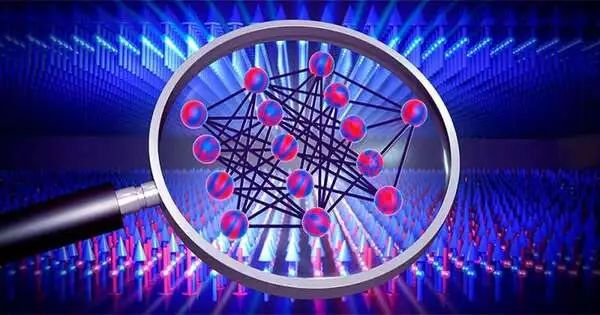

Neural networks are learning algorithms that approximate the solution to a task by training on available data. However, it is usually unclear how exactly they accomplish this. Two young Basel physicists have now derived mathematical expressions that allow one to calculate the optimal solution without training a network. Their results not only provide insight into how those learning algorithms work, but could also help detect unknown phase transitions in physical systems in the future.

Neural networks are modeled on the way the brain works. Such computer algorithms learn to solve problems through repeated training and can, for example, distinguish objects or process language.

For some time now, physicists have been trying to use neural networks to detect phase transitions as well. Phase transitions are familiar to us from everyday experience, for instance when water freezes to ice, but they also occur in more complex forms between different phases of magnetic materials or quantum systems, where they are often difficult to detect.

“This shortcut significantly decreases computing time. Our solution is calculated in less time than a single training round of a small network.”

Arnold and Schäfer

Julian Arnold and Frank Schäfer, two Ph.D. students in the research group of Prof. Dr. Christoph Bruder at the University of Basel, have now derived mathematical expressions with which such phase transitions can be found faster than before. They recently published their results in the journal Physical Review X.

Skipping training saves time

A neural network learns by systematically adjusting its parameters over many training rounds so that the predictions it computes match the training data fed into it more and more closely. That training data can be the pixels of images or, indeed, the results of measurements on a physical system exhibiting phase transitions that one would like to learn something about.
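As a rough illustration of this kind of iterative training, the Python sketch below fits the simplest possible model, a straight line with just two parameters, to data by gradient descent, a standard way of nudging parameters step by step to shrink the mismatch between predictions and data. The helper `train_linear`, the learning rate and the number of rounds are hypothetical choices for illustration, not details taken from the study; a real network has vastly more parameters.

```python
import numpy as np

def train_linear(x, y, lr=0.5, rounds=500):
    """Fit y ~ a*x + b by plain gradient descent on the mean squared error.

    Hypothetical helper for illustration only.
    """
    a, b = 0.0, 0.0  # adjustable parameters, starting from an arbitrary guess
    for _ in range(rounds):
        err = a * x + b - y  # prediction minus training data
        # Shift each parameter a little against the gradient of the loss
        a -= lr * 2.0 * np.mean(err * x)
        b -= lr * 2.0 * np.mean(err)
    return a, b

# Toy training data generated from the line y = 3x + 1
x = np.linspace(0.0, 1.0, 50)
y = 3.0 * x + 1.0
a, b = train_linear(x, y)
print(f"a = {a:.4f}, b = {b:.4f}")  # prints a = 3.0000, b = 1.0000
```

Each pass through the loop corresponds to one training round; the parameters drift toward the values that best reproduce the data.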

“Neural networks have already become very good at detecting phase transitions,” says Arnold, “but how exactly they do it usually remains completely unclear.” To change that and shed some light into the “black box” of a neural network, Arnold and Schäfer looked at the special case of networks with an infinite number of parameters, which, in principle, go through an infinite number of training rounds.

It has been known for some time that the predictions of such networks tend towards a specific optimal solution. Arnold and Schäfer took this as a starting point for deriving mathematical formulas that allow one to calculate that optimal solution directly, without actually training the network. “This shortcut significantly decreases computing time,” Arnold explains: “Calculating our solution takes no longer than a single training round of a small network.”
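The general principle, that a sufficiently flexible model trained long enough settles on an optimum which could have been computed directly, can be illustrated with a textbook fact: for a mean-squared-error loss, the best possible prediction for a given input is the average of the training targets observed with that input. The Python sketch below is a toy analogue built on that assumption, not the authors' analytical predictors. A hypothetical system emits a discrete observation `x` whose statistics depend on a tuning parameter `p`, and the task is to predict `p` from `x`. A lookup-table model with one free parameter per possible observation is trained by gradient descent and compared with the closed-form average, which needs no training at all. All names, probabilities and hyperparameters are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
N_STATES = 3  # possible observation values x = 0, 1, 2 (hypothetical toy system)

def sample_x(p, n):
    # Illustrative assumption: low p favors x = 0, high p favors x = 2
    probs = np.array([1.0 - p, 0.2, p]) / 1.2
    return rng.choice(N_STATES, size=n, p=probs)

# Training data: n samples at each value of the tuning parameter p
p_grid = np.linspace(0.0, 1.0, 11)
n = 200
xs = np.concatenate([sample_x(p, n) for p in p_grid])
ps = np.repeat(p_grid, n)

def optimal_predictor(xs, ps):
    """Closed form: the MSE-optimal prediction for x is the mean of p over samples with that x."""
    return np.array([ps[xs == x].mean() for x in range(N_STATES)])

def train_table(xs, ps, lr=0.5, epochs=2000):
    """Gradient descent on a lookup table with one free parameter per observation value."""
    w = np.zeros(N_STATES)
    for _ in range(epochs):
        residual = w[xs] - ps
        # Gradient of the mean squared error with respect to each table entry
        grad = np.bincount(xs, weights=2.0 * residual, minlength=N_STATES) / len(xs)
        w -= lr * grad
    return w

direct = optimal_predictor(xs, ps)  # no training needed
trained = train_table(xs, ps)       # thousands of training rounds
print(np.round(direct, 4))
print(np.round(trained, 4))         # should agree with the direct result
```

With these assumed probabilities both lines print values close to 0.3, 0.5 and 0.7: observations typical of low `p` map to low predictions and vice versa. The sketch only shows that the training loop converges to a result available in closed form all along; it says nothing about how the study's own formulas are constructed.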

Insight into the network

Besides saving time, the Basel physicists’ method also provides insight into how neural networks work and, thus, into the physical systems under study.

So far, Arnold and Schäfer have tested their method on computer-generated data. The next step is to apply it to real measurement data as well. In the future, this could make it possible to detect as yet unknown phase transitions, for example in quantum simulators or in novel materials.

More information: Julian Arnold et al, Replacing Neural Networks by Optimal Analytical Predictors for the Detection of Phase Transitions, Physical Review X (2022). DOI: 10.1103/PhysRevX.12.031044

Journal information: Physical Review X