A team of New York University computer scientists has created a neural network that can explain how it arrives at its predictions. The work reveals what accounts for the functionality of neural networks, the engines that drive artificial intelligence and machine learning, thereby illuminating a process that has largely been hidden from users.

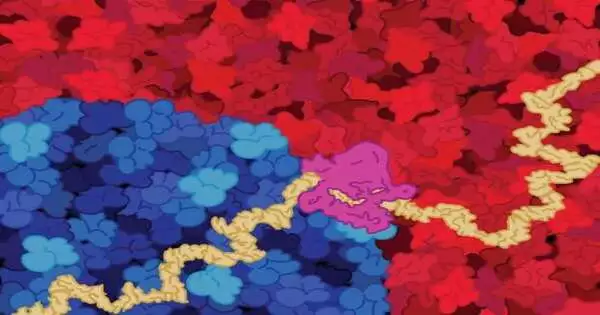

The research focuses on a particular use of neural networks that has become popular in recent years: tackling biological questions. Among these are examinations of the intricacies of RNA splicing, the focal point of the study, which plays a role in transferring information from DNA to functional RNA and protein products.

“Many neural networks are black boxes: these algorithms cannot explain how they work, raising concerns about their trustworthiness and stifling progress into understanding the underlying biological processes of genome encoding,” says Oded Regev, a computer science professor at NYU’s Courant Institute of Mathematical Sciences and the senior author of the paper, which was published in the Proceedings of the National Academy of Sciences.


“By taking a new approach that improves both the quantity and the quality of the data used for machine-learning training, we designed an interpretable neural network that can accurately predict complex outcomes and explain how it arrives at its predictions.”

Regev and the paper’s other authors, Susan Liao, a faculty fellow at the Courant Institute, and Mukund Sudarshan, a Courant doctoral student at the time of the study, built a neural network based on what is already known about RNA splicing.

Specifically, they developed a model, the data-driven equivalent of a high-powered microscope, that allows scientists to trace and quantify the RNA splicing process from the input sequence to the output splicing prediction.

“Using an ‘interpretable-by-design’ approach, we’ve developed a neural network model that provides insights into RNA splicing, a fundamental process in the transfer of genomic information,” notes Regev. “Our model revealed that a small, hairpin-like structure in RNA can decrease splicing.”

The researchers confirmed the model’s insights through a series of experiments. The results matched the model’s discovery: whenever the RNA molecule folded into a hairpin structure, splicing was halted, and as soon as the researchers disrupted this hairpin, splicing was restored.

More information: Susan E. Liao et al, Deciphering RNA splicing logic with interpretable machine learning, Proceedings of the National Academy of Sciences (2023). DOI: 10.1073/pnas.2221165120