To connect effectively with humans, robots should be able to take an active part in both group and one-on-one interactions in busy social settings such as malls, hospitals, and other public places. However, most existing robots have been found to interact far better with individual users than with groups of people in conversation.

Two University of North Carolina at Chapel Hill researchers, Hooman Hedayati and Daniel Szafir, have recently created a new data-driven technique that could improve how robots connect with groups of humans. This method, which was presented in a paper at the 2022 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’22), allows robots to predict the positions of humans in conversational groups so that they do not mistakenly ignore a person when their sensors are partially or completely obstructed.

“Being in a conversational group is easy for humans but challenging for robots,” Hooman Hedayati, one of the researchers who carried out the study, told TechXplore. “Imagine that you are talking with a group of friends, and whenever one of your friends blinks, she stops talking and asks whether you are still there. This potentially annoying scenario is roughly what can happen when a robot is in a conversational group.”

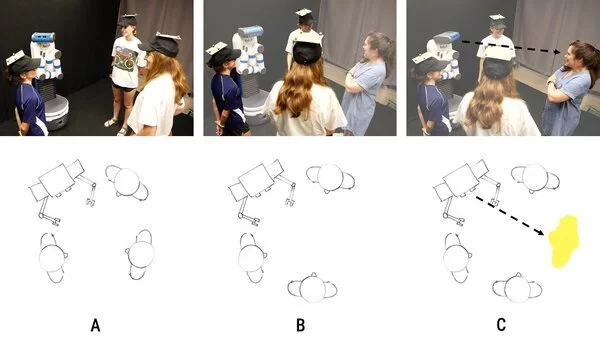

Many robots occasionally misbehave while taking part in group conversations because their actions rely primarily on data collected by their sensors (e.g., cameras and depth sensors). Sensors, however, are prone to inaccuracies and can be disrupted by unexpected movements and obstructions in the robot’s environment.

For instance, “if the robot’s camera is blocked by an obstacle for a second, similar to when people blink,” Hedayati explained, “the robot may not see that person and, as a result, ignores the user.

In my experience, users are disturbed by these misbehaviors. The main goal of our current project was to help robots detect and predict the position of an undetected human within a conversational group.”

To predict people’s positions in a conversational group, Hedayati and Szafir first created an algorithm that examines a robot’s beliefs about who is and is not a member of the group. This algorithm can spot the robot’s mistakes (i.e., whether it is ignoring the presence of one or more people in the group). It then analyzes the available data to estimate where the undetected user or users are.
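The first step, noticing that the robot may be ignoring someone, can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that the robot tracks each group member by an ID with a last-seen timestamp, and that a member who drops out of the current detections but was seen within a short timeout is probably occluded rather than gone. The `GroupBelief` class and its 3-second `timeout` are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class GroupBelief:
    """Hypothetical belief state: which people the robot thinks are in its group."""

    # Seconds a member may go unseen before being dropped (an assumed value).
    timeout: float = 3.0
    # Maps each believed member's ID to the last time the robot detected them.
    last_seen: dict[str, float] = field(default_factory=dict)

    def update(self, detected_ids: set[str], now: float) -> set[str]:
        """Record current detections; return members believed present but not detected."""
        for pid in detected_ids:
            self.last_seen[pid] = now
        # Forget anyone who has been unseen for longer than the timeout.
        self.last_seen = {
            pid: t for pid, t in self.last_seen.items() if now - t <= self.timeout
        }
        # Members still in the belief state but missing from this frame are
        # likely occluded: these are the people whose positions need predicting.
        return set(self.last_seen) - detected_ids
```

For example, after `update({"a", "b", "c"}, 0.0)`, a later call `update({"a", "b"}, 1.0)` returns `{"c"}`, flagging person `c` for position prediction instead of silently ignoring them. Once `c` has been unseen for longer than the timeout, the sketch drops them from the group.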

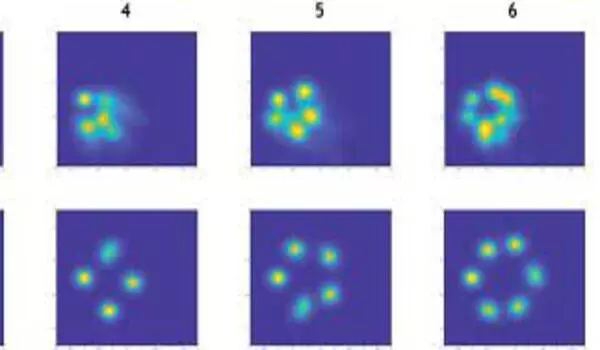

“Our method is based on one of our previous observations,” Hedayati added. “While cleaning the ‘Babble dataset’ (a dataset of human-human conversational groups), we noticed that people tend to stay in specific positions relative to one another. This means that if we know the positions of everyone in a conversational group except one person, we can guess where that person will be.”
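The intuition that people hold predictable positions relative to one another can be shown with a simple geometric sketch. This is an assumption for illustration only, not the researchers’ data-driven model: it supposes that group members stand roughly on a circle around a shared center, fits that circle to the detected members with a least-squares (Kåsa) fit, and places the single undetected member in the middle of the widest angular gap. The function names `fit_circle` and `predict_missing` are hypothetical.

```python
import math

Point = tuple[float, float]


def _det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points: list[Point]) -> tuple[Point, float]:
    """Least-squares (Kasa) circle fit; needs at least 3 non-collinear points."""
    # Solve the normal equations for x^2 + y^2 + D*x + E*y + F = 0.
    z = [x * x + y * y for x, y in points]
    sxx = sum(x * x for x, _ in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    szx = sum(zi * x for zi, (x, _) in zip(z, points))
    szy = sum(zi * y for zi, (_, y) in zip(z, points))
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [-szx, -szy, -sum(z)]
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("need at least 3 non-collinear points")
    # Cramer's rule: replace one column at a time with the right-hand side.
    sol = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        sol.append(_det3(mc) / det)
    d, e, f = sol
    cx, cy = -d / 2, -e / 2
    return (cx, cy), math.sqrt(cx * cx + cy * cy - f)


def predict_missing(known: list[Point]) -> Point:
    """Guess where one undetected member stands: mid-point of the widest gap."""
    (cx, cy), r = fit_circle(known)
    angles = sorted(math.atan2(y - cy, x - cx) for x, y in known)
    best_gap, best_mid = -1.0, 0.0
    for i, a in enumerate(angles):
        nxt = angles[(i + 1) % len(angles)]
        # The modulo handles the wrap-around gap from the last angle to the first.
        gap = (nxt - a) % (2 * math.pi)
        if gap > best_gap:
            best_gap, best_mid = gap, a + gap / 2
    return (cx + r * math.cos(best_mid), cy + r * math.sin(best_mid))
```

For instance, with three detected people at `(3, 3)`, `(2, 4)`, and `(1, 3)`, the fitted circle is centered at `(2, 3)` with radius 1, and the sketch predicts the fourth person at approximately `(2, 2)`, filling the empty side of the group. A learned model, like the one described in the paper, can capture patterns that this fixed geometric rule cannot.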

Hedayati and Szafir’s method was trained on a collection of existing datasets containing annotated videos of groups of people interacting with one another. By analyzing the positions of the other members of a group, it can reliably predict the position of an undetected user.

Hedayati remarked, “We demonstrated that we can model human behaviors for robots such that they have a greater understanding of the dynamics of conversational groups.”

In the future, the researchers’ approach could be used to improve the conversational abilities of both existing and newly developed robots. This, in turn, could make such robots easier to deploy in large public venues such as malls, hospitals, and clinics.

“We are committed to developing human-robot conversational groups, and there are many interesting open questions in this area (for example, how to recognize who the active speaker is, where robots should stand in a group, how to join a group, and so on),” Hedayati noted. “For us, the next step will be to improve robot gaze behavior in conversational groups. People perceive robots with better gaze behavior (for example, robots that recognize who is speaking and look at that person) as more intelligent. We aim to improve robot gaze behavior and make human-robot conversational groups more engaging for people.”