Meta made a significant advance this week with the release of a model that can recognize and isolate objects in an image even if it has never seen them before. The technology is introduced and described in a paper posted to the arXiv preprint server.

The AI tool represents a significant advancement in one of technology’s more difficult problems: enabling computers to recognize and understand the components of a previously unseen image and isolate them for user interaction.

It brings to mind a theory put forth by Robert O., the former leader of the National Security Commission on Artificial Intelligence. As one employee once put it, “AI and machine learning allow you to find the needle in the haystack.”

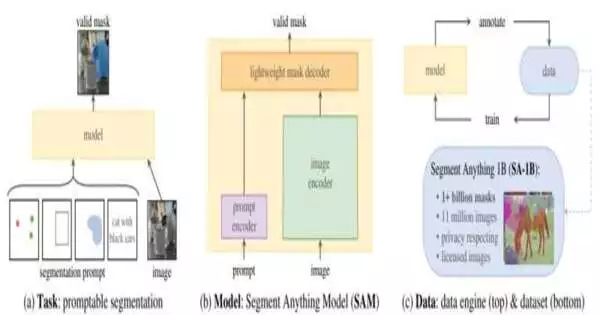

Here, the needle is an object: Meta’s Segment Anything Model (SAM) looks for related pixels in an image and identifies the shared features that tie the individual parts of the picture together.

According to a blog post published on Wednesday by Meta AI, “SAM has learned a general notion of what objects are, and it can generate masks for any object in any image or any video, even including objects and image types that it had not encountered during training.”

Segmentation is the process of identifying which pixels in an image belong to which object. We do it every day without thinking: smartphones, cables, computer screens, lamps, melting candy bars, and cups of coffee are all items we pick out on our desks at work without effort.

However, without any prior programming, a computer must work hard to separate every pixel in a two-dimensional image, and the task is made more challenging by overlapping objects, shadows, and irregular or partitioned shapes.
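To make "separating every pixel" concrete, here is a minimal sketch of what a segmentation result looks like as data: every pixel gets the ID of the region it belongs to. The function name `label_regions` and the toy image are hypothetical, and the grouping rule (neighboring pixels with identical values) is a deliberately naive stand-in. It is not how SAM works, and it would fail on exactly the shadows and overlaps described above.

```python
from collections import deque


def label_regions(image):
    """Give every pixel a region ID by grouping 4-connected pixels of equal value.

    Illustrative toy only: real segmentation models such as SAM learn what
    objects look like instead of relying on exact pixel equality.
    """
    height, width = len(image), len(image[0])
    labels = [[-1] * width for _ in range(height)]  # -1 means "not labeled yet"
    next_id = 0
    for row in range(height):
        for col in range(width):
            if labels[row][col] != -1:
                continue
            # Breadth-first search outward from the first unlabeled pixel.
            value = image[row][col]
            labels[row][col] = next_id
            queue = deque([(row, col)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    in_bounds = 0 <= ny < height and 0 <= nx < width
                    if in_bounds and labels[ny][nx] == -1 and image[ny][nx] == value:
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1
    return labels, next_id


# A 3x4 toy "image" with three flat-colored objects.
toy_image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 1],
]
labels, count = label_regions(toy_image)
print(count)   # 3
print(labels)  # [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 2, 1]]
```

The per-object mask is then just the set of pixels carrying one ID. The hard part, which this toy skips entirely, is deciding which pixels belong together when their colors differ.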

In earlier segmentation methods, defining a mask typically required human involvement. Automated segmentation could detect objects without that step, but Meta AI says it required “thousands or even tens of thousands of examples” of objects, in addition to “computer resources and technical expertise to train the segmentation model.”

SAM combines the two strategies into a fully automated system. It can recognize new kinds of objects thanks to the more than 1 billion masks in its training dataset.

According to the Meta blog, “this ability to generalize means that, on the whole, practitioners will no longer need to collect their own segmentation data and fine-tune a model for their use case.”

SAM was described as “Photoshop’s ‘Magic Wand’ tool on steroids” by one reviewer.

SAM can be prompted with user clicks or text commands. According to Meta researchers, SAM could find further use in the AR/VR space: when a user focuses on an object, it can be outlined, lifted into a 3D image, and then included in a presentation, game, or movie.

A free working demo is available online. Users can upload their own photos or choose from an image gallery. They can then tap anywhere on the screen or draw a rectangle around an object of interest to watch SAM define, for example, the outline of a nose, a face, or an entire body. Another option instructs SAM to identify every object in an image.
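The click and rectangle prompts described above can be illustrated with a toy version of the "Magic Wand" idea: start at the clicked pixel and grow a mask over neighboring pixels of similar brightness, optionally staying inside a box. This is a sketch under stated assumptions, not SAM's method or API; the function `click_to_mask`, its `tolerance` and `box` parameters, and the sample image are all hypothetical.

```python
from collections import deque


def click_to_mask(image, click, tolerance=10, box=None):
    """Return a boolean mask grown from a clicked pixel (toy "magic wand").

    image:     2D list of grayscale intensities.
    click:     (row, col) of the clicked pixel.
    tolerance: maximum intensity difference from the clicked pixel.
    box:       optional (top, left, bottom, right), inclusive, limiting the mask.
    """
    height, width = len(image), len(image[0])
    top, left, bottom, right = box if box else (0, 0, height - 1, width - 1)
    # Clamp the box to the image so out-of-range boxes cannot cause index errors.
    top, left = max(top, 0), max(left, 0)
    bottom, right = min(bottom, height - 1), min(right, width - 1)

    row, col = click
    if not (top <= row <= bottom and left <= col <= right):
        raise ValueError("click must fall inside the image and the box")

    target = image[row][col]
    mask = [[False] * width for _ in range(height)]
    mask[row][col] = True
    queue = deque([(row, col)])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            inside = top <= ny <= bottom and left <= nx <= right
            if inside and not mask[ny][nx] and abs(image[ny][nx] - target) <= tolerance:
                mask[ny][nx] = True
                queue.append((ny, nx))
    return mask


# Toy grayscale image: a bright object top-left, a dark one on the right.
gray = [
    [200, 198, 40, 42],
    [201, 199, 41, 43],
    [90, 92, 95, 44],
]

clicked = click_to_mask(gray, click=(0, 0), tolerance=10)
# [[True, True, False, False], [True, True, False, False], [False, False, False, False]]

boxed = click_to_mask(gray, click=(0, 0), tolerance=10, box=(0, 0, 0, 3))
# [[True, True, False, False], [False, False, False, False], [False, False, False, False]]
```

A brightness threshold like this breaks down as soon as an object has texture or shading, which is the gap a learned model is meant to close.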

Although SAM has not yet been deployed on Facebook, similar technology already powers familiar features such as photo tagging, the moderation and flagging of prohibited content, and the generation of recommended posts on both Facebook and Instagram.

More information: Alexander Kirillov et al, Segment Anything, arXiv (2023). DOI: 10.48550/arxiv.2304.02643