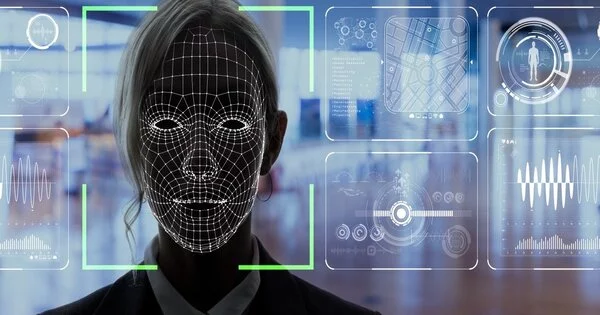

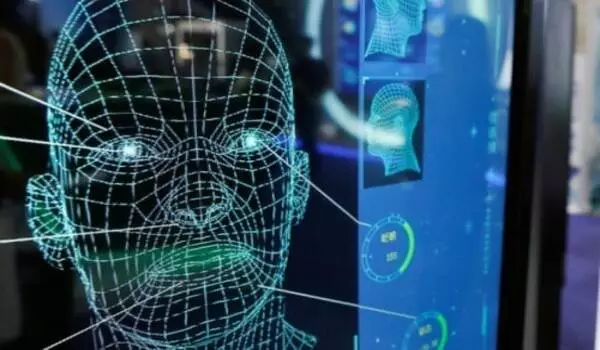

Facial recognition technology can be a powerful tool in a wide range of applications, but it also raises significant privacy and civil liberties concerns. There have been numerous calls to regulate the technology so that it is used responsibly and in a way that respects individual rights.

One approach to regulating facial recognition technology is to establish clear guidelines for its use, backed by strong oversight mechanisms that enforce compliance. This might include creating a regulatory body responsible for both setting and enforcing standards for the use of facial recognition technology.

Another approach is to develop technical standards and best practices for the responsible use of facial recognition technology. These might include guidelines for the accuracy and reliability of facial recognition systems, as well as for the security of the data those systems collect and use.

A new report from the Human Technology Institute at the University of Technology Sydney (UTS) outlines a model law for facial recognition technology to protect against harmful use while also encouraging innovation for public benefit.

Australian law was not written with the widespread use of facial recognition in mind. The report, led by UTS Industry Professors Edward Santow and Nicholas Davis, proposes reforms to modernize Australian law, particularly to address threats to privacy and other human rights.

In recent years, the use of facial recognition and other remote biometric technologies has grown rapidly, raising concerns about privacy, mass surveillance, and unfair outcomes when the technology makes mistakes, which fall particularly on people of color and women.

An investigation by the consumer advocacy group CHOICE in June 2022 revealed that several large Australian retailers were using facial recognition to identify customers entering their stores, prompting community outrage and calls for tighter regulation. Calls for facial recognition law reform have also grown in Australia and around the world.

The new report responds to those calls. It acknowledges that faces hold a unique place in human life, because people rely heavily on one another's faces to identify each other and to interact. As a result, we are especially vulnerable to human rights violations when this technology is misused or overused.

“When facial recognition applications are well designed and regulated, they can provide real benefits by assisting in the identification of people on a large scale. People who are blind or have a vision impairment frequently use the technology, making the world more accessible to those groups,” said Professor Santow, former Australian Human Rights Commissioner and current Co-Director of the Human Technology Institute.

“This report proposes a model law for facial recognition that is risk-based. The first step should be to ensure that facial recognition is developed and used in ways that respect people’s fundamental human rights,” he said.

“The gaps in our current legislation have resulted in a type of regulatory market failure. Many well-known companies have backed away from offering facial recognition because consumers are not adequately protected. Companies that continue to provide services in this area are not required to focus on the basic rights of people affected by this technology,” agreed Professor Davis, Co-Director of the Human Technology Institute and a former member of the executive committee of the World Economic Forum in Geneva.

“Many civil society organizations, government and inter-governmental bodies, and independent experts have sounded the alarm about dangers associated with current and predicted uses of facial recognition,” he said.

The report calls on Australian Attorney-General Mark Dreyfus to lead a national facial recognition reform process, beginning with the introduction of a bill into the Australian Parliament based on the model law set out in the report.

The report also recommends that the Office of the Australian Information Commissioner be given responsibility for regulating the development and use of this technology in the federal jurisdiction, with a harmonized approach in state and territory jurisdictions. The model law establishes three levels of human rights risk to individuals affected by a specific facial recognition application, and also takes into account risks to the broader community.

Under the model law, anyone who develops or deploys facial recognition technology must first assess the level of human rights risk their application poses. Members of the public and the regulator can then challenge that assessment. Based on the assessed risk level, the model law sets out a cumulative set of legal requirements, restrictions, and prohibitions.