In the film “Top Gun: Maverick,” Maverick, played by Tom Cruise, is charged with training young pilots to complete a seemingly impossible mission: fly their jets deep into a rocky canyon, staying so low to the ground that radar cannot detect them, then rapidly climb out of the canyon at an extreme angle to avoid the rock walls. Spoiler alert: with Maverick’s help, these human pilots accomplish their mission.

A machine, on the other hand, would struggle to complete the same pulse-pounding task. For an autonomous aircraft, for instance, the most direct path to the target conflicts with what the aircraft must do to avoid hitting the canyon walls or to stay undetected. Many existing AI methods cannot overcome this conflict, known as the stabilize-avoid problem, and would be unable to reach their goal safely.

Researchers at MIT have developed a new technique that solves complex stabilize-avoid problems better than other approaches. Their machine-learning method matches or exceeds the safety of existing methods while providing a tenfold increase in stability, meaning the agent reaches its goal region and remains stable within it.

In a test that would make Maverick proud, their technique successfully piloted a simulated jet aircraft through a narrow corridor without crashing into the ground.

“This has been a longstanding, challenging problem. Many people have looked at it but didn’t know how to handle such high-dimensional and complex dynamics,” says Chuchu Fan, the Wilson Assistant Professor of Aeronautics and Astronautics, a member of the Laboratory for Information and Decision Systems (LIDS), and senior author of a new paper on this technique.

Fan is joined by lead author Oswin So, a graduate student. The paper will be presented at the Robotics: Science and Systems conference, held in Korea from July 10 to 14. The paper is available on the arXiv preprint server.

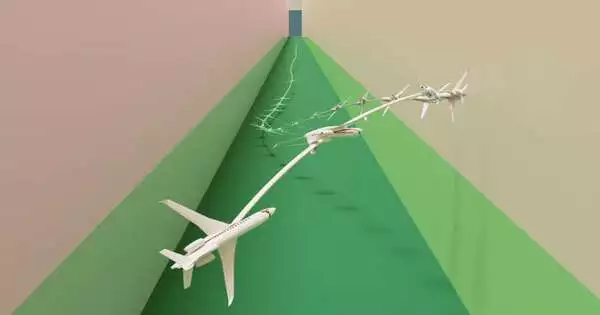

This video shows how the researchers successfully flew a simulated jet aircraft in a scenario where it had to stabilize near a target, maintain a very low altitude, and stay within a narrow flight corridor.

The stabilize-avoid challenge

Many existing methods tackle complex stabilize-avoid problems by simplifying the system so that it can be solved with straightforward math. However, the simplified results often do not hold up against real-world dynamics.

More effective techniques use reinforcement learning, a type of machine learning in which an agent learns by trial and error and receives a reward for behavior that brings it closer to a goal. But there are really two objectives here, staying stable and avoiding obstacles, and finding the right balance between them is tedious.
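To make the balancing problem concrete, here is a minimal Python sketch, assuming a hand-shaped reward for a 2D point agent that adds a stabilize term (negative distance to the goal) to a weighted obstacle penalty. The positions, the two candidate paths, and the `obstacle_weight` parameter are hypothetical, not taken from the MIT work. With a small weight the summed reward favors cutting straight through the obstacle; with a large one it favors the detour.

```python
import math

Point = tuple[float, float]

# Hypothetical scenario: all coordinates and sizes are illustrative assumptions.
GOAL: Point = (10.0, 0.0)
OBSTACLE: Point = (5.0, 0.0)
RADIUS = 2.0


def shaped_reward(pos: Point, obstacle_weight: float) -> float:
    """Weighted-sum reward: stabilize term minus a weighted obstacle penalty."""
    stabilize = -math.dist(pos, GOAL)  # closer to the goal -> higher reward
    # Penetration depth is > 0 only when the agent is inside the obstacle.
    penetration = max(0.0, RADIUS - math.dist(pos, OBSTACLE))
    return stabilize - obstacle_weight * penetration


def trajectory_return(path: list[Point], obstacle_weight: float) -> float:
    """Undiscounted sum of rewards along a fixed path."""
    return sum(shaped_reward(p, obstacle_weight) for p in path)


# Two candidate paths from (0, 0) to the goal.
STRAIGHT = [(float(i), 0.0) for i in range(11)]  # passes through the obstacle
DETOUR = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 3.0), (5.0, 3.0),
          (6.0, 3.0), (7.0, 3.0), (8.0, 2.0), (9.0, 1.0), (10.0, 0.0)]  # stays clear


def preferred_path(obstacle_weight: float) -> str:
    """Return which path the shaped reward ranks higher for a given weight."""
    straight = trajectory_return(STRAIGHT, obstacle_weight)
    detour = trajectory_return(DETOUR, obstacle_weight)
    return "straight" if straight > detour else "detour"


print(preferred_path(1.0))   # straight: the penalty is too cheap, so it cuts through
print(preferred_path(10.0))  # detour
```

In this toy the preference flips at an `obstacle_weight` of roughly 1.5; a different map moves that crossover, which is why a single weighted reward has to be retuned by hand.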

The MIT researchers broke the problem down into two steps. First, they reframe the stabilize-avoid problem as a constrained optimization problem. In this setup, solving the optimization enables the agent to reach and stabilize to its goal, meaning it stays within a certain region. By applying constraints, they ensure the agent avoids obstacles, So explains.
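As a rough illustration, here is one generic way to write such a constrained problem. The symbols are our own assumptions, not notation taken from the paper: x_k is the system state at step k, π is the control policy, f is the discrete-time dynamics, ℓ(x) ≥ 0 is a cost that is zero only inside the goal region, and h(x) > 0 marks states inside an obstacle.

```latex
\begin{aligned}
\min_{\pi} \quad & \sum_{k=0}^{\infty} \ell(x_k)
  && \text{(reach the goal region and stay there)} \\
\text{s.t.} \quad & h(x_k) \le 0 \quad \forall k \ge 0
  && \text{(never enter an obstacle)} \\
& x_{k+1} = f\bigl(x_k, \pi(x_k)\bigr), \quad x_0 \text{ given.}
\end{aligned}
```

In this sketch the objective handles stabilization (roughly speaking, a finite total cost forces ℓ(x_k) toward zero, so the state must approach the goal region), while the constraint alone handles avoidance, so no reward weight has to trade one goal against the other.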

Then, for the second step, they reformulate that constrained optimization problem into a mathematical representation known as the epigraph form and solve it using a deep reinforcement learning algorithm. The epigraph form lets them bypass the difficulties that other methods face when using reinforcement learning.
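The epigraph form rests on a standard optimization identity that is not specific to this paper: minimizing f(x) subject to g(x) <= 0 gives the same optimal value as finding the smallest number z for which the minimum over x of max(f(x) - z, g(x)) is at most zero. The Python sketch below checks this on a one-dimensional toy problem. The functions `f` and `g`, the grid, and the bisection over z are our own illustrative assumptions; in the MIT approach a deep reinforcement learning algorithm over control policies takes the place of this brute-force inner search, so treat this only as an illustration of the identity.

```python
# Toy, brute-force check of the epigraph identity:
#   min_x f(x) s.t. g(x) <= 0   ==   min z s.t. min_x max(f(x) - z, g(x)) <= 0
# f, g, the grid and the bisection bounds are illustrative assumptions.


def f(x: float) -> float:
    """Objective: squared distance to x = 3 (stand-in for the stabilize cost)."""
    return (x - 3.0) ** 2


def g(x: float) -> float:
    """Constraint: feasible when g(x) <= 0, i.e. x <= 1 (stand-in for avoidance)."""
    return x - 1.0


# Discretize x on [-5, 5] with step 0.001 so every minimization is a plain min().
XS = [i / 1000.0 for i in range(-5000, 5001)]


def direct_constrained_min() -> float:
    """Solve the constrained problem directly by filtering feasible grid points."""
    return min(f(x) for x in XS if g(x) <= 0.0)


def epigraph_value(z: float) -> float:
    """V(z) = min_x max(f(x) - z, g(x)); V(z) <= 0 iff some feasible x has f(x) <= z."""
    return min(max(f(x) - z, g(x)) for x in XS)


def epigraph_min(lo: float = 0.0, hi: float = 100.0, iters: int = 50) -> float:
    """Bisect for the smallest z with V(z) <= 0 (V is nonincreasing in z)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if epigraph_value(mid) <= 0.0:
            hi = mid  # mid is achievable: the optimum is at most mid
        else:
            lo = mid  # mid is too ambitious: the optimum is above mid
    return hi


print(direct_constrained_min())  # 4.0 (attained at x = 1)
print(round(epigraph_min(), 6))  # 4.0
```

The useful property is that max(f(x) - z, g(x)) folds the objective and the constraint into a single quantity, so the inner problem needs no hand-tuned weight between them.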

“But deep reinforcement learning isn’t designed to solve the epigraph form of an optimization problem, so we couldn’t just plug it into our problem. We had to derive the mathematical expressions that work for our system. Once we had those new derivations, we combined them with some existing engineering tricks used by other methods,” So says.

No points for second place

To test their approach, they designed a number of control experiments with different initial conditions. In some simulations, for instance, the autonomous agent must reach and stay inside a goal region while making drastic maneuvers to avoid obstacles that are on a collision course with it.

When compared with several baselines, their approach was the only one that could stabilize all trajectories while maintaining safety. To push their method even further, they used it to fly a simulated jet aircraft in a scenario one might see in a “Top Gun” movie. The jet had to stabilize to a target near the ground while maintaining a very low altitude and staying within a narrow flight corridor.

This simulated jet model was open-sourced in 2018 and had been designed by flight control experts as a testing challenge. Could researchers create a scenario that their controller could not fly? But the model was so complicated that it was difficult to work with, and it still could not handle complex scenarios, Fan says.

The MIT researchers’ controller was able to prevent the jet from crashing or stalling while stabilizing to the goal, far better than any of the baselines.

In the future, this technique could be a starting point for designing controllers for highly dynamic robots that must meet safety and stability requirements, such as autonomous delivery drones. Or it could be implemented as part of a larger system. Perhaps the algorithm is only activated when a car skids on a snowy road, to help the driver safely navigate back to a stable trajectory.

Their approach really shines when it comes to navigating extreme scenarios that a human would be unable to handle, So says.

“We believe that a goal we should strive for as a field is to give reinforcement learning the safety and stability guarantees that we will need to provide us with assurance when we deploy these controllers on mission-critical systems. We think this is a promising first step toward achieving that goal,” he says.

Moving forward, the researchers want to enhance their technique so it is better able to take uncertainty into account when solving the optimization. They also want to investigate how well the algorithm works when deployed on hardware, since there will be mismatches between the dynamics of the model and those in the real world.

“Professor Fan’s team has improved reinforcement learning performance for dynamical systems where safety matters. Instead of just hitting a goal, they create controllers that ensure the system can reach its target safely and stay there indefinitely,” says Stanley Bak, an assistant professor in the Department of Computer Science at Stony Brook University, who was not involved with this research. “Their improved formulation makes it possible to handle a 17-state nonlinear jet aircraft model, designed in part by researchers from the Air Force Research Lab (AFRL), that incorporates nonlinear differential equations with lift and drag tables.”

More information: Oswin So et al, Solving Stabilize-Avoid Optimal Control via Epigraph Form and Deep Reinforcement Learning, arXiv (2023). DOI: 10.48550/arxiv.2305.14154