Deep neural networks (DNNs) have proved to be highly promising tools for analyzing large amounts of data, which could accelerate research in various scientific fields. For instance, over the past few years, some computer scientists have trained models based on these networks to analyze chemical data and identify promising chemicals for various applications.

Researchers at the Massachusetts Institute of Technology (MIT) recently carried out a study investigating the neural scaling behavior of large DNN-based models trained to generate advantageous chemical compositions and learn interatomic potentials. Their paper, published in Nature Machine Intelligence, shows how quickly the performance of these models can improve as their size and the pool of data they are trained on are increased.

“The paper ‘Scaling Laws for Neural Language Models’ by Kaplan et al. was the main inspiration for our research,” Nathan Frey, one of the researchers who carried out the study, told Tech Xplore. “That paper showed that increasing the size of a neural network and the amount of data it’s trained on leads to surprising improvements in model training. We wanted to see how ‘neural scaling’ applies to models trained on chemistry data, for applications like drug discovery.”

Frey and his colleagues began working on this research project back in 2021, before the release of the well-known AI-based platforms ChatGPT and DALL-E 2. At the time, the future upscaling of DNNs was seen as particularly relevant to some fields, and studies exploring their scaling in the physical or life sciences were scarce.

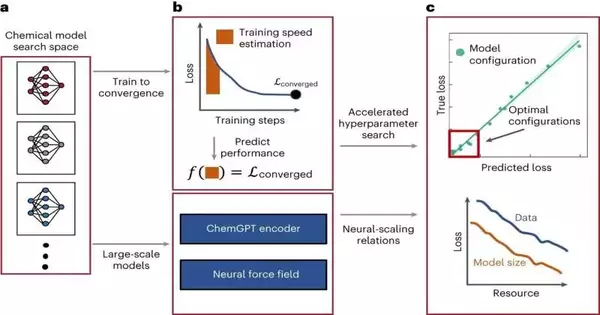

The researchers’ study explores the neural scaling of two distinct types of models for chemical data analysis: a large language model (LLM) and a graph neural network (GNN)-based model. These two types of models can be used to generate chemical compositions and to learn the potentials between different atoms in chemical substances, respectively.

“We investigated two very different types of models: an autoregressive, GPT-style language model we built called ‘ChemGPT’ and a family of GNNs,” Frey explained. “ChemGPT is trained in the same way ChatGPT is, but in our case, ChemGPT is trying to predict the next token in a string that represents a molecule. The GNNs are trained to predict the energy and forces of a molecule.”
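To make the idea of predicting the next token in a molecule string concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the ChemGPT pipeline: the article does not say which string format or tokenizer the team used, so the example assumes a SMILES-style string and treats each character as one token. The function name `next_token_pairs` is hypothetical.

```python
from __future__ import annotations


def next_token_pairs(molecule: str) -> list[tuple[str, str]]:
    """Return (context, next_token) pairs for autoregressive training.

    Assumption: one character equals one token. Real chemical language
    models typically use a richer tokenizer.
    """
    tokens = list(molecule)
    return [("".join(tokens[:i]), tokens[i]) for i in range(1, len(tokens))]


# "CCO" is ethanol written as a SMILES-style string (illustrative input).
pairs = next_token_pairs("CCO")
for context, target in pairs:
    print(f"given {context!r}, predict {target!r}")
```

Each pair is one training example: the model sees the context and is scored on how well it predicts the token that follows.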

To explore the scalability of the ChemGPT model and of GNNs, Frey and his colleagues examined the effects of a model’s size and the size of the dataset used to train it on various relevant metrics. This allowed them to derive a rate at which these models improve as they become larger and are fed more data.
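A rate of improvement of this kind is commonly summarized as a power law, loss ≈ a · N^(−α), where N is the model or dataset size. The sketch below shows one way such an exponent could be estimated, using an ordinary least-squares fit in log-log space. It is an assumption-laden illustration rather than the authors' procedure: the function name `fit_power_law` is hypothetical, and the numbers are synthetic values generated from a known power law, not results from the paper.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss = a * size**(-alpha) by linear regression on log-log data."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)


# Synthetic data only: generated from a = 5.0, alpha = 0.2 for illustration.
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * s ** -0.2 for s in sizes]

a, alpha = fit_power_law(sizes, losses)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")
```

A larger α means the loss falls faster as the model or dataset grows, which is the sense in which one approach can scale more efficiently than another.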

“We do find ‘neural scaling behavior’ for chemical models, reminiscent of the scaling behavior seen in LLM and vision models for various applications,” Frey said.

“We also showed that we are not near any kind of fundamental limit for scaling chemical models, so there is still a lot of room to investigate further with more compute and bigger datasets. Incorporating physics into GNNs via a property called ‘equivariance’ has a dramatic effect on improving scaling efficiency, which is an exciting result because it’s actually quite difficult to find algorithms that change scaling behavior.”
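Equivariance, in this context, means that rotating a molecule rotates the predicted forces in exactly the same way. The short Python sketch below checks that property numerically for a toy system. It is not a GNN and not the researchers' model: the spring-like energy, the atom coordinates, and the function names `forces` and `rotate_z` are all assumptions chosen for illustration.

```python
import math


def forces(positions):
    """Forces from a toy pairwise energy E = sum over pairs of 0.5 * (d - 1)^2."""
    result = []
    for i, ri in enumerate(positions):
        f = [0.0, 0.0, 0.0]
        for j, rj in enumerate(positions):
            if i == j:
                continue
            diff = [p - q for p, q in zip(ri, rj)]
            d = math.sqrt(sum(c * c for c in diff))
            for k in range(3):
                # Force is the negative gradient of the energy.
                f[k] -= (d - 1.0) * diff[k] / d
        result.append(f)
    return result


def rotate_z(vectors, angle):
    """Rotate each 3D vector about the z axis by the given angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c * x - s * y, s * x + c * y, z] for x, y, z in vectors]


# Made-up coordinates for three atoms.
atoms = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [0.0, 0.8, 0.3]]
angle = 0.7

rotate_then_predict = forces(rotate_z(atoms, angle))
predict_then_rotate = rotate_z(forces(atoms), angle)

max_gap = max(
    abs(p - q)
    for u, v in zip(rotate_then_predict, predict_then_rotate)
    for p, q in zip(u, v)
)
print(f"largest mismatch: {max_gap:.2e}")
```

The two results agree up to floating-point error. An equivariant network guarantees this agreement by construction, so it does not have to learn the symmetry from data.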

Overall, the findings gathered by this team of researchers shed new light on the potential of two types of AI models for conducting chemistry research, showing the extent to which their performance can improve as they are scaled up. This work could soon inform additional studies exploring the promise and margin for improvement of these models, as well as that of other DNN-based techniques for specific scientific applications.

“Since our work first appeared, there has already been exciting follow-up work probing the capabilities and limitations of scaling for chemical models,” Frey added. “More recently, I have also been working on generative models for protein design and thinking about how scaling impacts models for biological data.”

More information: Nathan C. Frey et al, Neural scaling of deep chemical models, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00740-3