Virtual reality (VR) and augmented reality (AR) head-mounted displays allow users to experience digital content in more immersive and engaging ways. To keep users as immersed in the content as possible, computer scientists have been trying to develop navigation and text selection interfaces that do not require the use of their hands.

Instead of pressing buttons on a handheld controller, these interfaces would allow users to select text or issue commands simply by moving their heads or blinking their eyes. Despite the promise of these approaches, most of today’s head-mounted displays still rely heavily on handheld controllers or hand and finger gestures.

Researchers at Xi’an Jiaotong-Liverpool University and Birmingham City University have recently carried out a study investigating different hands-free text selection approaches for VR and AR headsets. Their findings, pre-published in a paper on arXiv, highlight the advantages of some of these approaches, particularly those that enable interaction through eye blinks.

“My team has been working on improving text entry for VR/AR for the past few years,” Hai-Ning Liang, one of the researchers who carried out the study, told TechXplore. “Text selection is an important element in the ecosystem of text entry and editing.”

The new work by Liang and his colleagues builds on some of their previous research, which focused on hands-free text entry techniques for VR. In those earlier studies, the team found that hands-free techniques could improve user interactions with VR systems, making text entry more intuitive.

“The primary objective of our work is to explore what kinds of mechanisms are suitable for hands-free text selection in VR,” Liang explained. “In this new study, we explored the potential of hands-free text selection approaches in a controlled lab experiment with 24 participants, using a within-subjects experimental design (i.e., where the participants experienced all test conditions).”

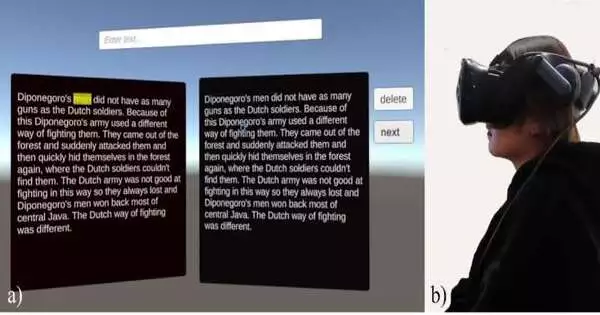

In their experiments, Liang and his colleagues asked participants to test different text selection techniques while completing a specific task. This task mimicked what users might encounter in real-world settings while using VR and was divided into three conditions that varied in the length of the text presented to users (i.e., short: a single word; medium: 2-3 lines of text; and long: 6-8 lines of text).

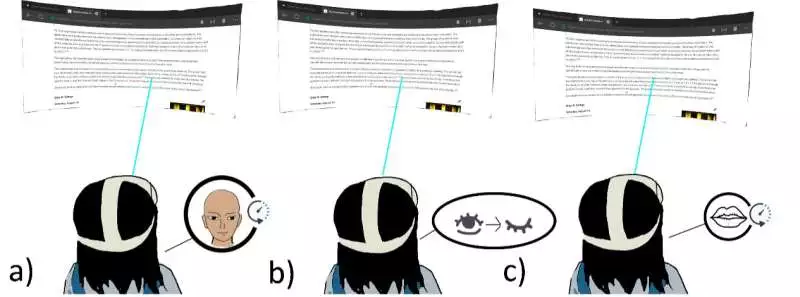

The three hands-free text selection techniques explored in this research were grouped by three selection mechanisms: (a) Dwell, (b) Eye blinks, and (c) Voice. Credit: Meng, Xu, and Liang.

The participants were asked to use the different hands-free text selection techniques in a VR reading environment that the team had created specifically for the experiment. After they completed these tests, the participants were asked about their experiences.

“Text selection, like many other interactions in VR, requires a pointing mechanism to identify the objects to be selected before interacting with them, and then another mechanism to indicate the selection,” Liang said. “In this study, we chose head-based pointing as our pointing mechanism, which means the cursor follows the user’s head movement.”
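As a rough illustration of head-based pointing, the sketch below maps a head orientation to a cursor position on a flat text panel in front of the user. It is not the researchers' implementation: the function name `head_cursor`, the yaw/pitch convention, and the 2-metre panel distance are all assumptions made for this example.

```python
import math
from typing import Optional, Tuple


def head_cursor(
    yaw_deg: float,
    pitch_deg: float,
    panel_distance_m: float = 2.0,  # assumed distance to the text panel
) -> Optional[Tuple[float, float]]:
    """Return the cursor (x, y) in metres on a panel facing the user.

    Returns None when the head is not facing the panel.
    """
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    # Head forward direction: +x is right, +y is up, +z is straight ahead.
    dx = math.sin(yaw) * math.cos(pitch)
    dy = math.sin(pitch)
    dz = math.cos(yaw) * math.cos(pitch)
    if dz <= 1e-9:
        return None  # ray is parallel to the panel or points away from it
    # Scale the ray so that it reaches the plane z = panel_distance_m.
    t = panel_distance_m / dz
    return (t * dx, t * dy)
```

Looking straight ahead puts the cursor at the panel centre, and turning the head moves it proportionally to the tangent of the angle. A separate mechanism still has to confirm the selection.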

Liang and his colleagues chose to assess the potential of three different text selection mechanisms, referred to as “Dwell,” “Eye blinks,” and “Voice.” Dwell requires users to hold the pointer over the text they want to select for a set period of time (e.g., 1 second).
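The hover-and-wait mechanism described above can be sketched as a small timer that restarts whenever the pointer moves to a different target. This is a minimal sketch, not the study's code: the class name `DwellSelector`, its `update` method, and the use of string target identifiers are hypothetical, and the 1-second threshold is simply the example value quoted in the article.

```python
from dataclasses import dataclass
from typing import Optional

DWELL_SECONDS = 1.0  # example threshold mentioned in the article


@dataclass
class DwellSelector:
    dwell_seconds: float = DWELL_SECONDS
    _target: Optional[str] = None  # target currently under the pointer
    _since: float = 0.0            # time the pointer arrived on it
    _fired: bool = False           # whether this hover already selected

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Feed the target under the pointer at time `now` (seconds).

        Returns the target once the pointer has stayed on it long enough,
        otherwise None.
        """
        if target != self._target:
            # Pointer moved to another target (or off all targets): restart.
            self._target, self._since, self._fired = target, now, False
            return None
        if (
            target is not None
            and not self._fired
            and now - self._since >= self.dwell_seconds
        ):
            self._fired = True  # select only once per continuous hover
            return target
        return None
```

In a render loop, `update` would be called every frame with whatever text element the head-driven cursor currently covers.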

When using eye blinks for selection, users were asked to blink deliberately to select a particular piece of text. The system recognizes these deliberate blinks because they typically last longer than natural ones (around 400 ms rather than 100-200 ms).
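One simple way to separate deliberate from natural eye closures is a duration threshold, sketched below. The article gives only the typical durations (about 400 ms versus 100-200 ms); the 300 ms cutoff, the function name `voluntary_blink_ends`, and the `(timestamp_ms, eye_closed)` sample format are assumptions for illustration, not details from the paper.

```python
from typing import Iterable, List, Tuple

# Assumed cutoff between natural (~100-200 ms) and deliberate (~400 ms)
# eye closures; the study's actual parameter is not given in the article.
VOLUNTARY_MIN_MS = 300.0


def voluntary_blink_ends(
    samples: Iterable[Tuple[float, bool]],
    min_ms: float = VOLUNTARY_MIN_MS,
) -> List[float]:
    """Return the timestamps at which a deliberate eye closure ended.

    `samples` is a time-ordered sequence of (timestamp_ms, eye_closed).
    """
    ends: List[float] = []
    closed_at = None  # timestamp at which the current closure started
    for t_ms, closed in samples:
        if closed and closed_at is None:
            closed_at = t_ms
        elif not closed and closed_at is not None:
            if t_ms - closed_at >= min_ms:
                ends.append(t_ms)  # long enough to count as deliberate
            closed_at = None
    return ends
```

Each returned timestamp would act as the "confirm" signal for whatever text the cursor is pointing at.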

Finally, the voice approach required users to produce a sound louder than 60 dB. In their tests, the researchers asked participants to make a humming sound whenever they wished to select a piece of text.
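A loudness trigger of this kind can be sketched as a level check on short audio frames. Only the 60 dB threshold comes from the article; the function names and the `calibration_offset_db` parameter are hypothetical, and the offset that converts a microphone's digital level into a sound pressure level would have to be measured for the actual hardware.

```python
import math
from typing import Sequence

VOICE_THRESHOLD_DB = 60.0  # threshold quoted in the article


def frame_level_db(frame: Sequence[float], calibration_offset_db: float) -> float:
    """Estimate the level of one audio frame in dB.

    `frame` holds samples in [-1.0, 1.0]. `calibration_offset_db` is an
    assumed, device-specific constant mapping digital level to dB.
    """
    if not frame:
        return float("-inf")
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    if rms == 0.0:
        return float("-inf")  # digital silence
    return 20.0 * math.log10(rms) + calibration_offset_db


def is_voice_trigger(
    frame: Sequence[float],
    calibration_offset_db: float,
    threshold_db: float = VOICE_THRESHOLD_DB,
) -> bool:
    """Return True when the frame is louder than the selection threshold."""
    return frame_level_db(frame, calibration_offset_db) > threshold_db
```

A real system would likely also require the level to stay above the threshold for several consecutive frames so that a brief noise does not trigger a selection.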

“These selection mechanisms, including their parameters, were chosen based on findings from the literature and a series of pilot tests we carried out,” Liang explained. “Again, the findings gathered in our experiment confirmed that hands-free approaches could be suitable for text selection in VR. In addition, we showed that eye blinks are an efficient and useful selection mechanism for hands-free interaction.”

The new work by Liang and his colleagues highlights the considerable potential of hands-free text selection techniques for making VR systems more intuitive and convenient to use. In the future, their findings could inspire other research teams to develop and evaluate blink-based techniques for text selection and other types of interaction.

“Our plan for future research in this area is to focus on making text selection even more efficient and usable, and on integrating it into the ecosystem for content editing and document creation in VR/AR,” Liang added. “We will also be designing text selection techniques that can be used by users with various impairments and exploring other approaches, including eye gaze for cursor movement instead of head movement.”

More information: Xuanru Meng, Wenge Xu, Hai-Ning Liang, An exploration of hands-free text selection for virtual reality head-mounted displays. arXiv:2209.06825v1 [cs.HC], arxiv.org/abs/2209.06825

Xueshi Lu et al, Exploration of Hands-free Text Entry Techniques For Virtual Reality, 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (2020). DOI: 10.1109/ISMAR50242.2020.00061

Xueshi Lu et al, iText: Hands-free Text Entry on an Imaginary Keyboard for Augmented Reality Systems, The 34th Annual ACM Symposium on User Interface Software and Technology (2021). DOI: 10.1145/3472749.3474788