The human auditory pathway is a highly sophisticated biological system that comprises both physical structures and brain regions specialized in the perception and processing of sounds. The sounds that humans pick up through their ears are processed in several brain regions, including the cochlear and superior olivary nuclei, the lateral lemniscus, the inferior colliculus, and the auditory cortex.

Over the past few decades, computer scientists have developed increasingly advanced computational models that can process sounds and speech, artificially replicating the function of the human auditory pathway. Some of these models have achieved remarkable results and are now widely used around the world, for example allowing voice assistants (e.g., Alexa and Siri) to understand users' requests.

Researchers at the University of California, San Francisco, recently set out to compare these models with the human auditory pathway. Their paper, published in Nature Neuroscience, revealed striking similarities between how deep neural networks and the biological auditory pathway process speech.

“AI speech models have become very good in recent years because of deep learning in computers,” Edward F. Chang, one of the authors of the paper, told Medical Xpress. “We were interested to see if what the models learn is similar to how the human brain processes speech.”

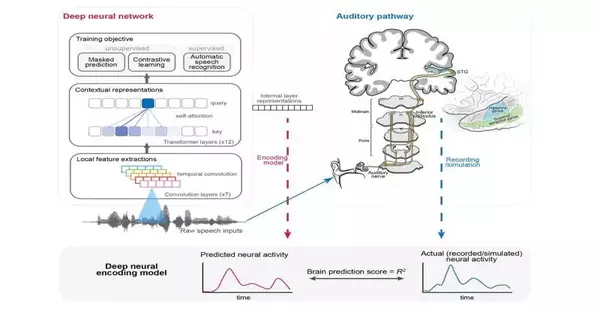

To compare deep neural networks with the human auditory pathway, the researchers first looked at the speech representations produced by the models. These are essentially the ways in which the models encode speech in their different layers.

Chang and his colleagues then compared these representations with the activity in the different parts of the brain associated with the processing of sounds. Remarkably, they found a correlation between the two, revealing possible similarities between artificial and biological speech processing.

“We used several commercial deep learning models of speech and looked at how the artificial neurons in those models compared to real neurons in the brain,” Chang explained. “We compared how speech signals are processed across the different layers, or processing stations, in the neural network and directly compared those to processing across different brain areas.”
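One common way to run this kind of layer-by-layer comparison is an encoding model: fit a regression from each layer's activations to a recorded neural response, then check which layer predicts held-out data best. The sketch below is only an illustration of that idea, not the authors' pipeline. The choice of ridge regression, the synthetic data, and every name in it (`ridge_fit`, `encoding_score`, `layer_0` to `layer_3`) are assumptions made for the example.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge regression weights: (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def encoding_score(features, response, alpha=1.0, train_frac=0.8):
    """Fit on the first part of the data; return Pearson r on the held-out rest."""
    n_train = int(len(response) * train_frac)
    x_mean = features[:n_train].mean(axis=0)
    y_mean = response[:n_train].mean()
    w = ridge_fit(features[:n_train] - x_mean, response[:n_train] - y_mean, alpha)
    pred = (features[n_train:] - x_mean) @ w + y_mean
    return float(np.corrcoef(pred, response[n_train:])[0, 1])

rng = np.random.default_rng(0)
n_samples, n_units = 500, 20

# Hypothetical stand-ins for per-layer model activations (time samples x units).
layers = {f"layer_{i}": rng.standard_normal((n_samples, n_units)) for i in range(4)}

# Synthetic "recording site" that is driven by layer_2 plus noise (an assumption
# built into this toy data, not a finding from the study).
true_w = rng.standard_normal(n_units)
response = layers["layer_2"] @ true_w + 0.5 * rng.standard_normal(n_samples)

# Score every layer against the same response and pick the best predictor.
scores = {name: encoding_score(X, response) for name, X in layers.items()}
best_layer = max(scores, key=scores.get)
print(best_layer, scores)
```

With real data, `layers` would hold activations extracted from a speech model for the same audio the listener heard, and `response` would be the activity recorded at one site; repeating the fit across sites gives a map of which layer best matches which brain area.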

Interestingly, the researchers also found that models trained to process speech in either English or Mandarin could predict the responses in the brains of native speakers of the corresponding language. This suggests that deep learning techniques process speech similarly to the human brain, also encoding language-specific information.

“AI models that capture context and learn the important statistical properties of speech sounds do well at predicting brain responses,” Chang said. “They are better than traditional linguistic models, in fact. The implication is that there is tremendous potential for AI to help understand the human brain in the near future.”

The recent work by Chang and his colleagues improves the general understanding of deep neural networks designed to decode human speech, showing that they might be more similar to the biological auditory system than researchers had anticipated. In the future, it could guide the development of further computational techniques designed to artificially reproduce the neural underpinnings of audition.

“We are now trying to understand how AI models can be updated to better understand the brain. Right now, we are just getting started, and there is so much to learn,” said Chang.

More information: Yuanning Li et al. Dissecting neural computations in the human auditory pathway using deep neural networks for speech, Nature Neuroscience (2023). DOI: 10.1038/s41593-023-01468-4