Graph neural networks (GNNs) are promising machine learning models designed to analyze data that can be represented as graphs. These models have achieved very promising results in a variety of real-world applications, including drug discovery, social network design, and recommender systems.

As graph-structured data can be highly complex, graph-based machine learning models should be designed carefully and rigorously. In addition, these models should ideally be run on efficient hardware that supports their computational demands without consuming too much power.

Researchers at the University of Hong Kong, the Chinese Academy of Sciences, InnoHK Centers, and other institutes worldwide have recently developed a software-hardware system that combines a GNN architecture with a resistive memory, a memory solution that stores data in the form of a resistive state. Their paper, published in Nature Machine Intelligence, demonstrates the potential of new hardware solutions based on resistive memories for efficiently running graph machine learning techniques.

“The efficiency of digital computers is limited by the von Neumann bottleneck and the slowdown of Moore’s law,” Shaocong Wang, one of the researchers who carried out the study, told Tech Xplore. “The former is due to physically separated memory and processing units, which incur large energy and time overheads because of frequent and massive data shuttling between these units when running graph learning. The latter is because transistor scaling is approaching its physical limit in the era of the 3nm technology node.”

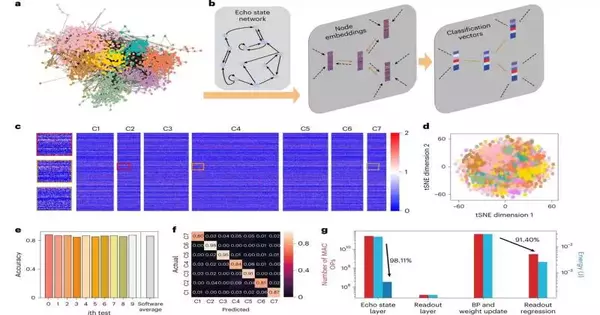

The echo state layer for graph embedding. The hidden state of a node in the graph is updated with the projection of the node itself and the previous hidden states of the neighboring nodes, both processed with the fixed and random echo state layer implemented with a random memristive array.

Resistive memories are essentially tunable resistors, which are devices that resist the passage of electrical current. These resistor-based memory solutions have proved very promising for running artificial neural networks (ANNs). This is because individual resistive memory cells can both store data and perform computations, addressing the limitations of the so-called von Neumann bottleneck.

“Resistive memories are also highly scalable, retaining Moore’s law,” Wang said. “But ordinary resistive memories are still not good enough for graph learning, because graph learning frequently changes the resistance of resistive memory, which leads to a large amount of energy consumption compared to the conventional digital computer using SRAM and DRAM. What’s more, the resistance change is inaccurate, which hinders precise gradient updating and weight writing. These shortcomings may defeat the advantages of resistive memory for efficient graph learning.”

The key objective of the recent work by Wang and his colleagues was to overcome the limitations of conventional resistive memory solutions. To do this, they designed a resistive memory-based graph learning accelerator that eliminates the need for resistive memory programming while retaining high efficiency.

They specifically used echo state networks, a reservoir computing architecture based on a recurrent neural network with a sparsely connected hidden layer. Most of these networks’ parameters (i.e., weights) can be fixed to random values. This means that resistive memory can be used immediately, without the need for programming.

“In our study, we experimentally verified this concept for graph learning, which is very important and, in fact, quite general,” Wang said. “Actually, images and sequential data, such as audio and text, can also be represented as graphs. Even transformers, the most state-of-the-art and dominant deep learning models, can be represented as graph neural networks.”

The echo state graph neural networks developed by Wang and his colleagues comprise two distinct components, known as the echo state layer and the readout layer. The weights of the echo state layer are fixed and random, so they do not need to be repeatedly trained or updated over time.

“The echo state layer functions as a graph convolutional layer that updates the hidden state of all nodes in the graph recursively,” Wang said. “Each node’s hidden state is updated based on its own feature and the hidden states of its neighboring nodes in the previous time step, both extracted with the echo state weights. This process is repeated multiple times, and then the hidden states of all nodes are summed into a vector as the representation of the entire graph, which is classified using the readout layer.”
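The update Wang describes can be sketched in a few lines of plain Python. This is only an illustrative toy, not the authors' implementation: the function names, the `tanh` nonlinearity, the weight scale, the number of steps, and the tiny three-node graph are all assumptions made for the example. The weight matrices are drawn once at random and never trained, standing in for the fixed random resistor arrays.

```python
import math
import random

def random_matrix(rows, cols, scale, rng):
    """Fixed random weights, drawn once and never trained (hypothetical helper)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def embed_graph(features, neighbors, w_in, w_rec, steps=3):
    """Sum the node hidden states after `steps` recursive updates.

    `steps=3` and the tanh activation are assumptions for illustration.
    """
    hidden_size = len(w_in)
    hidden = [[0.0] * hidden_size for _ in features]
    for _ in range(steps):
        new_hidden = []
        for node, feature in enumerate(features):
            # Aggregate the neighbours' hidden states from the previous step.
            agg = [0.0] * hidden_size
            for nb in neighbors[node]:
                agg = [a + h for a, h in zip(agg, hidden[nb])]
            # Project the node's own feature and the aggregated neighbour state
            # with the fixed random weights, then apply the nonlinearity.
            own = matvec(w_in, feature)
            nbr = matvec(w_rec, agg)
            new_hidden.append([math.tanh(a + b) for a, b in zip(own, nbr)])
        hidden = new_hidden
    # Sum pooling: one vector that represents the whole graph.
    return [sum(h[i] for h in hidden) for i in range(hidden_size)]

rng = random.Random(0)
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # one feature vector per node
neighbors = [[1, 2], [0], [0]]                     # adjacency list
w_in = random_matrix(4, 2, 0.5, rng)               # input projection
w_rec = random_matrix(4, 4, 0.5, rng)              # recursive projection
graph_vector = embed_graph(features, neighbors, w_in, w_rec)
```

In a full pipeline, `graph_vector` would be passed to a small trainable readout layer, which is the only part of the model that needs training.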

The software-hardware design proposed by Wang and his colleagues has two notable advantages. Firstly, the echo state neural network it is based on requires significantly less training. Secondly, this neural network is efficiently implemented on a random and fixed resistive memory that does not need to be programmed.

“Our study’s most notable achievement is the integration of random resistive memory and echo state graph neural networks (ESGNN), which retains the energy-area efficiency boost of in-memory computing while also utilizing the intrinsic stochasticity of dielectric breakdown to provide low-cost and nanoscale hardware randomization of ESGNN,” Wang said. “Specifically, we propose a hardware-software co-optimization scheme for graph learning. Such a codesign may inspire other downstream applications of resistive memory.”

On the software side, Wang and his colleagues introduced an ESGNN comprising a large number of neurons with random and recurrent interconnections. This neural network employs iterative random projections to embed nodes and graph-based data. These projections generate trajectories at the edge of chaos, enabling efficient feature extraction while eliminating the arduous training associated with the development of conventional graph neural networks.

“On the hardware side, we leverage the intrinsic stochasticity of dielectric breakdown in resistive switching to physically implement the random projections in ESGNN,” Wang said. “By biasing all the resistive cells to the median of their breakdown voltages, some cells will experience dielectric breakdown if their breakdown voltages are lower than the applied voltage, forming random resistor arrays to represent the input and recursive matrix of the ESGNN. Compared with pseudo-random number generation using digital systems, the source of randomness here is the stochastic redox reactions and ion migrations that arise from the compositional inhomogeneity of resistive memory cells, offering low-cost and highly scalable random resistor arrays for in-memory computing.”
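The thresholding idea in this quote can be mimicked in software as a rough sketch. Everything numeric here is hypothetical: the Gaussian spread of breakdown voltages, the 3.0 V mean, the 0.3 V deviation, and the function name are assumptions chosen for illustration, and a software random number generator stands in for the physical randomness of real cells.

```python
import random
import statistics

def form_random_array(rows, cols, rng, mean_v=3.0, spread_v=0.3):
    """Simulate biasing every cell at the median breakdown voltage.

    Cells whose (simulated) breakdown voltage lies below the applied
    voltage break down and become conductive (1); the rest stay
    insulating (0). The voltage statistics are hypothetical.
    """
    breakdown = [[rng.gauss(mean_v, spread_v) for _ in range(cols)]
                 for _ in range(rows)]
    # Apply the median of all breakdown voltages to every cell.
    applied = statistics.median(v for row in breakdown for v in row)
    pattern = [[1 if v < applied else 0 for v in row] for row in breakdown]
    return pattern, applied

pattern, applied = form_random_array(8, 8, random.Random(1))
broken = sum(map(sum, pattern))  # number of cells that broke down
```

Because the applied voltage sits at the median, about half of the cells break down, which yields a random but fixed conductance pattern without any per-cell programming step.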

In initial evaluations, the system created by Wang and his colleagues achieved promising results, running ESGNNs more efficiently than both digital and conventional resistive memory solutions. In the future, it could be applied to various real-world problems that require the analysis of data that can be represented as graphs.

Wang and his colleagues think that their software-hardware system could be applied to a wide range of machine learning problems, so they now plan to continue exploring its potential. For instance, they wish to assess its performance in sequence analysis tasks, where their echo state network implemented on memristive arrays could remove the need for programming while ensuring low power consumption and high accuracy.

“The prototype demonstrated in this work was tested on relatively small datasets, and we aim to push its limits with more complex tasks,” Wang added. “For instance, the ESN can serve as a universal graph encoder for feature extraction, augmented with memory to perform few-shot learning, making it useful for edge applications. We look forward to exploring these possibilities and expanding the capabilities of the ESN and memristive arrays in the future.”

More information: Shaocong Wang et al, Echo state graph neural networks with analogue random resistive memory arrays, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00609-5