Recent advances in robotics and artificial intelligence (AI) have opened new possibilities for teleoperation, the remote control of a robot to complete tasks in a distant location. This could, for example, allow users to visit museums from afar, carry out maintenance or technical tasks in spaces that are difficult to access, or attend events remotely in more interactive ways.

Most existing teleoperation systems are designed to be deployed in specific settings and with a particular robot. This makes them hard to use in other real-world settings, which severely limits their potential.

Researchers at NVIDIA and UC San Diego recently created AnyTeleop, a computer vision-based teleoperation system that could be applied to a wider range of scenarios. AnyTeleop, introduced in a paper pre-published on arXiv, enables the remote operation of a variety of robotic arms and hands to perform different manual tasks.

“A core goal at NVIDIA is researching how humans can teach robots to perform tasks,” Dieter Fox, senior director of robotics research at NVIDIA, head of the NVIDIA Robotics Research Lab, professor at the University of Washington Paul G. Allen School of Computer Science & Engineering, and head of the UW Robotics and State Estimation Lab, told Tech Xplore.

“Previous work has focused on how a human can teleoperate, or guide, the robot, but this approach faces two obstacles. First, training a state-of-the-art model requires many demonstrations. Second, setups usually involve costly apparatus or sensory hardware and are designed only for a particular robot or deployment environment,” said Fox.

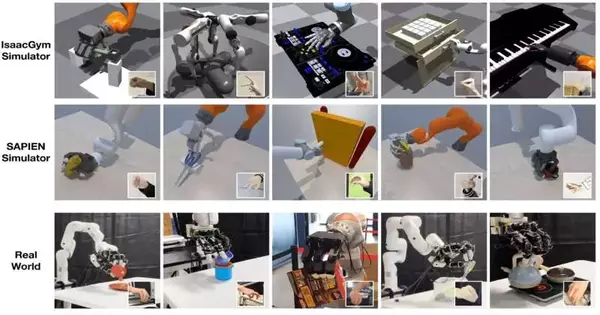

The key objective of the new work by Fox and his colleagues was to create a teleoperation system that is low-cost, easy to deploy, and generalizes well across different tasks, environments, and robotic systems. To train their system, the researchers teleoperated both virtual robots in simulated environments and real robots in a physical environment, as this reduced the need to purchase and assemble many robots.

“AnyTeleop is a vision-based teleoperation system that allows humans to use their hands to control dexterous robotic hand-arm systems,” Fox explained. “The system tracks human hand poses from single or multiple cameras and then retargets them to control the fingers of a multi-fingered robot hand. The wrist point is used to control the motion of the robot arm with a CUDA-powered motion planner.”
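To make the idea of "retargeting" a tracked human hand onto robot fingers more concrete, below is a toy Python sketch. It is not AnyTeleop's code, and the paper's actual retargeting method may differ. It assumes a single planar robot finger with two links; the link lengths `l1` and `l2`, the `scale` factor, and the helper names `retarget_fingertip` and `forward_kinematics` are all hypothetical. Given a human fingertip position expressed in the finger's base frame, it solves closed-form inverse kinematics for the two joint angles.

```python
import math


def forward_kinematics(q1, q2, l1, l2):
    """Fingertip (x, y) of a toy planar two-link finger with joint angles q1, q2."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def retarget_fingertip(human_tip, l1=0.05, l2=0.04, scale=1.0):
    """Hypothetical retargeting step: scale a human fingertip position
    (finger-base frame, meters) and solve analytic inverse kinematics
    for a two-link robot finger. Returns joint angles (q1, q2) in radians."""
    x, y = human_tip[0] * scale, human_tip[1] * scale
    r = math.hypot(x, y)

    # Project the target onto the annulus the toy finger can actually reach.
    r_min, r_max = abs(l1 - l2), l1 + l2
    r_target = min(max(r, r_min), r_max)
    if r == 0.0:
        x, y = r_target, 0.0  # direction is undefined; pick the x-axis
    else:
        x, y = x * r_target / r, y * r_target / r

    # Law of cosines for the second joint; clamp to guard against rounding error.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    q2 = math.acos(max(-1.0, min(1.0, c2)))
    # First joint: angle to the target minus the offset introduced by the second link.
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2
```

For example, `retarget_fingertip((0.06, 0.03))` returns two joint angles whose forward kinematics land back on the target point. A target outside the finger's reach is first projected onto the workspace boundary, so the robot receives a feasible command instead of an error. A real multi-fingered hand has many more joints, which is why practical systems typically treat retargeting as an optimization over all of them at once.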

In contrast with most other teleoperation systems introduced in previous studies, AnyTeleop can be interfaced with different robot arms, robot hands, camera configurations, and simulated or real-world environments. It can also be used both when operators are nearby and when they are in distant locations.

The AnyTeleop platform can also help collect human demonstration data (i.e., data representing the movements and actions that humans perform while executing specific manual tasks). In turn, this data could be used to better train robots to complete various tasks on their own.

“The major breakthrough of AnyTeleop is its generalizable and easily deployable design,” Fox said. “One potential application is to deploy virtual environments and virtual robots in the cloud, allowing edge users with entry-level computers and cameras (such as an iPhone or PC) to teleoperate them. This could ultimately transform the data pipeline for researchers and industrial developers teaching robots new skills.”

In initial tests, AnyTeleop outperformed an existing teleoperation system designed for a specific robot, even when applied to that same robot. This highlights its value for improving teleoperation applications.

NVIDIA plans to release an open-source version of the AnyTeleop system soon, allowing researchers around the world to test it and integrate it with their robots. This promising new platform could both facilitate the collection of training data for robotic manipulators and support the wider adoption of teleoperation systems in the future.

“We now plan to use the collected data to explore further robot learning,” Fox added. “A major focus going forward will be how to bridge the domain gaps when transferring robot models from simulation to the real world.”

More information: Yuzhe Qin et al., AnyTeleop: A General Vision-Based Dexterous Robot Arm-Hand Teleoperation System, arXiv (2023). DOI: 10.48550/arxiv.2307.04577