Understanding which items are made of the same materials will help a robot work with objects in a kitchen, for example. With this knowledge, the robot would be able to use the same amount of force to pick up a small pat of butter from a dark corner of the counter or an entire stick from inside the brightly lit fridge.

Material selection, or identifying objects in a scene that are made of the same material, is a difficult problem for machines because a material’s appearance can change dramatically depending on the shape of the object or the lighting.

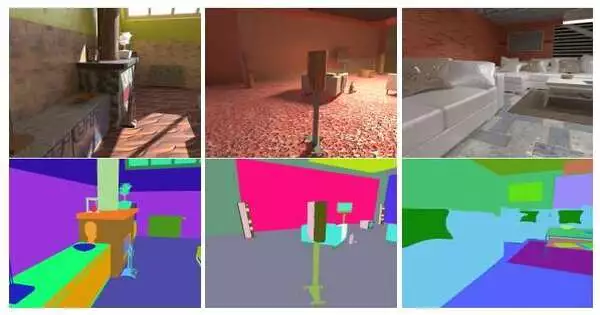

Researchers at MIT and Adobe Research have taken a step toward solving this challenge. They developed a technique that can identify all pixels in an image representing a given material, which is shown in a pixel selected by the user.

The method is accurate even when objects have varying shapes and sizes, and the machine-learning model they developed isn’t tricked by shadows or lighting conditions that can make the same material appear different.

“Knowing what material you’re interacting with is frequently critical. Although two objects may appear similar, their material qualities may differ. Our method can make it easier to select all of the other pixels in an image that are made of the same material,” says Prafull Sharma, an electrical engineering and computer science graduate student.

Even though the researchers trained their model using only “synthetic” data, which are created by a computer that modifies 3D scenes to produce many different images, the system works well on real indoor and outdoor scenes it has never seen before. The approach can also be used for videos. Once the user identifies a pixel in the first frame, the model can identify objects made of the same material throughout the rest of the video.

In addition to applications in scene understanding for robotics, this method could be used for image editing or incorporated into computational systems that deduce the parameters of materials in images. It could also be used for material-based web recommendation systems. (For instance, a shopper might be searching for clothing made from a particular type of fabric.)

“Often, knowing what you’re interacting with is very important. Although two items might seem to be comparable, they can have different material properties. Our method can facilitate the selection of all the other pixels in an image that are made from the same material,” says Prafull Sharma, a graduate student in electrical engineering and computer science and lead author of a paper on this technique.

Sharma’s co-authors include Julien Philip and Michael Gharbi, research scientists at Adobe Research; and senior authors William T. Freeman, the Thomas and Gerd Perkins Professor of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); Frédo Durand, a professor of electrical engineering and computer science and a member of CSAIL; and Valentin Deschaintre, a research scientist at Adobe Research. The study will be presented at SIGGRAPH 2023.

The system automatically identifies objects made of the same material throughout the remainder of the video once the user has identified a pixel in the first frame (the red dot on the yellow fabric in the far-left image). Credit: Massachusetts Institute of Technology

A new approach

Existing methods for material selection struggle to accurately identify all pixels representing the same material. For instance, some methods focus on entire objects, but one object can be composed of multiple materials, like a chair with wooden arms and a leather seat. Other methods rely on a predetermined set of materials, but these often carry broad labels like “wood,” even though there are thousands of varieties of wood.

Instead, Sharma and his collaborators developed a machine-learning approach that dynamically evaluates all pixels in an image to determine the material similarities between a pixel the user selects and all other regions of the image. If an image contains a table and two chairs, and the chair legs and tabletop are made of the same type of wood, their model can accurately identify those similar regions.

The researchers had to overcome a few hurdles before they could develop an AI method for selecting similar materials. First, no existing dataset contained materials labeled finely enough to train their machine-learning model. So the researchers rendered their own synthetic dataset of indoor scenes, which included 50,000 images and more than 16,000 materials randomly applied to each object.

“We needed a dataset where each individual type of material is marked independently,” Sharma says.

With the synthetic dataset in hand, they trained a machine-learning model to identify similar materials in real images, but it failed. The researchers realized that the problem was distribution shift. This happens when a model is trained on synthetic data but fails when it is tested on real-world data, which can be very different from the training set.

To solve this problem, they built their model on top of a pretrained computer vision model that has seen a large number of real images. By using the visual features that model had already learned, they drew on its prior knowledge.

“When using a neural network in machine learning, the representation and the procedure for solving the problem are typically learned simultaneously. We have separated the two. Our neural network simply focuses on completing the task after receiving the representation from the pretrained model,” he says.

The researchers’ system for identifying similar materials is resistant to changes in lighting, as demonstrated by the burning match heads in this example. Credit: Massachusetts Institute of Technology

Solving for similarity

The researchers’ model transforms the generic, pretrained visual features into material-specific features, and it does this in a way that is robust to object shapes or varied lighting conditions.

The model can then compute a material similarity score for every pixel in the image. When a user clicks a pixel, the model determines how close in appearance every other pixel is to the query. It produces a map in which each pixel is ranked on a scale from 0 to 1 for similarity.
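The idea of a per-pixel similarity map can be illustrated with a small sketch. It is not the researchers’ model: it assumes each pixel already has a feature vector, and it uses cosine similarity rescaled to the 0-to-1 range as a stand-in for whatever score the real system learns. The names `cosine` and `similarity_map` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def similarity_map(features, query):
    """Score every pixel against the clicked pixel.

    features: H x W grid (list of rows) of per-pixel feature vectors.
    query:    (row, col) of the pixel the user clicked.
    Returns an H x W grid of scores in [0, 1].

    Assumption: cosine similarity, rescaled from [-1, 1] to [0, 1],
    stands in for the learned material similarity described in the article.
    """
    q_row, q_col = query
    q = features[q_row][q_col]
    scores = []
    for row in features:
        scored_row = []
        for f in row:
            s = (cosine(q, f) + 1.0) / 2.0
            # Clamp to guard against tiny floating-point overshoot.
            scored_row.append(min(1.0, max(0.0, s)))
        scores.append(scored_row)
    return scores
```

In this toy version, a pixel whose features point in the same direction as the query scores near 1, and one pointing the opposite way scores near 0.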

He states, “The user simply clicks on one pixel, and then the model will automatically select all regions that have the same material.”

Because the model outputs a similarity score for each pixel, the user can fine-tune the results by setting a threshold, such as 90 percent similarity, and receive a map of the image with those regions highlighted. The method also works for cross-image selection: the user can select a pixel in one image and find the same material in a separate image.

The researchers found that their model predicted regions of an image that contained the same material more accurately than other approaches. When they measured how well the prediction compared with ground truth, meaning the actual areas of the image that consist of the same material, their model matched with about 92 percent accuracy.

In the future, they want to enhance the model so it can better capture the fine details of the objects in an image, which would boost the accuracy of their approach.
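Turning similarity scores into a selection amounts to a simple cutoff. The sketch below is illustrative only: `select_mask` and `selected_fraction` are hypothetical helper names, and the input is assumed to be a grid of scores between 0 and 1, as described above.

```python
def select_mask(similarity_map, threshold=0.9):
    """Mark each pixel True if its score meets the user's threshold.

    similarity_map: H x W grid (list of rows) of scores in [0, 1].
    threshold:      cutoff chosen by the user, e.g. 0.9 for 90 percent.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return [[score >= threshold for score in row] for row in similarity_map]


def selected_fraction(mask):
    """Share of pixels included in the selection (0.0 for an empty mask)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        return 0.0
    return sum(sum(row) for row in mask) / total
```

Raising the threshold shrinks the selection to only the closest matches, while lowering it pulls in regions that are merely similar.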
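The article does not say exactly which metric produced the 92 percent figure. One simple way to compare a predicted selection with ground truth, shown here purely as an assumption, is the share of pixels on which the two masks agree. The function name `pixel_agreement` is hypothetical.

```python
def pixel_agreement(predicted, ground_truth):
    """Fraction of pixels where the predicted mask matches the ground truth.

    Both arguments are H x W grids (lists of rows) of booleans.
    Assumption: plain per-pixel agreement; the researchers' actual
    evaluation metric may differ.
    """
    if len(predicted) != len(ground_truth):
        raise ValueError("masks must have the same number of rows")
    matches = 0
    total = 0
    for pred_row, true_row in zip(predicted, ground_truth):
        if len(pred_row) != len(true_row):
            raise ValueError("mask rows must have the same length")
        for p, t in zip(pred_row, true_row):
            matches += int(p == t)
            total += 1
    if total == 0:
        raise ValueError("masks must contain at least one pixel")
    return matches / total
```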

“The beauty and functionality of our world are enhanced by rich materials. However, computer vision algorithms typically ignore materials and concentrate primarily on objects. This paper makes a significant contribution to recognizing materials in images and video across a wide range of challenging conditions,” says Kavita Bala, Dean of the Cornell Bowers College of Computing and Information Science and Professor of Computer Science, who was not involved with this work. “Designers and end users alike may greatly benefit from this technology. For instance, a homeowner can envision how costly choices like reupholstering a couch or changing the carpeting in a room might look, and can be more confident in their design choices based on these visualizations.”

More information: Materialistic: Selecting Similar Materials in Images. prafullsharma.net/materialisti … tic_camera_ready.pdf