A team of AI researchers at Google’s DeepMind, working with a colleague from New York University, has developed an AI system called AlphaGeometry that has demonstrated the ability to solve complex geometry problems at a high level.

In their paper published in the journal Nature, the group describes their new AI system and the ideas they used in its development. The team at Nature has also published a podcast giving an overview of the new AI system.

Proving mathematical theorems can be a difficult task, and people who can do it well are considered valuable assets for institutions of higher learning and, at times, companies like Google. So a means of identifying such people has been established: the International Mathematical Olympiad. It is described as the world championship of mathematics competitions for high school students.

Because of the many difficulties inherent in using math in most modern applications, such as the design of computer systems, computer scientists have been hoping for AI systems that can solve complex mathematical expressions, prove theorems, or both. Until now, however, AI systems have not performed as well as hoped. In this new study, the team at DeepMind has created an AI system called AlphaGeometry that rivals gold-medal-winning students in the International Mathematical Olympiad.

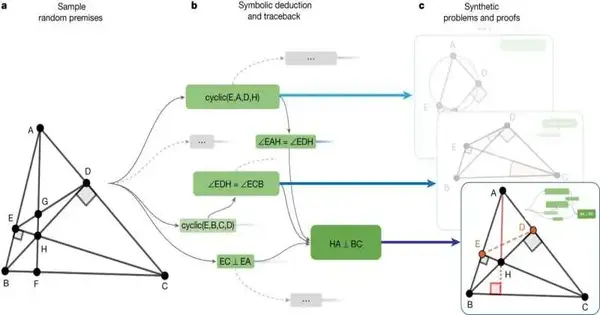

To create AlphaGeometry, the research team used a new approach. Rather than attempting to teach the system how to prove theorems using many human-provided examples, they used a neural language model that allowed the system to train itself. This was done by synthesizing millions of theorems and proofs at varying levels of complexity. They also added a symbolic deduction engine to help the system learn and solve increasingly complex problems without help from humans.
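The pairing of a language model with a symbolic deduction engine can be pictured with a toy sketch. This is not the authors' implementation: it assumes the deduction engine is a simple forward-chaining rule applier, and that the language model's job is to suggest an extra construction when deduction stalls. The names `deduce`, `solve`, `toy_propose` and `RULES` are hypothetical, and the language model is replaced by a hard-coded stub.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

Fact = str
Rule = Tuple[FrozenSet[Fact], Fact]  # (premises, conclusion)


def deduce(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """Symbolic step: apply rules repeatedly until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def solve(
    premises: Set[Fact],
    goal: Fact,
    rules: List[Rule],
    propose: Callable[[Set[Fact], Fact], Optional[Fact]],
    max_hints: int = 3,
) -> Tuple[bool, List[Fact]]:
    """Alternate deduction with proposed constructions until the goal is reached."""
    known = deduce(premises, rules)
    hints: List[Fact] = []
    for _ in range(max_hints):
        if goal in known:
            break
        hint = propose(known, goal)  # stand-in for the neural language model
        if hint is None:
            break
        hints.append(hint)
        known = deduce(known | {hint}, rules)
    return goal in known, hints


# Toy rules (assumed for illustration): an isosceles triangle has equal base angles.
RULES: List[Rule] = [
    (frozenset({"AB = AC", "D is the midpoint of BC"}),
     "triangle ABD is congruent to triangle ACD"),
    (frozenset({"triangle ABD is congruent to triangle ACD"}),
     "angle ABC = angle ACB"),
]


def toy_propose(known: Set[Fact], goal: Fact) -> Optional[Fact]:
    """Hard-coded stub; a real system would query a trained model here."""
    hint = "D is the midpoint of BC"
    return None if hint in known else hint


solved, hints = solve({"AB = AC"}, "angle ABC = angle ACB", RULES, toy_propose)
```

In this toy case, deduction alone cannot reach the goal from `AB = AC`; once the stub suggests the midpoint `D`, the two rules fire in sequence and the goal is derived.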

The researchers then tested their new system by giving it 30 problems faced by students in the International Mathematical Olympiad over the years 2002–2020 and found that it was able to solve 25 of them, significantly better than prior AI systems. They noted that its performance was comparable to that of the average gold medalist at the competition.

The research team noted that the system is currently programmed to work with specific types of geometry; however, they suggest that its repertoire could be extended to other domains.

More information: Trieu H. Trinh et al, Solving olympiad geometry without human demonstrations, Nature (2024). DOI: 10.1038/s41586-023-06747-5

DeepMind blog post: deepmind.google/discover/blog/ … system-for-geometry/