Before being tested and deployed in real-world environments, AI-powered robots are typically trained in simulation. These environments let developers safely test their machine learning techniques on a variety of robots and in numerous potential scenarios, without having to buy hardware, assemble robots, transport them to distant locations, or compromise the safety of deployed systems.

Amazon Alexa AI recently introduced a new simulation platform for embodied AI research, the field focused on creating autonomous robots. The platform, called Alexa Arena, was presented in a paper pre-published on arXiv and is openly available on GitHub.

Govind Thattai, the lead scientist for the Arena platform, told Tech Xplore that the team’s main goal was to create an interactive embodied AI framework to spur the development of next-generation embodied AI agents.

“Several embodied AI simulation platforms exist (e.g., Habitat, iGibson, and AI2Thor). These platforms enable embodied agents to move through and interact with objects in simulated environments, but the majority of them lack user-centricity,” said Qiaozi Gao, a co-creator of the Arena framework.


Because most currently available simulation platforms are not user-centric, developers who want to collect data on human-robot interactions frequently need to conduct real-world experiments, which are typically expensive and time-consuming. Alternatively, some teams build an “inferencing engine,” a computational tool that lets people interact directly with a simulated environment, though this also requires additional time and research.

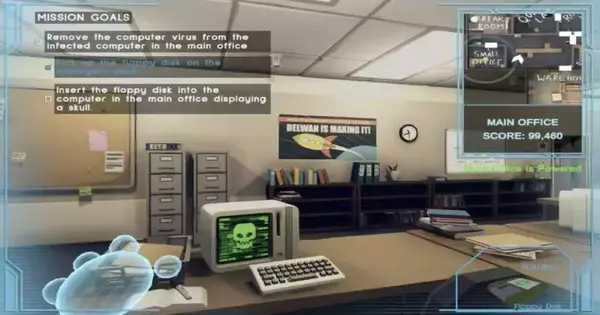

Credit: Gao et al.

Embodied agents must consistently interact with their surroundings while also learning from and adapting to other agents or humans. While current simulation platforms concentrate on task decomposition and navigation, Arena aims to fill the gaps that inevitably arise during the deployment and real-time evaluation of collaborative robots.

To support the creation and testing of embodied AI (EAI) agents and to close the gap between the development and deployment phases, Arena includes a set of user-centric features. It achieves this by making humans an essential part of EAI development and evaluation.

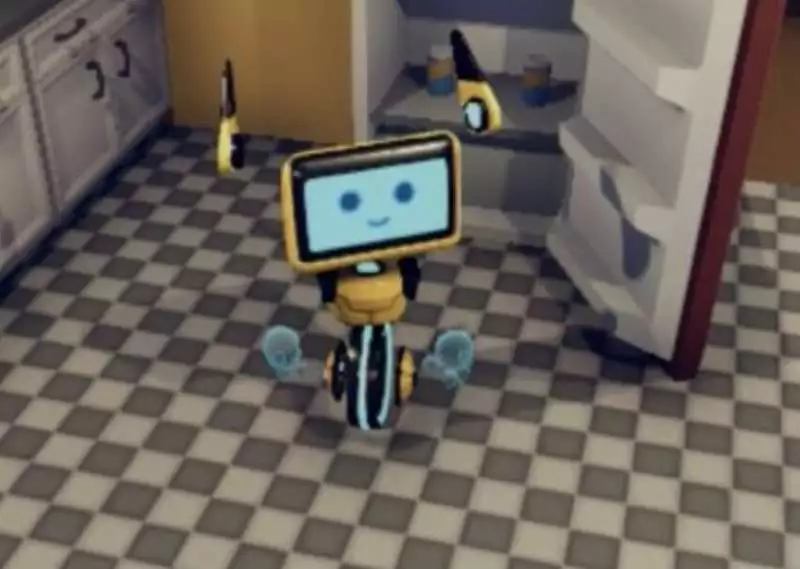

“We created Alexa Arena to address these challenges,” said Suhaila Shakiah, a creator of Arena’s ML components. “Our platform offers a framework with user-centric capabilities, such as smooth visuals during robot navigation, continuous background animations and sounds, viewpoints in rooms to simplify room-to-room navigation, and visual hints embedded in the scene that help human users generate suitable instructions for task completion. These features improve usability and user experience, enabling the development and assessment of embodied AI with humans in the loop.”

Credit: Gao et al.

Developers can create and test a variety of embodied AI agents with multimodal capabilities on the Alexa Arena platform. Based on users’ specific requests, these agents can interact with the relevant areas or objects in the virtual environment, a capability known as visual grounding. They can also be trained to follow users’ instructions in natural language, allowing them to interact with humans and other robots.

“Alexa Arena pushes the limits of human-robot interaction,” said Xiaofeng Gao, an Arena developer. The platform provides a user-centered, interactive framework for designing robotic tasks and missions that require navigating multiple virtual rooms and manipulating objects in real time. In a setting akin to a video game, users can converse in natural language with virtual robots, giving them helpful feedback and assisting them as they learn and complete their tasks.

For both developers and end users, Alexa Arena’s interface is designed to be more straightforward than those of other simulation platforms currently in use. Using built-in hints and features that push the boundaries of human-computer interaction and embodied AI, users can assign specific tasks and missions to the robots in the simulation environment. This makes it simpler and more efficient to gather data on human-robot interactions, and it helps train robots to handle interactive tasks involving a variety of objects and tools.

Credit: Gao et al.

Programmers and researchers around the world may soon use the user-centered platform to create highly effective embodied AI agents and intelligent robots. In the meantime, the team intends to improve Alexa Arena by adding new features and simulated scenarios.

“We will continue to improve the Arena platform to support higher and better runtime performance, more scenes, a richer collection of objects, and a wider range of interactions,” Thattai added. “We’ll also keep investing in the broader field of embodied AI, working to create the next generation of intelligent robots that can carry out practical tasks and interact with people in a natural way.”

More information: Qiaozi Gao et al, Alexa Arena: A User-Centric Interactive Platform for Embodied AI, arXiv (2023). DOI: 10.48550/arxiv.2303.01586