We tend to take our sense of touch for granted in everyday settings, yet it is essential to how we interact with our surroundings. Imagine reaching into the refrigerator to grab an egg for breakfast. As your fingers touch its shell, you can tell that the egg is cold, that its shell is smooth, and how firmly you need to grip it to avoid crushing it. These are abilities that robots, even those directly controlled by humans, can struggle with.

A new artificial skin developed at Caltech can now enable robots to sense temperature, pressure, and even toxic chemicals through a simple touch.

“Modern robots are playing an increasingly important role in security, agriculture, and manufacturing. Can we give these robots a sense of touch and temperature? Can we teach them to detect compounds such as explosives and nerve agents, as well as biohazards such as pathogenic bacteria and viruses? This is something we’re working on.”

Wei Gao, Caltech’s assistant professor of medical engineering

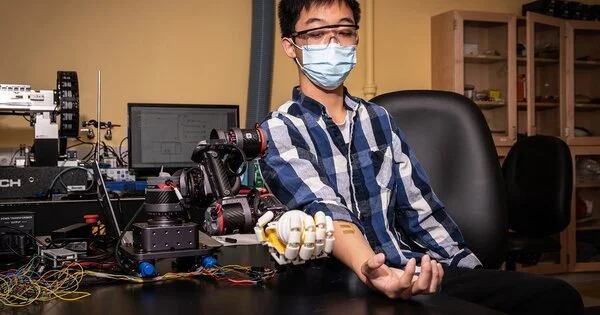

This new skin technology is part of a robotic platform that integrates the artificial skin with a robotic arm and with sensors that attach to human skin. A machine-learning system that links the two lets the human user control the robot with their own movements while receiving feedback through their own skin. The multimodal robotic-sensing platform, dubbed M-Bot, was developed in the lab of Wei Gao, Caltech’s assistant professor of medical engineering, an investigator with the Heritage Medical Research Institute, and a Ronald and JoAnne Willens Scholar. It aims to give people more precise control over robots while also protecting them from potential hazards.

“Modern robots are playing an increasingly important role in security, farming, and manufacturing,” Gao says. “Can we give these robots a sense of touch and a sense of temperature? Can we also make them sense chemicals like explosives and nerve agents, or biohazards like infectious bacteria and viruses? We’re working on this.”

The skin

A comparison of a human hand and a robotic hand reveals significant differences. Whereas human fingers are soft, squishy, and fleshy, robotic fingers tend to be hard, metallic, plasticky, or rubbery. The printable skin developed in Gao’s lab is a gelatinous hydrogel that makes robot fingertips much more like our own.

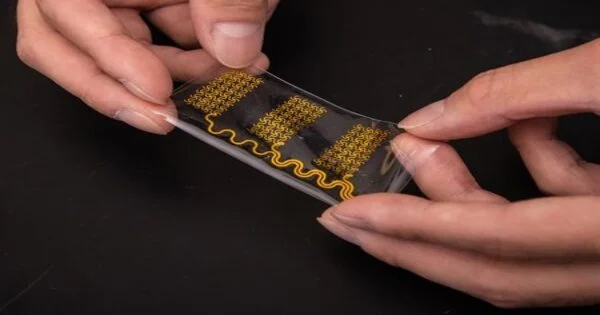

Embedded within that hydrogel are the sensors that give the artificial skin its ability to detect the world around it. These sensors are literally printed onto the skin, much as an inkjet printer applies text to a sheet of paper.

“Inkjet printing has this cartridge that ejects droplets, and those droplets are an ink solution. But they could be a solution that we develop instead of regular ink,” Gao says. “We’ve developed a variety of nanomaterial inks ourselves.”

After printing a scaffold of silver nanoparticle wires, the researchers can then print layers of micrometer-scale sensors that can be designed to detect a variety of things. Because the sensors are printed, it is quicker and easier for the lab to design and test new kinds of sensors.

“When we want to detect one given compound, we make sure the sensor has a high electrochemical response to that compound,” Gao says. “Graphene impregnated with platinum detects the explosive TNT quickly and precisely. For a virus, we are printing carbon nanotubes, which have a very high surface area, and attaching antibodies for the virus to them. This is all mass-producible and scalable.”

An interactive system

Gao’s team has coupled this skin to an interactive system that lets a human user control the robot through their own muscle movements while also relaying feedback from the robot’s skin to the user’s own skin.

This part of the system uses additional printed components, in this case electrodes attached to the human operator’s forearm. The electrodes are similar to those used to measure brain waves, but they are positioned instead to sense the electrical signals generated by the operator’s muscles as they move their hand and wrist. A simple flick of the operator’s wrist tells the robotic arm to move up or down, and clenching or spreading the operator’s fingers prompts the same action by the robotic hand.

“We used machine learning to convert those signals into gestures for robotic control,” Gao says. “We trained the model on six different gestures.”
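To make the idea of turning muscle signals into robot commands more concrete, here is a toy sketch in Python. It is not the M-Bot team’s model: the article says only that machine learning maps forearm signals to six gestures, so the gesture names, the root-mean-square (RMS) features, the nearest-centroid classifier, and the command names below are all illustrative assumptions.

```python
import math
from statistics import fmean

# Hypothetical gesture labels: the article mentions six trained gestures but
# does not list them, so these names are placeholders.
GESTURES = ["wrist_up", "wrist_down", "fist", "spread", "rest", "pinch"]

# Hypothetical gesture-to-command table, following the article's description
# (wrist flick -> arm up/down, clench/spread -> robotic hand closes/opens).
COMMANDS = {
    "wrist_up": "arm_up",
    "wrist_down": "arm_down",
    "fist": "hand_close",
    "spread": "hand_open",
}


def rms_features(window):
    """RMS amplitude of each electrode channel in one short EMG window.

    `window` is a list of channels, each a list of voltage samples.
    """
    return [math.sqrt(fmean(s * s for s in channel)) for channel in window]


def train_centroids(examples):
    """Average the feature vectors of labeled training windows, per gesture."""
    grouped = {}
    for window, label in examples:
        grouped.setdefault(label, []).append(rms_features(window))
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(window, centroids):
    """Return the gesture whose centroid is nearest (Euclidean) to this window."""
    features = rms_features(window)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


def to_command(gesture):
    """Translate a gesture into a robot command; unmapped gestures hold still."""
    return COMMANDS.get(gesture, "hold")


# Usage: two made-up training windows from a two-electrode armband.
examples = [
    ([[0.9, -0.8, 0.9], [0.1, -0.1, 0.1]], "wrist_up"),
    ([[0.1, -0.1, 0.1], [0.9, -0.9, 0.8]], "wrist_down"),
]
centroids = train_centroids(examples)
new_window = [[0.8, -0.9, 0.85], [0.05, -0.1, 0.1]]
print(to_command(classify(new_window, centroids)))
```

A nearest-centroid rule is about the simplest classifier that still "learns" from labeled examples; a real system would likely use richer features and a stronger model.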

The system also sends feedback to the operator’s skin in the form of a very mild electrical sensation. Returning to the example of picking up an egg, if the operator were gripping the egg too tightly with the robotic hand and was in danger of crushing its shell, the system would alert the operator through what Gao describes as “a little tingle” on the operator’s skin.
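The egg example boils down to a threshold check on the robot’s fingertip readings. The Python sketch below is purely illustrative: the article does not describe how the real system decides when to send its “little tingle,” so the force units, the safe-force limit, and the warning margin are all hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class GripMonitor:
    """Toy haptic-alert rule: warn the operator before the robot crushes an object.

    Both parameters are hypothetical; they are not taken from the M-Bot system.
    """

    safe_force_n: float = 2.0    # assumed force (newtons) the object can tolerate
    warning_margin: float = 0.8  # start warning at 80% of that limit

    def should_tingle(self, measured_force_n: float) -> bool:
        """True if the fingertip force is close enough to the limit to alert."""
        return measured_force_n >= self.warning_margin * self.safe_force_n


# Usage: step through a few fingertip force readings as the grip tightens.
monitor = GripMonitor()
for force in [0.5, 1.2, 1.7, 2.1]:
    print(force, monitor.should_tingle(force))
```

Warning below the actual limit gives the operator time to relax their grip before the shell cracks.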

Gao hopes the system will find applications in everything from agriculture to security to environmental protection, allowing robot operators to “feel” how much pesticide is being applied to a field of crops, whether a suspicious backpack left in an airport bears traces of explosives, or where a pollution source lies in a river. First, however, he wants to make some improvements.

“I think we have shown a proof of concept,” he says. “But we want to improve the stability of this robotic skin to make it last longer. By optimizing new inks and new materials, we hope this can be used for different kinds of targeted detection. We want to put it on more powerful robots and make them smarter and more intelligent.”

The paper describing the research, titled “All-printed soft human-machine interface for robotic physicochemical sensing,” appears in the June 1 issue of Science Robotics.