Two UCLA bioengineers and a former postdoctoral researcher have developed a new class of bionic 3D camera systems that mimic flies’ multiview vision and bats’ natural sonar sensing, producing multidimensional imaging with an extraordinary depth range that can also scan through blind spots.

Powered by computational image processing, the camera can decipher the size and shape of objects hidden around corners or behind other items. The technology could be incorporated into autonomous vehicles or medical imaging tools with sensing capabilities far beyond what is considered state of the art today. The research has been published in Nature Communications.

Using a form of echolocation, or sonar, bats can form a vivid picture of their surroundings in the dark. Their high-frequency squeaks bounce off their surroundings and are picked up again by their ears. The minuscule differences in how long the echo takes to reach the nocturnal animals, along with the intensity of the sound, tell them in real time where things are, what is in the way, and how close potential prey is.

Many insects have geometrically shaped compound eyes, in which each “eye” is composed of hundreds to tens of thousands of individual units for sight, making it possible to see the same thing from multiple lines of sight. For example, flies’ bulbous compound eyes give them a nearly 360-degree view even though their eyes have a fixed focal length, which makes it difficult for them to see anything far away, such as a flyswatter held aloft.

Inspired by these two natural phenomena found in flies and bats, the UCLA-led team set out to design a high-performance 3D camera system with advanced capabilities that leverage these advantages but also address nature’s shortcomings.

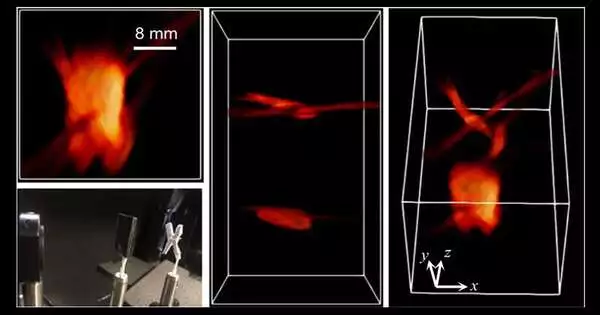

3D imaging through occlusions using Compact Light-field Photography, or CLIP.

“While the idea itself has been tried, seeing across a range of distances and around occlusions has been a major hurdle,” said study leader Liang Gao, an associate professor of bioengineering at the UCLA Samueli School of Engineering. “To address that, we developed a novel computational imaging framework, which for the first time enables the acquisition of a wide and deep panoramic view with simple optics and a small array of sensors.”

Called “Compact Light-field Photography,” or CLIP, the framework allows the camera system to “see” with an extended depth range and around objects. In experiments, the researchers demonstrated that their system can “see” hidden objects that conventional 3D cameras do not spot.

The researchers also use a type of LiDAR, or “Light Detection and Ranging,” in which a laser scans the surroundings to create a 3D map of the area.
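As a rough illustration of how a laser scan becomes a 3D map, the sketch below converts individual range readings into points in space. It is a generic textbook conversion, not the researchers’ code; the function name `scan_to_points` and the (azimuth, elevation, range) input format are assumptions made for this example.

```python
import math


def scan_to_points(measurements):
    """Convert (azimuth_deg, elevation_deg, range_m) readings to (x, y, z) points.

    Hypothetical helper for illustration: azimuth is measured in the horizontal
    plane from the x-axis, elevation is measured upward from that plane.
    """
    points = []
    for azimuth_deg, elevation_deg, range_m in measurements:
        az = math.radians(azimuth_deg)
        el = math.radians(elevation_deg)
        # Standard spherical-to-Cartesian conversion.
        x = range_m * math.cos(el) * math.cos(az)
        y = range_m * math.cos(el) * math.sin(az)
        z = range_m * math.sin(el)
        points.append((x, y, z))
    return points
```

Each reading pairs the direction the laser was pointing with the distance it measured, and the collection of resulting points is what makes up the 3D map.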

Conventional LiDAR, without CLIP, would take a high-resolution snapshot of the scene but miss hidden objects, much as human eyes would.

Using seven LiDAR cameras with CLIP, the array takes a lower-resolution image of the scene, processes what the individual cameras see, and then reconstructs the combined scene in high-resolution 3D imaging. The researchers demonstrated that the camera system could image a complex 3D scene with several objects, all set at different distances.

“If you’re covering one eye and looking at your laptop, and there’s a coffee mug just slightly hidden behind it, you might not see it, because the laptop blocks the view,” said Gao, who is also a member of the California NanoSystems Institute. “But if you use both eyes, you’ll notice you get a better view of the object. That’s sort of what’s happening here, but now imagine seeing the mug with an insect’s compound eye. Now multiple views of it are possible.”

According to Gao, CLIP helps the camera array make sense of what is hidden. Combined with LiDAR, the system can achieve the bat echolocation effect, so one can sense a hidden object by how long it takes for light to bounce back to the camera.
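The timing principle can be sketched in a few lines: light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. This is the general time-of-flight relation rather than anything specific to CLIP, and the function name `distance_from_round_trip` is an assumption made for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum (exact, by definition)


def distance_from_round_trip(round_trip_s):
    """Return the one-way distance in meters for a light pulse's round-trip time.

    Hypothetical helper for illustration: the pulse covers the distance twice
    (out and back), hence the division by two.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2
```

For example, a pulse that returns after 20 nanoseconds corresponds to an object roughly 3 meters away, which shows how finely the arrival time must be measured to resolve nearby objects.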

The co-lead authors of the published research are UCLA bioengineering graduate student Yayao Ma, who is a member of Gao’s Intelligent Optics Laboratory, and Xiaohua Feng, a former UCLA Samueli postdoc in Gao’s lab who is now a research scientist at the Research Center for Humanoid Sensing at the Zhejiang Laboratory in Hangzhou, China.

More information: Xiaohua Feng et al, Compact light field photography towards versatile three-dimensional vision, Nature Communications (2022). DOI: 10.1038/s41467-022-31087-9

Journal information: Nature Communications