Tissue-based diagnosis of disease relies on the visual examination of biopsied tissue samples by pathologists using an optical microscope. Before a tissue sample is placed under the microscope, special chemical dyes are applied to stain it, which enhances image contrast and brings color to different tissue constituents. This chemical staining process is laborious and time-consuming, and it is performed by human technicians. In many clinical cases, pathologists need special stains and chemicals beyond the commonly used hematoxylin and eosin (H&E) stain to improve the accuracy of their diagnoses. However, these additional stains are slow to apply and add cost and delay.

In a new study published in ACS Photonics, UCLA researchers developed a computational approach powered by artificial intelligence to virtually transfer (re-stain) images of tissue already stained with H&E into other stain types without using any chemicals. Besides saving expert technician time, staining-related costs, and the toxic waste generated by histology labs, this virtual re-staining method is also more repeatable than staining performed by human technicians. It also preserves the biopsied tissue for more advanced diagnostic tests, eliminating the need for an additional biopsy.

Previous virtual stain transfer methods faced one major problem: a tissue slide can be stained only once with one type of stain, and washing away the existing stain to apply another chemical stain is very difficult and rarely done in clinical settings. This makes it very hard to obtain paired images of different stain types, even though such pairs are an essential ingredient of deep learning-based image translation methods.

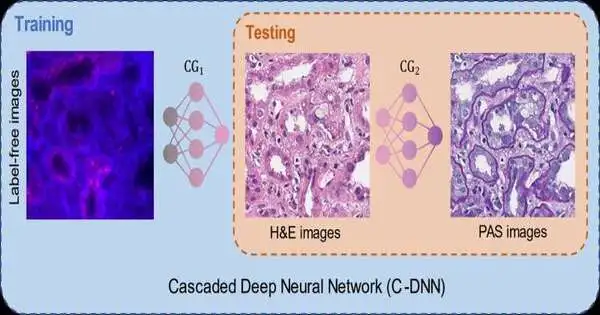

To address this problem, the UCLA team demonstrated a new virtual stain transfer framework that uses a cascade of two deep neural networks working together. During training, the first network learned to virtually stain autofluorescence images of unstained (label-free) tissue into H&E, and the second network, connected to the first, learned to transfer H&E into another special stain, periodic acid-Schiff (PAS). This cascaded training strategy let the networks exploit histochemically stained image data of both H&E and PAS, yielding highly accurate stain-to-stain transformations and virtual re-staining of existing tissue slides.
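To make the idea of a cascade trained against two stain targets more concrete, here is a deliberately tiny sketch. It is not the authors' method: each "network" is replaced by a hypothetical per-pixel linear color map, the data are synthetic, and the names (`make_data`, `train_cascade`, the weight matrices) are illustrative only. What it does show is the wiring: network 2 is fed network 1's output during training, the loss sums an H&E error and a PAS error so both stains supervise the cascade, and afterwards network 2 can be run on its own over existing H&E images to perform stain transfer.

```python
import numpy as np

# Hypothetical toy model: each "network" is a per-pixel linear colour map.
# Real virtual-staining models are deep convolutional networks; this only
# illustrates how a cascade is wired and trained with two supervision targets.


def make_data(rng, n_pixels=500):
    """Synthetic pixels: 2-channel 'autofluorescence' -> RGB 'H&E' -> RGB 'PAS'."""
    w_he_true = np.array([[0.8, 0.3, 0.5],
                          [0.2, 0.7, 0.4]])
    w_pas_true = np.array([[0.9, 0.1, 0.3],
                           [0.2, 0.6, 0.1],
                           [0.1, 0.3, 0.8]])
    autofluor = rng.uniform(0.0, 1.0, size=(n_pixels, 2))
    he = autofluor @ w_he_true  # stands in for histochemically stained H&E
    pas = he @ w_pas_true       # stands in for histochemically stained PAS
    return autofluor, he, pas


def train_cascade(autofluor, he, pas, lr=0.3, steps=4000, seed=1):
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=0.1, size=(2, 3))  # network 1: autofluorescence -> H&E
    w2 = rng.normal(scale=0.1, size=(3, 3))  # network 2: H&E -> PAS
    n = he.size  # elements averaged in each MSE term (pas has the same size)
    for _ in range(steps):
        he_hat = autofluor @ w1  # output of network 1
        pas_hat = he_hat @ w2    # network 2 consumes network 1's output
        # Loss = MSE(he_hat, he) + MSE(pas_hat, pas): both stains supervise training.
        d_he = 2.0 * (he_hat - he) / n
        d_pas = 2.0 * (pas_hat - pas) / n
        grad_w2 = he_hat.T @ d_pas
        # The PAS error also flows back through network 2 into network 1.
        grad_w1 = autofluor.T @ (d_he + d_pas @ w2.T)
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    he_hat = autofluor @ w1
    loss = np.mean((he_hat - he) ** 2) + np.mean((he_hat @ w2 - pas) ** 2)
    return w1, w2, loss


# Train the cascade on synthetic "label-free" pixels with H&E and PAS targets.
autofluor, he, pas = make_data(np.random.default_rng(0))
w1, w2, loss = train_cascade(autofluor, he, pas)

# Stain transfer on new, already "H&E-stained" pixels: run network 2 alone.
_, new_he, new_pas = make_data(np.random.default_rng(42))
virtual_pas = new_he @ w2
transfer_mse = np.mean((virtual_pas - new_pas) ** 2)
print(f"training loss: {loss:.2e}, stain-transfer MSE: {transfer_mse:.2e}")
```

The linear maps keep the example small and let the gradients be written by hand; in practice each stage would be a deep image-to-image network trained with a framework such as PyTorch or JAX, but the two-term loss and the "train together, deploy the second stage alone" pattern carry over.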

This virtual tissue re-staining method can be applied to various special stains used in histology and could open new doors in digital pathology and tissue-based diagnostics.

This research was led by Dr. Aydogan Ozcan, Chancellor's Professor and Volgenau Chair for Engineering Innovation at UCLA Electrical and Computer Engineering and Bioengineering. The other authors of this work are Xilin Yang, Bijie Bai, Yijie Zhang, Yuzhu Li, Kevin de Haan, and Tairan Liu. Dr. Ozcan also holds an appointment in the Department of Surgery at the UCLA David Geffen School of Medicine, and he is an associate director of the California NanoSystems Institute (CNSI).

More information: Xilin Yang et al, Virtual Stain Transfer in Histology via Cascaded Deep Neural Networks, ACS Photonics (2022). DOI: 10.1021/acsphotonics.2c00932

Journal information: ACS Photonics