Imagine you’re looking through your phone’s photos when you come across an image you don’t immediately recognize. On the couch, something seems to be fuzzy; might it at any point be a cushion or a coat? Of course, it clicks after a few seconds. That wad of cushion is your companion’s feline, Mocha. While a portion of your photographs could be perceived in a moment, for what reason was this feline photograph considerably more troublesome?

MIT Software Engineering and Computerized Reasoning Lab (CSAIL) specialists were amazed to find that notwithstanding the basic significance of understanding visual information in vital regions going from medical care to transportation to family gadgets, the idea of a picture’s acknowledgment trouble for people has been on the whole overlooked.

Datasets have been one of the most important factors in the development of AI that is based on deep learning; however, aside from the fact that bigger is better, very little is known about how data drives development in large-scale deep learning.

“Some images take longer to recognize inherently, and it’s critical to understand the brain’s activity during this process and how it relates to machine learning models. Perhaps our present models are missing complicated brain networks or unique mechanisms that become obvious only when tested with difficult visual inputs. This research is critical for understanding and improving machine vision models.”

Mayo, a lead author of a new paper on the work.

Despite the fact that object recognition models perform well on current datasets, including those specifically designed to challenge machines with debiased images or distribution shifts, humans perform better in real-world applications that require understanding visual data.

This issue continues to happen, to some degree, since we have no direction on the outright trouble of a picture or dataset. It is difficult to objectively assess progress toward human-level performance, to cover the range of human abilities, and to increase the challenge posed by a dataset without controlling for the difficulty of the images used for evaluation.

To fill in this information hole, David Mayo, a MIT Ph.D. understudy in electrical designing and software engineering and a CSAIL partner, dug into the profound universe of picture datasets, investigating why certain pictures are more challenging for people and machines to perceive than others.

“A few pictures innately take more time to perceive, and it’s fundamental to comprehend the mind’s action during this cycle and its connection to AI models. Perhaps our current models lack complex neural circuits or unique mechanisms that can only be observed when tested with challenging visual stimuli. This investigation is vital for understanding and upgrading machine vision models,” says Mayo, the lead creator of another paper on the work.

As a result, a new metric known as the “minimum viewing time” (MVT) was created to measure how difficult it is to recognize an image based on how long it takes a person to view it before they can correctly identify it.

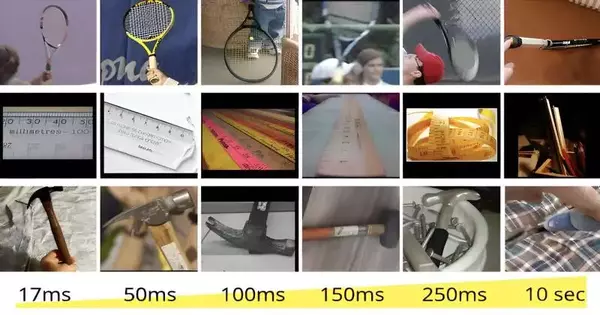

Utilizing a subset of ImageNet, a well-known dataset in AI, and ObjectNet, a dataset intended to test object acknowledgment strength, the group showed pictures to members for changing spans from as short as 17 milliseconds to up to 10 seconds and requested that they pick the right item from a bunch of 50 choices.

The team discovered, after more than 200,000 image presentation trials, that existing test sets, such as ObjectNet, appeared to favor shorter, simpler MVT images. The majority of benchmark performance was derived from images that are simple for humans.

The project found interesting patterns in the performance of models, especially when it came to scaling. The larger models showed extensive enhancement for less difficult pictures but gained less headway on additional difficult pictures. The CLIP models, which incorporate vision and language, stood out because they moved toward recognition that was more human-like.

“Generally, object acknowledgment datasets have been slanted towards less-complex pictures, a training that has prompted an expansion in model execution measurements, not really intelligent of a model’s power or its capacity to handle complex visual errands. Our exploration uncovers that harder pictures represent a more intense test, causing a dissemination shift that is frequently not represented in standard assessments,” says Mayo.

“We delivered picture sets labeled by trouble alongside instruments to consequently register MVT, empowering MVT to be added to existing benchmarks and stretched out to different applications. These incorporate estimating test set trouble prior to sending genuine frameworks, finding brain associates of picture trouble, and propelling item acknowledgment methods to close the hole between benchmark and certifiable execution.”

“One of my greatest focus points is that we currently have one more aspect to assess models on. We need models that can perceive any picture regardless of whether—maybe particularly in the event that—it’s difficult for a human to perceive. We’re quick to measure what this would mean. Our outcomes show that, in addition to the fact that this is not the situation with the present cutting edge, our flow assessment strategies don’t see us when it is the case since standard datasets are so slanted toward simple pictures,” says Jesse Cummings, a MIT graduate understudy in electrical designing and software engineering and co-first creator with Mayo on the paper.

From ObjectNet to MVT

A couple of years prior, the group behind this venture distinguished a critical test in the field of AI: models were battling with out-of-dispersion pictures or pictures that were not very much addressed in the preparation information. Enter ObjectNet, a dataset of photographs taken in real-world settings.

The dataset enlightened the exhibition hole between AI models and human acknowledgment capacities by killing misleading connections present in different benchmarks—for instance, between an article and its experience. As a result of ObjectNet’s illumination of the discrepancy between the performance of machine vision models in real-world applications and on datasets, numerous researchers and developers were encouraged to use it, which in turn led to an improvement in model performance.

Quick forward to the present, and the group has made their exploration a stride further with MVT. This new method compares how models perform when presented with the easiest and hardest images, in contrast to previous approaches that focused solely on absolute performance.

The study also looked into how image difficulty could be explained and tested to see if it was similar to how humans process visual information. Utilizing measurements like c-score, expectation profundity, and ill-disposed power, the group observed that harder pictures are handled diversely by networks. ” According to Mayo, “a comprehensive semantic explanation of image difficulty continues to elude the scientific community, despite there being observable trends, such as easier images being more prototypical.”

In the domain of medical services, for instance, the relevance of understanding visual intricacy turns out to be considerably more articulated. The variety and difficulty of the distribution of the images affect AI models’ ability to interpret medical images like X-rays. The scientists advocate for a careful examination of troubled dissemination customized for experts, guaranteeing man-made intelligence frameworks are assessed in light of master principles as opposed to layman translations.

Mayo and Cummings are, as of now, viewing the neurological underpinnings of visual acknowledgment and testing whether the cerebrum displays differential action while handling simple as opposed to testing pictures. The study hopes to clarify how our brains accurately and efficiently decode the visual world by determining whether complex images recruit additional brain regions not typically associated with visual processing.

Toward human-level performance In the future, the researchers are not just looking into ways to improve AI’s ability to predict image difficulty. The group is chipping away at distinguishing connections with survey time trouble to create more earnest or simpler renditions of pictures.

The researchers acknowledge the study’s limitations, particularly with regard to the separation of object recognition and visual search tasks. The current approach ignores the difficulties posed by cluttered images and instead focuses on object recognition.

According to Mayo, “This comprehensive approach opens new avenues for understanding and advancing the field” and “addresses the long-standing challenge of objectively assessing progress toward human-level performance in object recognition.”

“With the possibility to adjust the Base Review Time trouble metric for various visual assignments, this work prepares for more vigorous, human-like execution in object acknowledgment, guaranteeing that models are genuinely scrutinized and are prepared for the intricacies of certifiable visual comprehension.”

Alan L. Yuille, the Bloomberg Distinguished Professor of Cognitive Science and Computer Science at Johns Hopkins University, who was not involved in the research for the paper, describes it as “a fascinating study of how human perception can be used to identify weaknesses in the ways in which the ways AI vision models are typically benchmarked, which overestimate AI performance by focusing on easy images.” Yuille was not involved in the writing of the paper.

“This will assist with growing more sensible benchmarks, driving not exclusively to enhancements to computer-based intelligence, yet in addition to making more attractive examinations among artificial intelligence and human discernment.”

“It’s generally asserted that PC vision frameworks currently outflank people, and on some benchmark datasets, that is valid,” says human-centered specialized staff member Simon Kornblith, Ph.D. ’17, who was additionally not engaged with this work.

“Notwithstanding, a ton of the trouble in those benchmarks comes from the lack of definition of what’s in the pictures. The average person simply lacks sufficient knowledge to classify various dog breeds. Instead, the focus of this work is on images, which people can only get right given enough time. Computer vision systems typically have a harder time processing these images, but even the best systems are only marginally worse than humans.”

More information: Paper: How hard are computer vision datasets? Calibrating dataset difficulty to viewing time