Researchers must make ever more sophisticated measurements as technology shrinks to the nanoscale and as we face global challenges from the effects of climate change.

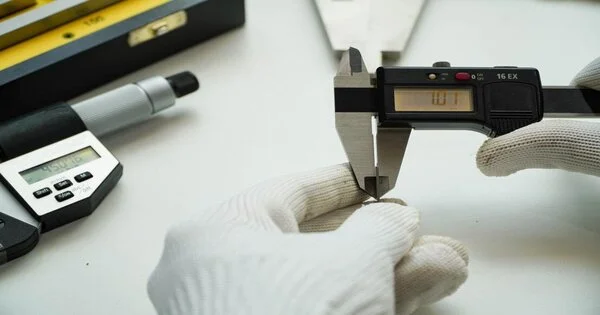

As industry works more and more at the nanometer scale (a nanometer is a billionth of a meter), there is a need to measure more reliably and accurately things we can barely see. This requires metrology, the science of measurement.

Nanoscale metrology is useful in everyday life, for example in measuring doses of medicine or in the development of computer chips for our digital devices.

“Metrology is required anywhere you make measurements or want to compare measurements. Industry requires AFM resolution to determine distances between extremely small structures.”

Virpi Korpelainen, senior scientist at the Technical Research Center of Finland

“Metrology is required wherever you make measurements or want to compare measurements,” said Virpi Korpelainen, senior scientist at the Technical Research Center of Finland and National Metrology Institute in Espoo, Finland.

Since the earliest civilizations, standardized and consistent measurements have always been crucial to the smooth functioning of society. In ancient times, physical quantities such as body measurements were used.

One of the earliest known units was the cubit, which was roughly the length of a forearm. The Romans used fingers and feet in their measurement systems, while the story goes that Henry I of England (circa 1068 to 1135) tried to standardize the yard as the distance from his nose to his thumb.

Standard units

Standardization demands precise definitions and consistent measurements. In the interest of greater accuracy, a French government commission in the 1790s standardized the meter as the basic unit of distance. This set Europe on the path to the standardized International System of Units (SI), which has been evolving ever since.

Since 2018, some key measurement units have been redefined. The kilogram, the ampere, the kelvin, and the mole are now based on fundamental constants of nature instead of physical artifacts. This is because physical artifacts change over time, as happened with the prototype of the kilogram, which lost a tiny amount of mass over the 100 years after it was made. With this new approach, which was adopted after years of careful science, the definitions won’t change.

This progress is often driven by remarkably sophisticated science familiar mainly to metrologists. Fundamental constants such as the speed of light in a vacuum (meter), the hyperfine transition frequency of the cesium-133 atom (second), and the Planck constant (kilogram) are used to define key units of measurement under the SI.

“When you buy a measuring instrument, people typically don’t think about where the scale comes from,” said Korpelainen. This goes for scientists and engineers too.

Once the domain of research scientists, the nanoscale is increasingly important in industry. Nanotechnology, computer chips, and medicines typically rely on very accurate measurements at very small scales.

Even the most advanced microscopes need to be calibrated, meaning that steps must be taken to standardize their measurements of the very small. Korpelainen and colleagues around Europe are developing improved atomic force microscopes (AFMs) in an ongoing project called MetExSPM.

An AFM is a type of microscope that gets so close to a sample that it can almost reveal its individual atoms. “In industry, people need traceable measurements for quality control and for buying components from subcontractors,” said Korpelainen.

The project will enable AFMs to take reliable measurements at nanoscale resolution using high-speed scanning, even on relatively large samples.

“Industry needs AFM resolution if they want to measure distances between really small structures,” Korpelainen said. Research on AFMs has revealed that measurement errors are easily introduced at this scale and can be as high as 30%.
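To give a sense of what correcting such an error involves, here is a minimal Python sketch of a linear scale calibration. It assumes a hypothetical reference grating whose pitch is certified by a metrology institute. The function names and all numbers are illustrative assumptions, not values or methods taken from MetExSPM.

```python
def scale_correction_factor(certified_pitch_nm, measured_pitch_nm):
    """Ratio that maps the instrument's raw length scale onto the certified one."""
    if measured_pitch_nm <= 0:
        raise ValueError("measured pitch must be positive")
    return certified_pitch_nm / measured_pitch_nm


def corrected_distance(raw_distance_nm, factor):
    """Apply the linear scale correction to a raw distance reading."""
    return raw_distance_nm * factor


# Hypothetical example: the grating is certified at 100 nm,
# but the uncalibrated microscope reports 130 nm (a 30% scale error).
certified_nm = 100.0
measured_nm = 130.0

relative_error = (measured_nm - certified_nm) / certified_nm
factor = scale_correction_factor(certified_nm, measured_nm)

# A raw reading of 65 nm on an unknown sample, corrected to the certified scale.
corrected_nm = corrected_distance(65.0, factor)

print(f"relative scale error: {relative_error:.0%}")
print(f"corrected distance: {corrected_nm:.1f} nm")
```

A single multiplicative factor is the simplest possible model. Real instruments can also show nonlinearity and drift, which this sketch deliberately ignores.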

The demand for small, sophisticated, high-performing devices means the nanoscale is growing in importance. Korpelainen used an AFM and lasers to calibrate precision scales for other microscopes.

She also coordinated another project, 3DNano, to measure nanoscale 3D objects that are not always perfectly symmetrical. Precise measurements of such objects support the development of new technologies in medicine, energy storage, and space exploration.

Radon flux

Dr. Annette Röttger, a nuclear physicist at PTB, Germany’s national metrology institute, is interested in measuring radon, a radioactive gas with no color, smell, or taste.

Radon occurs naturally. It originates from decaying uranium underground. In general, the gas is harmless when it escapes into the atmosphere, but it can reach dangerous levels when it accumulates in buildings, potentially causing illness in occupants.

But there is another reason why Röttger is interested in measuring radon. She believes it can improve the measurement of important greenhouse gases (GHGs).

“For methane and carbon dioxide, you can measure the amounts in the atmosphere precisely, but you can’t measure the flux of these gases coming out of the ground,” said Röttger.

Flux is the rate of seepage of a gas. It is a helpful measurement for tracing the quantities of other GHGs, such as methane, that seep out of the ground. Measurements of methane coming out of the ground are variable, so that one spot will differ from another a few steps away. The flow of radon gas out of the ground closely tracks the flow of methane, a damaging GHG with both natural and human origins.

When radon emissions from the ground increase, so do carbon dioxide and methane levels. “Radon is more homogenous,” said Röttger, “and there is a close correlation between radon and these greenhouse gases.” The research project studying this is called traceRadon.
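One established way to exploit this kind of correlation is often called the radon tracer method: a known radon flux is scaled by the slope of GHG concentration against radon concentration. The Python sketch below is an illustration under stated assumptions, not a description of traceRadon's actual procedure. The function names and the synthetic data are hypothetical, and radon's radioactive decay during transport is ignored.

```python
def regression_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0:
        raise ValueError("radon concentrations must not all be equal")
    return sxy / sxx


def tracer_flux(radon_flux, radon_conc, ghg_conc):
    """Estimate a GHG flux by scaling the radon flux with the GHG/radon slope."""
    return radon_flux * regression_slope(radon_conc, ghg_conc)


# Synthetic, made-up concentrations as the two gases build up together in still air.
radon_conc = [2.0, 4.0, 6.0, 8.0]                 # Bq per cubic meter
methane_conc = [1310.0, 1330.0, 1350.0, 1370.0]   # micrograms per cubic meter

radon_flux = 0.02  # Bq per square meter per second (assumed known)

methane_flux = tracer_flux(radon_flux, radon_conc, methane_conc)
print(f"estimated methane flux: {methane_flux:.3f} micrograms per square meter per second")
```

The estimate is only as good as the radon flux and concentration values fed into it, which is why accurate radon measurements matter here.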

Radon is measured via its radioactivity, but because of its low concentrations it is very difficult to measure. “Several devices will not work at all, so you will get a zero reading because you are below the detection limit,” said Röttger.

Wetland rewetting

Measuring the escape of radon enables scientists to model the rate of emissions over a landscape. This can be useful for measuring the effects of climate mitigation measures. For example, research indicates that the rapid rewetting of drained peatland stores greenhouse gases and mitigates climate change.

But if you go to the trouble of rewetting a large marshland, “you will want to know whether this worked,” said Röttger. “If it works for these GHGs, we should see less radon coming out as well. If we don’t, then it didn’t work.”

With more precise calibration, the project will improve radon measurements over large geographical areas. This can also be used to improve radiological early warning systems in a European monitoring network known as the European Radiological Data Exchange Platform (EURDEP).

“We have lots of false alarms (because of radon) and we might even miss an alarm because of that,” said Röttger. “We can make this network better, which is increasingly important for radiological emergency management support.”

Given the intensity of the climate crisis, it is crucial to present reliable data to policymakers, added Röttger. This will help greatly in addressing climate change, arguably the biggest threat humankind has faced since the cubit was first used as a measure in ancient Egypt thousands of years ago.