In a new study in Nature Machine Intelligence, researchers Bojian Yin and Sander Bohté from Human Brain Project (HBP) partner CWI, the Dutch national research institute for mathematics and computer science, demonstrate a significant step towards artificial intelligence that can be used in local devices like smartphones and in VR-like applications while protecting privacy.

They show how brain-like neurons combined with novel learning methods enable the training of fast and energy-efficient spiking neural networks on a large scale. Wearable AI, speech recognition, and augmented reality are all potential applications.

Although artificial neural networks are the backbone of the current AI revolution, they are only partly inspired by networks of real, biological neurons, like those in our brains. The brain, however, is a much larger network, uses far less energy, and can respond extremely quickly to external events. Spiking neural networks are a special kind of neural network that work more like biological neurons: the neurons of our nervous system communicate by exchanging electrical pulses, and they do so only sparingly.
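To make the idea of sparse, pulse-based communication concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a standard textbook model. It is an illustration only and not necessarily the neuron model used in the study; the function name `simulate_lif` and the `decay` and `threshold` values are assumptions chosen for the example.

```python
def simulate_lif(inputs, decay=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron (illustrative values).

    The membrane potential leaks a little at every step, adds the incoming
    current, and emits a spike (1) only when it crosses the threshold.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = decay * potential + current  # leak, then integrate
        if potential >= threshold:
            spikes.append(1)   # fire a pulse
            potential = 0.0    # reset after the spike
        else:
            spikes.append(0)   # stay silent
    return spikes


# A weak constant input makes the neuron fire only occasionally.
spikes = simulate_lif([0.3] * 20)
print(spikes, "->", sum(spikes), "spikes in", len(spikes), "steps")
```

With this weak constant input the neuron is silent on most time steps, which is the property that makes spiking networks attractive for low-power hardware.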

Implemented in chips, known as neuromorphic hardware, such spiking neural networks hold the promise of bringing AI programs closer to users, on their own devices. These local solutions offer advantages in privacy, robustness, and responsiveness. Speech recognition in toys and appliances, health care monitoring, drone navigation, and local surveillance are just a few examples.

Mimicking the learning brain

Spiking neural networks, like conventional artificial neural networks, need to be trained to perform such tasks well. However, the way such networks communicate poses serious challenges. “The algorithms needed for this require a lot of computer memory, so we can only train small network models, mostly for smaller tasks. This holds back many practical AI applications so far,” says Sander Bohté of CWI’s Machine Learning group. In the Human Brain Project, he works on architectures and learning methods for hierarchical cognitive processing.

The learning aspect of these algorithms is a major challenge, and they cannot match our brain’s learning capacity. The brain can easily learn immediately from new experiences, changing connections or even making new ones. In addition, the brain uses less energy and requires fewer examples to learn something. “We wanted to develop something closer to the way our brain learns,” says Bojian Yin.

Yin explains how this works: “When you make a mistake during a driving lesson, you learn from it immediately. You correct your behaviour right away, not an hour later. You learn, as it were, while taking in the new information. We wanted to mimic that by giving each neuron of the neural network a piece of information that is constantly updated. This way, the network learns how the information changes and doesn’t have to remember all of the previous information. This is a significant departure from current networks, which have to work with all the previous changes. The current way of learning requires enormous computing power and a lot of memory and energy.”
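The contrast Yin describes, learning from a constantly updated piece of information instead of a stored history, can be illustrated with a toy example. The sketch below is not the algorithm from the paper; it assumes a single non-spiking leaky unit, h_t = a * h_{t-1} + w * x_t, with a squared-error loss at every step, and all function names are hypothetical. The first function keeps only a running trace per weight; the second stores every past state and works backwards through them. With the weight held fixed, both give the same gradient.

```python
def online_gradient(w, a, xs, ys):
    """Accumulate dLoss/dw step by step, keeping only two running numbers."""
    h = 0.0      # current state of the unit
    trace = 0.0  # running dh/dw, updated at every step
    grad = 0.0
    for x, y in zip(xs, ys):
        trace = a * trace + x    # how the current state depends on w
        h = a * h + w * x        # update the state itself
        grad += (h - y) * trace  # this step's error times the trace
    return grad


def stored_history_gradient(w, a, xs, ys):
    """Same gradient, computed backwards over a stored history."""
    hs = []
    h = 0.0
    for x in xs:
        h = a * h + w * x
        hs.append(h)             # memory grows with the sequence length
    grad = 0.0
    carry = 0.0                  # dLoss/dh flowing back from later steps
    for t in reversed(range(len(xs))):
        carry = (hs[t] - ys[t]) + a * carry
        grad += carry * xs[t]
    return grad


xs = [0.5, -1.0, 2.0, 0.0, 1.5]
ys = [0.0, 0.5, 1.0, 1.0, 0.5]
print(online_gradient(0.8, 0.9, xs, ys))
print(stored_history_gradient(0.8, 0.9, xs, ys))
```

The online version needs the same small amount of memory no matter how long the input sequence is, while the stored-history version needs memory proportional to the number of time steps. In this toy setting the two agree up to floating-point rounding; for real spiking networks, online methods generally rely on approximations.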

6 million neurons

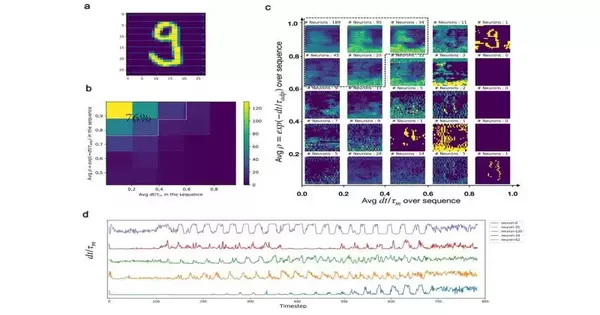

The new online learning algorithm makes it possible to learn directly from the data, enabling much larger spiking neural networks. Together with researchers from TU Eindhoven and research partner Holst Centre, Bohté and Yin demonstrated this in a system designed for recognizing and locating objects. Yin shows a video of a busy street in Amsterdam: the underlying spiking neural network, SPYv4, has been trained so that it can distinguish cyclists, pedestrians, and cars and indicate exactly where they are.

“Previously, we could train neural networks with up to 10,000 neurons; now, we can do the same fairly easily for networks with more than 6 million neurons. With this, we can train spiking neural networks like our SPYv4.”

And where does all of this lead? With access to such powerful AI solutions based on spiking neural networks, chips are being developed that can run these AI programs at very low power. These chips will eventually appear in many smart devices, such as hearing aids and augmented or virtual reality glasses.

More information: Bojian Yin, Accurate online training of dynamical spiking neural networks through Forward Propagation Through Time, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00650-4. www.nature.com/articles/s42256-023-00650-4