In physics, you can’t get something for nothing. Even so, by thinking like a video gamer, and with a little help from a demon, it may be possible to improve the energy efficiency of complex systems such as data centers.

In computer simulations, Stephen Whitelam of the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) trained neural networks to make nanosystems, tiny machines about the size of molecules, operate with greater energy efficiency. Neural networks are a type of machine learning model loosely inspired by the way the human brain processes information.

The simulations also showed how the learned protocols could draw heat from the systems by constantly measuring them to find the most energy-efficient operations.

“We can get energy out of the system, or we can store work in the system,” Whitelam said.

This insight could prove useful, for example, in operating very large systems such as computer data centers. Banks of computers generate a great deal of heat that must be removed, which takes still more energy, to keep the delicate electronics from being damaged.

Whitelam carried out the study at Berkeley Lab’s Molecular Foundry, a DOE Office of Science user facility. Asked where the idea came from, he said, “People had used techniques in the machine learning literature to play Atari video games that seemed naturally suited to materials science.” His work is described in a paper published in Physical Review X.

Inspiration from Pac-Man and Maxwell’s Demon

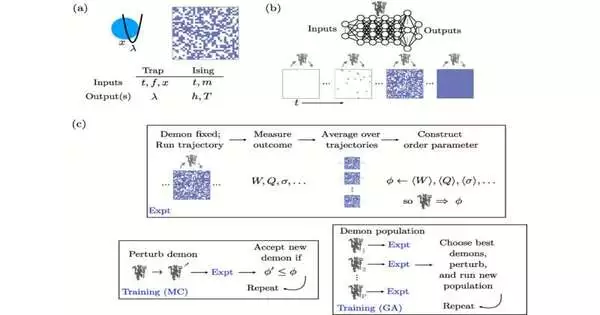

In a video game like Pac-Man, he explained, the goal of machine learning is to pick the right time for a particular action (up, down, left, right, and so on) to be performed. Over time, the algorithm “learns” which moves are best to make, and when, to achieve a high score. The same algorithms can work for nanoscale systems.

Whitelam’s simulations are also something of an answer to Maxwell’s Demon, an old physics thought experiment. Briefly, in 1867 the physicist James Clerk Maxwell proposed a box of gas with a massless “demon” controlling a trap door in the middle. The demon would open the door to let faster-moving gas molecules pass to one side of the box and slower-moving molecules to the other.

Eventually, with all the molecules sorted this way, the “slow” side of the box would be cold and the “fast” side would be hot, reflecting the energy of the molecules on each side.
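The sorting rule is simple enough to simulate. The toy sketch below is an illustration only, not taken from Whitelam’s paper: it assumes a gas whose molecule speeds are drawn from a half-normal distribution (a stand-in for a real gas), molecules that never collide, and a hypothetical speed cutoff `threshold`; the function name `demon_sort` is likewise illustrative. The demon measures one molecule at a time and opens the door only for fast molecules heading right or slow ones heading left. The mean kinetic energy of each side, used here as a proxy for temperature, then pulls apart.

```python
import random


def demon_sort(n=2000, steps=20000, threshold=1.0, seed=0):
    """Toy Maxwell's demon: sort molecules by speed through a trap door.

    Returns (mean kinetic energy on the left, mean kinetic energy on the right,
    number of measurements the demon made). Arbitrary units, mass = 1.
    """
    rng = random.Random(seed)
    # Assumed toy gas: speeds are |N(0, 1)|; each molecule starts on a random side.
    speeds = [abs(rng.gauss(0.0, 1.0)) for _ in range(n)]
    sides = [rng.choice("LR") for _ in range(n)]
    measurements = 0
    for _ in range(steps):
        i = rng.randrange(n)       # a random molecule reaches the door
        measurements += 1          # the demon has to measure its speed first
        fast = speeds[i] > threshold
        if sides[i] == "L" and fast:
            sides[i] = "R"         # let fast molecules through to the right
        elif sides[i] == "R" and not fast:
            sides[i] = "L"         # let slow molecules through to the left

    def mean_energy(side):
        energies = [0.5 * v * v for v, s in zip(speeds, sides) if s == side]
        return sum(energies) / len(energies) if energies else 0.0

    return mean_energy("L"), mean_energy("R"), measurements


cold, hot, measurements = demon_sort()
print(f"left (slow) side: {cold:.3f}, right (fast) side: {hot:.3f}, "
      f"measurements: {measurements}")
```

Every opening of the door depends on a measurement, which is where the energy cost of information enters the story.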

The system would then act as a heat engine, Whitelam said. Crucially, however, Maxwell’s Demon does not break the thermodynamic rule against getting something for nothing, because information is equivalent to energy: measuring the position and speed of the molecules in the box costs more energy than the resulting heat engine produces.

Checking the refrigerator

And heat engines can be useful. Refrigerators provide a good analogy, Whitelam said. As the system runs, the food inside stays cold, which is the desired outcome, even though the refrigerator’s motor makes the back of the appliance hot.

In Whitelam’s simulations, the machine learning protocol plays the role of the demon. In the course of optimization, it converts information drawn from the modeled system into energy in the form of heat.

In one simulation, Whitelam unleashed the demon on the process of dragging a nanoscale bead through water. He modeled a so-called optical trap, in which laser beams, acting like tweezers of light, can hold a bead and move it around.

Unleashing the demon on a nanoscale system

“The name of the game is: Go from here to there with as little work done on the system as possible,” Whitelam said. The bead jiggles under natural fluctuations called Brownian motion as water molecules bombard it. Whitelam showed that if these fluctuations can be measured, the bead can be moved at the most energy-efficient moment.
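The bead-dragging game can be sketched with a standard overdamped Langevin model of a bead in a harmonic trap. This is a minimal sketch under stated assumptions, not Whitelam’s code or his learned protocol: units are chosen so that the thermal energy k_B T, the trap stiffness `K`, and the drag coefficient `GAMMA` all equal 1, the bead starts at the trap centre, and the “demon” is a hypothetical hand-written feedback rule standing in for the trained neural network. That rule measures the bead and jumps the trap forward to wherever the bead has just fluctuated. Work is counted as the change in trap energy each time the trap moves. Dragging at constant speed costs positive work on average, while every move made by the feedback rule has negative work, so energy is drawn out of the bead’s Brownian jiggling.

```python
import math
import random

K = 1.0      # trap stiffness (assumed; energies in units of k_B T)
GAMMA = 1.0  # drag coefficient of the bead in water (assumed)
KT = 1.0     # thermal energy k_B T
DT = 0.01    # simulation time step


def potential(x, lam):
    """Harmonic optical-trap energy of a bead at x with the trap centred at lam."""
    return 0.5 * K * (x - lam) ** 2


def langevin_step(x, lam, rng):
    """One overdamped Langevin (Euler-Maruyama) step: trap force plus Brownian kicks."""
    drift = -K * (x - lam) / GAMMA * DT
    noise = math.sqrt(2.0 * KT / GAMMA * DT) * rng.gauss(0.0, 1.0)
    return x + drift + noise


def drag_constant_speed(distance, duration, rng):
    """Naive protocol: move the trap at constant speed, ignoring the bead."""
    x, lam, work = 0.0, 0.0, 0.0
    steps = int(round(duration / DT))
    for _ in range(steps):
        new_lam = lam + distance / steps
        work += potential(x, new_lam) - potential(x, lam)  # work done moving the trap
        lam = new_lam
        x = langevin_step(x, lam, rng)
    return work


def drag_with_feedback(distance, rng, max_steps=100_000):
    """Hypothetical demon: move the trap only when the bead has fluctuated ahead of it."""
    x, lam, work = 0.0, 0.0, 0.0
    for _ in range(max_steps):
        if lam >= distance:
            break
        if x > lam:                                # measurement: bead is ahead of the trap
            new_lam = min(x, distance)             # jump to the bead, never past the target
            work += potential(x, new_lam) - potential(x, lam)  # always negative here
            lam = new_lam
        x = langevin_step(x, lam, rng)
    if lam < distance:                             # fallback if the step cap is reached
        work += potential(x, distance) - potential(x, lam)
    return work


def mean(values):
    return sum(values) / len(values)


rng = random.Random(1)
naive = mean([drag_constant_speed(5.0, 5.0, rng) for _ in range(200)])
demon = mean([drag_with_feedback(5.0, rng) for _ in range(200)])
print(f"constant-speed drag: {naive:.2f} k_B T, feedback drag: {demon:.2f} k_B T")
```

The sketch does not charge anything for the measurements themselves; as with Maxwell’s original demon, that cost is what keeps the scheme from getting something for nothing.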

He stated, “Here we’re showing that we can train a neural-network demon to do something similar to Maxwell’s thought experiment but using an optical trap.”

Cooling computers

Whitelam also extended the idea to microelectronics and computation. Using the machine learning protocol, he simulated flipping the state of a nanomagnetic bit between 0 and 1, a basic computing operation that erases or copies information.
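For a sense of scale, a standard result that the article does not mention, Landauer’s principle, puts a lower bound of k_B T ln 2 on the heat released when one bit of information is erased at temperature T. The short calculation below evaluates that bound at an assumed room temperature of 300 K; the function name `landauer_limit` is illustrative. The bound is a theoretical floor and says nothing about how much any particular device actually dissipates.

```python
import math

K_B = 1.380649e-23    # Boltzmann constant in J/K (exact in the 2019 SI)
EV = 1.602176634e-19  # joules per electronvolt (exact in the 2019 SI)


def landauer_limit(temperature_k: float) -> float:
    """Minimum heat in joules released when erasing one bit at temperature_k kelvin."""
    return K_B * temperature_k * math.log(2)


q = landauer_limit(300.0)  # assumed room temperature
print(f"{q:.3e} J per bit = {q / EV:.4f} eV per bit")
# prints: 2.871e-21 J per bit = 0.0179 eV per bit
```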

“Repeat this, again and again. Eventually, your demon will ‘learn’ how to flip the bit so that it absorbs ambient heat,” he said. Returning to the refrigerator analogy, he noted that you could build a computer that cools down as it runs, sending the heat elsewhere in your data center.

Whitelam said the simulations serve as a testbed for understanding concepts and ideas. “And here the idea is just showing that you can perform these protocols, either with little energy expense or with energy sucked in at the cost of going somewhere else, using measurements that could apply in a real-life experiment,” he said.

More information: Stephen Whitelam, Demon in the Machine: Learning to Extract Work and Absorb Entropy from Fluctuating Nanosystems, Physical Review X (2023). DOI: 10.1103/PhysRevX.13.021005