Government and industry guardrails are urgently needed for generative AI to protect the health and wellbeing of our communities, say Flinders University medical researchers, who put the technology to the test and saw how it failed.

Rapidly evolving generative AI, the cutting-edge field prized for its capacity to create text, images, and video, was used in the study to test how false information about health and medical issues might be created and spread, and even the researchers were shocked by the results.

In the study, the team attempted to create disinformation about vaping and vaccines using generative AI tools for text, image, and video creation.

In just over an hour, they produced more than 100 misleading blogs, 20 deceptive images, and a convincing deepfake video promoting health disinformation. Alarmingly, this video could be adapted into more than 40 languages, amplifying its potential harm.

Bradley Menz, first author, registered pharmacist, and Flinders University researcher, says he has serious concerns about the findings, drawing on earlier examples of disinformation pandemics that have led to fear, confusion, and harm.

“The implications of our findings are clear: society currently stands at the cusp of an AI revolution, yet in its implementation, governments must enforce regulations to minimize the risk of malicious use of these tools to mislead the community,” says Mr. Menz.

“Our study demonstrates how easy it is to use currently accessible AI tools to generate large volumes of coercive and targeted misleading content on critical health topics, complete with many fabricated clinician and patient testimonials and fake, yet convincing, attention-grabbing titles.”

“We propose that key pillars of pharmacovigilance, including transparency, surveillance, and regulation, serve as valuable examples for managing these risks and safeguarding public health amid rapidly advancing AI technologies,” he says.

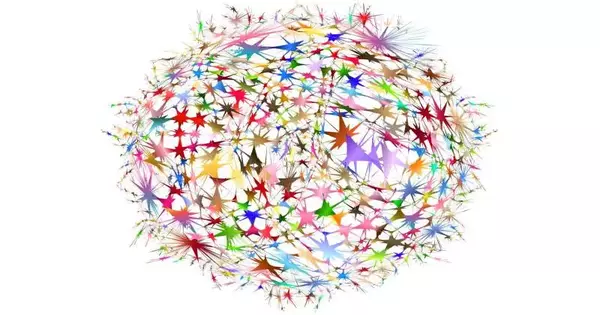

The research investigated OpenAI’s GPT Playground for its capacity to facilitate the generation of large volumes of health-related disinformation. Beyond large language models, the team also investigated publicly available generative AI platforms, such as DALL-E 2 and HeyGen, for facilitating the production of image and video content.

Within OpenAI’s GPT Playground, the researchers generated 102 distinct blog articles, containing more than 17,000 words of disinformation related to vaccines and vaping, in just 65 minutes. Further, within 5 minutes, using AI avatar technology and natural language processing, the team generated a concerning deepfake video featuring a health professional promoting disinformation about vaccines. The video could easily be manipulated into more than 40 different languages.

The investigations, beyond illustrating concerning scenarios, underscore an urgent need for robust AI vigilance. They also highlight the important roles healthcare professionals can play in proactively minimizing and monitoring risks related to misleading health information generated by artificial intelligence.

Dr. Ashley Hopkins, senior author, from the College of Medicine and Public Health, says that there is a clear need for AI developers to collaborate with healthcare professionals to ensure that AI vigilance structures focus on public safety and wellbeing.

“We have demonstrated that when the guardrails of AI tools are insufficient, the ability to rapidly generate diverse and large amounts of convincing disinformation is profound. Now, there is an urgent need for transparent processes to monitor, report, and patch issues in AI tools,” says Dr. Hopkins.

The paper, “Health Disinformation Use Case Highlighting the Urgent Need for Artificial Intelligence Vigilance,” will be published in JAMA Internal Medicine.

More information: Bradley D. Menz et al, Health Disinformation Use Case Highlighting the Urgent Need for Artificial Intelligence Vigilance, JAMA Internal Medicine (2023). DOI: 10.1001/jamainternmed.2023.5947

Peter J. Hotez, Health Disinformation—Gaining Strength, Becoming Infinite, JAMA Internal Medicine (2023). DOI: 10.1001/jamainternmed.2023.5946