Human brains process loads of information. When wine aficionados taste a new wine, neural networks in their brains process an array of data from each sip. Synapses between their neurons fire, weighing the importance of each bit of data (acidity, fruitiness, bitterness) before passing it along to the next layer of neurons in the network. As the information flows, the brain parses out the type of wine.

Scientists want artificial intelligence (AI) systems to be sophisticated data connoisseurs too, so they design computer versions of neural networks to process and analyze information. AI is catching up to the human brain in many tasks, but it typically consumes far more energy to do the same things. Our brains make these calculations while consuming an estimated average of 20 watts of power. An AI system can use many times that amount. Its hardware can also lag, making AI slower, less efficient and less effective than our brains. A large field of AI research is looking for less energy-intensive alternatives.

In a study published in the journal Physical Review Applied, researchers at the National Institute of Standards and Technology (NIST) and their collaborators have developed a new type of hardware for AI that could use less energy and operate more quickly, and it has already passed a virtual wine-tasting test.

As with conventional computing systems, AI involves both physical hardware circuits and software. AI system hardware often contains a large number of conventional silicon chips that are energy-hungry as a group. Training one state-of-the-art commercial natural language processor, for example, consumes roughly 190 megawatt hours (MWh) of electrical energy, about the amount that 16 people in the U.S. use in an entire year. And that's before the AI does a single day of the work it was trained for.
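The energy figures quoted here can be put side by side with a little arithmetic. The sketch below uses only numbers given in this article (190 MWh, 16 people, and the 20-watt average for a human brain) and assumes a 365-day year; it is a rough illustration under those assumptions, not a measurement.

```python
# Back-of-the-envelope comparison using the figures quoted in the article.
HOURS_PER_YEAR = 365 * 24          # 8760 h (assumption: ignores leap years)

training_energy_mwh = 190.0        # quoted energy to train one language model
people = 16                        # quoted number of U.S. residents
brain_power_w = 20.0               # quoted average power draw of a human brain

# Implied annual electricity use per person.
per_person_mwh = training_energy_mwh / people

# Energy a 20 W brain uses in one year, converted from Wh to MWh.
brain_year_mwh = brain_power_w * HOURS_PER_YEAR / 1e6

# How many "brain-years" of energy the training run corresponds to.
brain_years = training_energy_mwh / brain_year_mwh

print(f"{per_person_mwh:.3f} MWh per person per year")
print(f"{brain_year_mwh:.4f} MWh per brain-year")
print(f"{brain_years:.0f} brain-years of energy")
```

On these assumptions, one training run uses roughly as much energy as a human brain would over a thousand years.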

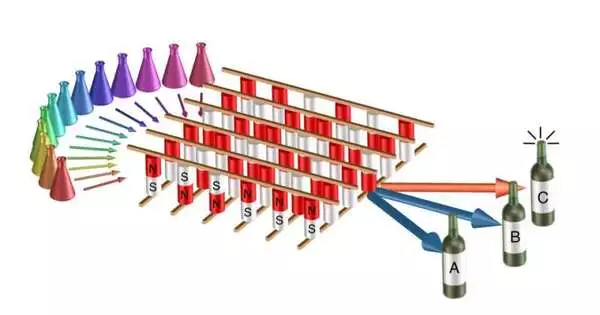

A less energy-intensive approach would be to use other kinds of hardware to build AI's neural networks, and research teams are searching for alternatives. One promising device is the magnetic tunnel junction (MTJ), which is good at the kinds of math a neural network uses and needs only a few sips of energy. Other novel devices based on MTJs have been shown to use several times less energy than their traditional hardware counterparts. MTJs can also operate more quickly because they store data in the same place they do their computation, unlike conventional chips that store data elsewhere. Perhaps best of all, MTJs are already commercially important. They have served as the read-write heads of hard disk drives for years and are now being used as novel computer memories.
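To make "store data in the same place they do their computation" more concrete, here is a minimal sketch of an idealized crossbar array, a widely studied layout for in-memory computing with resistive devices. It assumes each MTJ behaves as a resistor with one of two conductances (parallel or antiparallel magnetization), so each cell naturally holds a binary weight. The conductance values and function names are hypothetical, wire resistance and sneak currents are ignored, and this is not the circuit used in the NIST study.

```python
# Idealized crossbar: each cell is an MTJ in one of two resistance states.
# Conductance values below are hypothetical, chosen only for illustration.
G_PARALLEL = 1.0 / 1_000.0      # low-resistance (parallel) state: 1 kOhm, in siemens
G_ANTIPARALLEL = 1.0 / 2_000.0  # high-resistance (antiparallel) state: 2 kOhm


def conductance(bit: int) -> float:
    """Map a stored binary weight to a conductance (1 -> parallel, 0 -> antiparallel)."""
    return G_PARALLEL if bit else G_ANTIPARALLEL


def column_currents(voltages, weight_bits):
    """Return the current collected on each column wire.

    Ohm's law gives each cell's current V_i * G_ij, and Kirchhoff's current
    law sums those currents along each column, so the array computes a
    weighted sum right where the weights are stored.
    """
    n_cols = len(weight_bits[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, weight_bits):
        for j, bit in enumerate(row):
            currents[j] += v * conductance(bit)
    return currents


# Three input rows (e.g. scaled features applied as read voltages), two output columns.
inputs = [0.1, 0.2, 0.05]   # volts
weights = [[1, 0],
           [0, 1],
           [1, 1]]
print([f"{i * 1e6:.1f} uA" for i in column_currents(inputs, weights)])
```

Each column current is a weighted sum of the input voltages, the multiply-and-add operation at the heart of a neural-network layer. A conventional chip would instead fetch the weights from separate memory before multiplying.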

Although the researchers are confident in the energy efficiency of MTJs based on their past performance in hard drives and other devices, energy consumption was not the focus of the present study. They first needed to know whether an array of MTJs could even work as a neural network. To find out, they took it for a virtual wine tasting.

Researchers with NIST’s Hardware for AI program and their University of Maryland colleagues built and programmed a very simple neural network from MTJs provided by their collaborators at Western Digital’s Research Center in San Jose, California.

Just like any wine connoisseur, the AI system needed to train its virtual palate. The team trained the network using 148 of the wines from a dataset of 178 made from three types of grapes. Each virtual wine had 13 characteristics to consider, such as alcohol level, color, flavonoids, ash, alkalinity and magnesium. Each characteristic was assigned a value between 0 and 1 for the network to consider when distinguishing one wine from the others.
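The article does not say how each characteristic was mapped to a value between 0 and 1. Min-max scaling is one common way to do it, so the sketch below assumes that technique, along with a random split of 178 records into 148 for training and the rest held out. The toy numbers are made up for illustration and are not drawn from the actual wine dataset; the helper names are hypothetical.

```python
import random


def min_max_scale(column):
    """Rescale a list of numbers linearly onto [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:                      # constant feature: map every value to 0
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


def scale_features(rows):
    """Apply min-max scaling to each feature (column) of a row-major table."""
    columns = list(zip(*rows))
    scaled_columns = [min_max_scale(list(c)) for c in columns]
    return [list(r) for r in zip(*scaled_columns)]


def split(rows, n_train, seed=0):
    """Shuffle a copy of rows and split it into n_train training rows and the rest."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Toy values (not from the real dataset): two features, e.g. alcohol and magnesium.
toy = [[12.0, 100.0], [14.0, 80.0], [13.0, 120.0]]
print(scale_features(toy))   # each column now spans 0..1

# With 178 wines and 148 used for training, 30 are held out.
wines = list(range(178))     # stand-ins for the 178 wine records
train, held_out = split(wines, 148)
print(len(train), len(held_out))
```

Scaling each feature separately keeps a large-valued characteristic such as magnesium from swamping a small-valued one such as ash when the network weighs them.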

“It’s a virtual wine tasting, but the tasting is done by analytical equipment that is more efficient but less fun than tasting it yourself,” said NIST physicist Brian Hoskins.

Then it was given a virtual wine-tasting test on the full dataset, which included 30 wines it hadn’t seen before. The system passed with a 95.3% success rate. Out of the 30 wines it hadn’t trained on, it made only two mistakes. The researchers considered this a good sign.
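The two accuracy figures describe different sets: 95.3% is the success rate on the full dataset, while the two mistakes refer only to the 30 wines the network had not seen. A quick calculation gives the accuracy on those unseen wines alone; the helper name is hypothetical.

```python
def accuracy_percent(n_correct: int, n_total: int) -> float:
    """Share of correct identifications, as a percentage."""
    return 100.0 * n_correct / n_total


held_out_total = 30   # wines the network had not trained on (from the article)
held_out_errors = 2   # mistakes on those wines (from the article)

print(f"{accuracy_percent(held_out_total - held_out_errors, held_out_total):.1f}%")
```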

“Getting 95.3% tells us that this is working,” said NIST physicist Jabez McClelland.

The point isn’t to build an AI sommelier. Rather, this early success shows that an array of MTJ devices could potentially be scaled up and used to build new AI systems. While the amount of energy an AI system uses depends on its components, using MTJs as synapses could drastically reduce its energy use, possibly by half or more, which could enable lower power use in applications such as “smart” clothing, miniature robots, or sensors that process data at the source.

“It is likely that significant energy savings over conventional software-based approaches will be realized by implementing large neural networks using this kind of array,” said McClelland.

More information: Jonathan M. Goodwill et al, Implementation of a Binary Neural Network on a Passive Array of Magnetic Tunnel Junctions, Physical Review Applied (2022). DOI: 10.1103/PhysRevApplied.18.014039