Many computer systems that people interact with require knowledge about certain aspects of the world, or models, to function. These systems have to be trained, and often need to learn to recognize objects from video or image data. This data frequently contains superfluous content that reduces the accuracy of the models. So researchers found a way to incorporate natural hand gestures into the teaching process. This way, users can teach machines about objects more easily, and the machines can also learn more effectively.

You’ve probably heard the term machine learning before, but are you familiar with machine teaching? Machine learning is what happens behind the scenes when a computer uses input data to form models that can later be used to perform useful functions. Machine teaching is the somewhat less explored part of the process, which deals with how the computer gets its input data in the first place.

In the case of visual systems, for example ones that can recognize objects, people need to show objects to a computer so it can learn about them. But there are drawbacks to the way this is typically done, which researchers from the University of Tokyo’s Interactive Intelligent Systems Laboratory sought to improve.

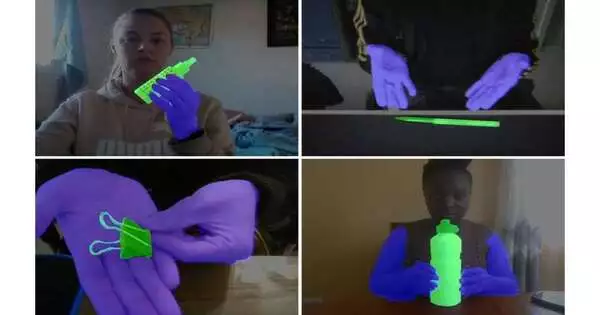

The model created with HuTics allows LookHere to use gestures and hand positions to provide extra context for the system to pick out and identify the object, highlighted in red. Credit: 2022 Yatani and Zhou

“In a typical object training scenario, people can hold an object up to a camera and move it around so a computer can analyze it from all angles to build up a model,” said graduate student Zhongyi Zhou.

“However, machines lack our evolved ability to isolate objects from their surroundings, so the models they make can inadvertently include unnecessary information from the backgrounds of the training images. This often means users must spend time refining the generated models, which can be a rather technical and time-consuming task. We thought there must be a better way of doing this that is better for both users and computers, and with our new system, LookHere, I believe we have found it.”

Zhou, working with Associate Professor Koji Yatani, created LookHere to address two fundamental problems in machine teaching. The first is teaching efficiency: minimizing the time users must spend and the technical knowledge they need. The second is learning efficiency: how to ensure that machines get better learning data to build their models from.

LookHere achieves both by doing something novel and surprisingly intuitive. It incorporates users’ hand gestures into the way an image is processed before the machine incorporates it into its model, drawing on a dataset known as HuTics. For example, a user can point to an object or present it to the camera in a way that emphasizes its significance compared with the other elements in the scene. This is exactly how people might show objects to each other. And because the added emphasis on what is actually important in the image eliminates extraneous details, the computer gains better input data for its models.
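The idea of keeping only the emphasized object before an image reaches the model can be illustrated with a small sketch. This is not LookHere's actual code: it assumes an object mask has already been obtained somehow (in LookHere, from a gesture-aware model), and the function name `keep_object_only`, the nested-list image layout, and the black fill color are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # one RGB pixel
Image = List[List[Pixel]]      # rows of pixels
Mask = List[List[bool]]        # True where the pixel belongs to the object


def keep_object_only(image: Image, object_mask: Mask,
                     fill: Pixel = (0, 0, 0)) -> Image:
    """Return a copy of `image` in which every pixel outside the mask is `fill`.

    Hypothetical helper: it only shows the effect of discarding background
    pixels, not how the mask itself is predicted.
    """
    same_height = len(image) == len(object_mask)
    same_widths = all(len(row) == len(mask_row)
                      for row, mask_row in zip(image, object_mask))
    if not (same_height and same_widths):
        raise ValueError("image and mask must have the same dimensions")
    return [
        [pixel if keep else fill for pixel, keep in zip(row, mask_row)]
        for row, mask_row in zip(image, object_mask)
    ]


# Toy 3x3 frame: a uniform grey scene with the "object" in the centre pixel.
GREY: Pixel = (200, 200, 200)
frame: Image = [[GREY] * 3 for _ in range(3)]
mask: Mask = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]
cleaned = keep_object_only(frame, mask)
```

In this toy frame only the centre pixel survives, and the rest is replaced by the fill color. A real pipeline would more likely use an array library and a learned segmentation model, but the principle is the same: background pixels never reach the training step.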

“The idea is quite straightforward, but the implementation was very challenging,” said Zhou. “Everyone is different, and there is no standard set of hand gestures. So, we first collected 2,040 example videos of 170 people presenting objects to the camera into HuTics. These assets were annotated to mark what was part of the object and which parts of the image were just the person’s hands.

“LookHere was trained with HuTics, and when compared with other object recognition approaches, can better determine which parts of an incoming image should be used to build its models. To make sure it’s as accessible as possible, users can use their smartphones to work with LookHere, and the actual processing is done on remote servers. We also released our source code and data set so that others can build upon it if they wish.”
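One common way to quantify how well a system determines "which parts of an image" belong to an object is intersection over union (IoU) between a predicted mask and a hand-annotated one. The sketch below assumes that metric purely for illustration; the article does not state which measure the researchers used, and the function name `mask_iou` and the toy masks are hypothetical.

```python
from typing import List

Mask = List[List[bool]]  # True where the pixel is marked as part of the object


def mask_iou(predicted: Mask, annotated: Mask) -> float:
    """Intersection over union of two equally sized binary masks.

    Returns 1.0 when both masks are empty, since they then agree completely.
    """
    intersection = 0
    union = 0
    for pred_row, anno_row in zip(predicted, annotated):
        for pred, anno in zip(pred_row, anno_row):
            intersection += int(pred and anno)
            union += int(pred or anno)
    return intersection / union if union else 1.0


# Toy example: the prediction covers the left two columns of the top rows,
# while the annotation covers the right two columns of the same rows.
predicted: Mask = [
    [True, True, False],
    [True, True, False],
    [False, False, False],
]
annotated: Mask = [
    [False, True, True],
    [False, True, True],
    [False, False, False],
]
score = mask_iou(predicted, annotated)  # 2 shared pixels out of 6 marked
```

A score closer to 1 means the predicted object region matches the annotation more closely. An annotated dataset such as HuTics is what makes this kind of comparison possible.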

Factoring in the reduced demand on users’ time that LookHere affords, Zhou and Yatani found that it can build models multiple times faster than some existing systems. At present, LookHere deals with teaching machines about physical objects, and it uses only visual data for input. But in theory, the concept can be expanded to other kinds of input data, such as sound or scientific data. Models made from that data would benefit from similar improvements in accuracy.

The research was published as part of the 35th Annual ACM Symposium on User Interface Software and Technology.

More information: Zhongyi Zhou et al, Gesture-aware Interactive Machine Teaching with In-situ Object Annotations, The 35th Annual ACM Symposium on User Interface Software and Technology (2022). DOI: 10.1145/3526113.3545648