The ability to match a face to a voice, connecting the sight and sound of speech, in early childhood is crucial for later language development.

This ability, known as intersensory processing, is necessary for learning new words. A recent study published in the journal Infancy found that success at intersensory processing at 6 months of age predicts vocabulary and language outcomes at 18 months, 2 years, and 3 years.

“Adults are very good at this, but infants need to learn how to connect what they see and hear. It’s a tremendous job, and they do it very early in their development,” said lead author Elizabeth V. Edgar, who conducted the study as a psychology doctoral student at FIU and is currently a postdoctoral fellow at the Yale Child Study Center. “Our findings show that intersensory processing makes its own independent contribution to language, over and above other established predictors, including parent language input and socioeconomic status.”

Over three years, Edgar and a team at FIU psychology professor Lorraine E. Bahrick’s Infant Development Lab tested the speed and accuracy of intersensory processing in 103 infants between the ages of 3 months and 3 years, using the Intersensory Processing Efficiency Protocol (IPEP). Bahrick, co-investigator James Torrence Todd, an FIU research assistant professor of psychology, and others created this tool.

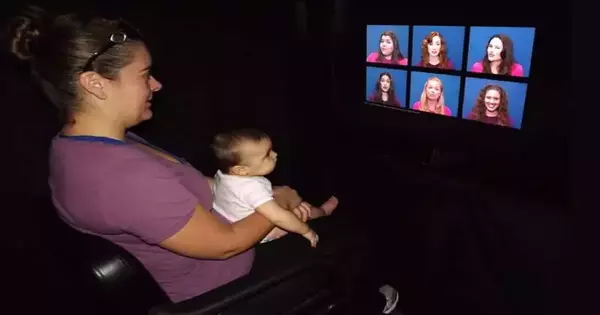

The IPEP consists of a series of brief video trials designed to present distraction, simulating the “noisiness” of picking out a speaker from a crowd. In each trial, six women’s faces are shown simultaneously in separate boxes on the screen, and all of the women appear to be speaking.

In each trial, however, the soundtrack matches only one of the women. Using an eye tracker that follows pupil movement, the researchers were able to determine whether the babies made the match and how long they watched the matching face.
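For readers curious how measures like these could be derived from eye-tracking data, the following is a minimal sketch, not the study's actual scoring procedure. It assumes gaze samples arrive as screen coordinates at a fixed interval and that each of the six faces occupies a rectangular box. The names `Box`, `score_trial`, `target_index`, and `sample_ms` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical on-screen region holding one face."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x < self.right and self.top <= y < self.bottom


def score_trial(samples, boxes, target_index, sample_ms):
    """Summarize one trial (illustrative only, not the published method).

    samples: list of (x, y) gaze points recorded every sample_ms milliseconds.
    boxes: the face regions; target_index marks the face matching the soundtrack.
    """
    on_any = 0            # samples landing on any face
    on_target = 0         # samples landing on the matching face
    first_target_ms = None
    for i, (x, y) in enumerate(samples):
        hit = next((k for k, b in enumerate(boxes) if b.contains(x, y)), None)
        if hit is None:
            continue      # gaze was off all faces for this sample
        on_any += 1
        if hit == target_index:
            on_target += 1
            if first_target_ms is None:
                first_target_ms = i * sample_ms
    return {
        "made_match": on_target > 0,
        "target_ms": on_target * sample_ms,
        "proportion": on_target / on_any if on_any else None,
        "latency_ms": first_target_ms,
    }


# Six boxes laid out as a 3-by-2 grid of 100-pixel squares (an assumed layout).
GRID = [Box(c * 100, r * 100, (c + 1) * 100, (r + 1) * 100)
        for r in range(2) for c in range(3)]
```

In this sketch, time spent on the matching face stands in for how long the baby watched the correct speaker, and the delay before the first look at that face stands in for speed. A real analysis would also need to handle blinks, tracker dropouts, and calibration error.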

The data were then compared with language outcomes at later developmental stages, such as the total number of words and the number of unique words children used. The findings showed that infants who looked longer at the correct speaker had better language outcomes at 18 months, 2 years, and 3 years.

The connection between intersensory processing and language becomes clearer when you consider the nature of speech. It is sound, of course, but it is also accompanied by lip movements, facial expressions, and gestures. Speech involves both hearing and seeing. Baby talk in particular is a truly multisensory experience: a parent or caregiver makes playful gestures, perhaps while naming a favorite toy. When a baby can be more selective with their attention and cut through distractions to match a voice to a face or a sound to an object, this sets the stage for learning the words that correspond to specific objects in the world.

“Better selective attention to audiovisual speech early on may allow infants to take greater advantage of early word-learning opportunities, such as object naming, provided by caregivers during interactions,” Bahrick said.

Edgar emphasized that this research serves as a reminder to parents or caregivers that babies learn language by coordinating what they see and hear.

This means it can be helpful to point to what you are talking about, or to move an object while saying its name. “The object-sound synchrony helps show that this word belongs with this thing,” Edgar explained. “This is very important in early development and lays the groundwork for later, more complex language skills, as our studies demonstrate.”

More information: Elizabeth V. Edgar et al., Intersensory processing of faces and voices at 6 months predicts language outcomes at 18, 24, and 36 months of age, Infancy (2023). DOI: 10.1111/infa.12533