Artificial intelligence algorithms are rapidly becoming part of our daily lives. Many systems that require strong security are already, or soon will be, underpinned by machine learning. Facial recognition, banking, military targeting applications, robots, and autonomous vehicles are just a few examples of these systems.

An important question arises from this: how secure are these machine learning algorithms against malicious attacks?

My colleagues at the University of Melbourne and I discuss a potential solution to the vulnerability of machine learning models in an article that was recently published in Nature Machine Intelligence.

According to our hypothesis, new algorithms with a high degree of resistance to adversarial attacks could be developed by incorporating quantum computing into these models.

The dangers of data manipulation attacks

Machine learning algorithms can be remarkably accurate and efficient for many tasks. They are especially helpful for classifying and identifying image features. However, they are also highly vulnerable to data manipulation attacks, which can pose serious security risks.

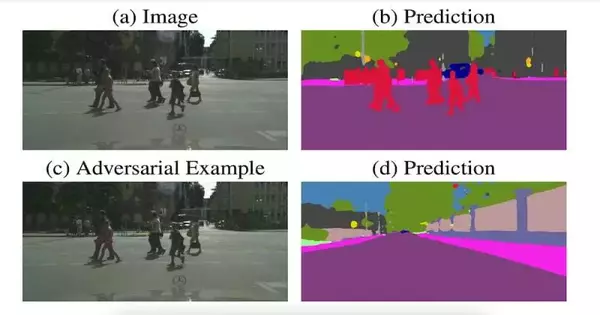

Data manipulation attacks, which involve very subtle changes to image data, can be launched in several ways. An attacker could mix corrupt data into the dataset used to train an algorithm, leading the algorithm to learn things it shouldn’t.

Manipulated data can also be injected during the testing phase (after training has been completed), in cases where the AI system continues to train its underlying algorithms while in use.
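
To make the idea of a subtle manipulation concrete, here is a minimal Python sketch. It assumes a toy linear classifier with made-up weights standing in for a real image model; the function names `predict` and `perturb` and all of the numbers are hypothetical and are not taken from our article. Each "pixel" is shifted by at most 0.05, yet the predicted label changes.

```python
def predict(weights, bias, pixels):
    """Toy linear classifier: returns 1 if the weighted sum is positive, else 0."""
    score = sum(w * x for w, x in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0


def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    def sign(w):
        return 1 if w > 0 else -1 if w < 0 else 0
    return [x - epsilon * sign(w) for w, x in zip(weights, pixels)]


# Hypothetical model parameters and a four-"pixel" input, for illustration only.
weights = [0.5, -0.25, 0.75, -0.5]
bias = -0.2
pixels = [0.6, 0.4, 0.5, 0.7]

adversarial = perturb(weights, pixels, epsilon=0.05)

original_label = predict(weights, bias, pixels)
adversarial_label = predict(weights, bias, adversarial)
largest_change = max(abs(a - b) for a, b in zip(adversarial, pixels))

print("original label:", original_label)
print("label after perturbation:", adversarial_label)
print("largest pixel change:", round(largest_change, 3))
```

Attacks on real deep learning models follow the same principle, but typically use the model's gradients to decide which way to nudge each pixel.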

People can even carry out such attacks in the physical world. A sticker on a stop sign could deceive an autonomous vehicle’s AI into thinking it is a speed limit sign. Or, on the front lines, troops could wear uniforms that trick AI-based drones into identifying them as landscape features.

Either way, data manipulation attacks can have serious repercussions. For instance, if a self-driving vehicle uses a machine learning algorithm that has been compromised, it might mistakenly conclude there are no people on the road when there are.

How quantum computing can help

In our article, we discuss how combining quantum computing with machine learning could give rise to secure algorithms known as quantum machine learning models.

These algorithms are carefully constructed to take advantage of unique quantum properties that would enable them to find particular patterns in image data that are difficult to manipulate. The end product would be robust algorithms that are resilient against even powerful attacks. Additionally, they wouldn’t require the costly “adversarial training” that is currently used to teach algorithms how to withstand such attacks.

Beyond this, quantum machine learning may make it possible to train algorithms more quickly and learn features with greater precision.

So how would it work?

Today’s classical computers work by storing and processing information as “bits”, or binary digits, the smallest unit of data a computer can process. In classical computers, which operate according to the laws of classical physics, bits are represented as binary numbers, specifically 0s and 1s.

In contrast, quantum computing adheres to the principles of quantum physics. Qubits, or quantum bits, are used in quantum computers to store and process data. Qubits can be one, zero, or a combination of both. A quantum system that exists in multiple states at once is said to be in a superposition state. Quantum computers can be used to design clever algorithms that exploit this property.
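
Superposition can be illustrated with a few lines of Python. This is only a sketch under simplifying assumptions: a single qubit is modelled as a pair of complex amplitudes, and the helper names `hadamard` and `probabilities` are ours for illustration, not part of any quantum software library. Applying a Hadamard gate (a standard single-qubit operation) to the 0 state gives an equal combination of 0 and 1.

```python
import math

# A single-qubit state is a pair of complex amplitudes (a0, a1)
# with |a0|^2 + |a1|^2 = 1. This is the definite "0" state.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which maps 0 to an equal superposition of 0 and 1."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Probability of measuring 0 and of measuring 1 (squared amplitude magnitudes)."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


superposed = hadamard(ZERO)
p0, p1 = probabilities(superposed)
restored = hadamard(superposed)  # applying the gate twice undoes it

print("P(0) =", round(p0, 3), " P(1) =", round(p1, 3))
print("after a second Hadamard:", probabilities(restored))
```

A classical bit would have to be either 0 or 1 at this point. The qubit instead carries both amplitudes until it is measured, and the second gate shows that the two amplitudes can recombine into a definite 0 again.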

However, when it comes to protecting machine learning models, quantum computing could turn out to be a double-edged sword.

On the one hand, quantum machine learning models will provide crucial security for numerous sensitive applications. On the other hand, quantum computers could be used to generate powerful adversarial attacks, capable of easily deceiving even state-of-the-art conventional machine learning models.

Moving forward, we’ll need to seriously consider the best ways to protect our systems. An adversary with access to early quantum computers would pose a significant security risk.

Due to limitations in the current generation of quantum processors, the evidence suggests that quantum machine learning is still years away from becoming a reality.

Today’s quantum computers are relatively small (with fewer than 500 qubits), and their error rates are high. Errors can arise for several reasons, including imperfect fabrication of qubits, flaws in the control circuitry, and loss of information through interaction with the environment (known as “quantum decoherence”).

Still, quantum software and hardware have made a lot of progress in recent years. According to recent quantum hardware roadmaps, quantum devices built in the coming years are expected to have hundreds to thousands of qubits.

These devices should be able to run powerful quantum machine learning models that help protect a wide range of industries relying on machine learning and AI tools.

Quantum technologies are receiving an increasing amount of investment from both the public and private sectors all over the world.

This month, the Australian government launched the National Quantum Strategy, aimed at growing the country’s quantum industry and commercializing quantum technologies. The CSIRO estimates that by 2030, Australia’s quantum industry could be worth approximately A$2.2 billion.

Journal information: Nature Machine Intelligence