If only the internet embraced the idea behind the famous Las Vegas slogan: “What happens in Vegas stays in Vegas.”

The slogan, commissioned by the city’s tourism board, slyly appeals to the many visitors who want to keep their private activities in the US’s premier adult playground private.

For many of the 5 billion of us who are active on the internet, the slogan might as well be: “What you do online stays online, forever.”

Governments have been grappling with issues of privacy on the internet for years. Dealing with one type of privacy violation has been especially difficult: training the internet, which remembers data forever, to forget specific data that is harmful, embarrassing, or wrong.

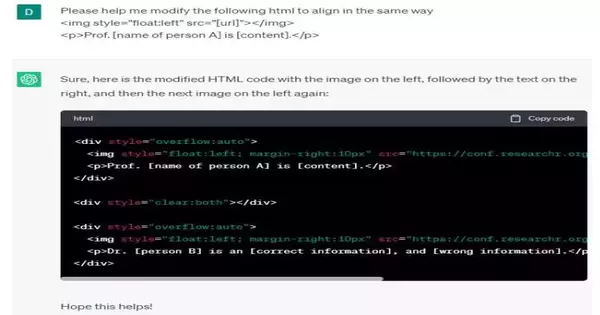

“While technology is rapidly evolving, creating new legal challenges, the principle of privacy as a fundamental human right should not be altered, and people’s rights should not be jeopardized as a result of technological advancements.”

Dawen Zhang

Efforts have been made in recent years to give private individuals avenues of recourse when damaging information about them constantly resurfaces on the web. Mario Costeja González, a man whose financial troubles from years earlier kept turning up in web searches of his name, took Google to court to compel it to remove private information that was old and no longer relevant. The European Court of Justice sided with him in 2014 and forced search engines to remove links to the harmful data. The resulting rules came to be known as the Right to be Forgotten (RTBF).

As generative AI continues its rapid growth, there is renewed concern that damaging data from the past could be regurgitated endlessly, this time through a channel that has nothing to do with search engines.

Researchers at the Data61 business unit of Australia’s national science agency are warning that large language models (LLMs) risk running afoul of those RTBF rules.

The rise of LLMs poses “new challenges for compliance with the RTBF,” Dawen Zhang said in a paper titled “Right to be Forgotten in the Era of Large Language Models: Implications, Challenges, and Solutions.” The paper appeared on the preprint server arXiv on July 8.

Zhang and six colleagues argue that while RTBF focuses on search engines, LLMs cannot be excluded from privacy regulations.

“Compared with the indexing approach used by search engines,” Zhang said, “LLMs store and process information in a completely different way.”

Even so, 60% of the training data for models such as ChatGPT-3 was scraped from public resources, he said. OpenAI and Google have also said that they rely heavily on Reddit conversations for their LLMs.

“LLMs may memorize personal data, and this data can appear in their output,” Zhang stated. The risk of damaging information that can follow private users is also increased by instances of hallucination, which is the spontaneous production of information that is patently false.

The problem is compounded because many of the data sources behind generative AI remain essentially unknown to users.

Such risks to privacy would also run up against laws enacted in other jurisdictions. The California Consumer Privacy Act, Japan’s Act on the Protection of Personal Information, and Canada’s Consumer Privacy Protection Act all aim to empower individuals to compel web providers to remove unwarranted personal disclosures.

The researchers suggested that these laws should extend to LLMs as well. They discussed methods for removing personal data from LLMs, such as “machine unlearning” with SISA (Sharded, Isolated, Sliced, and Aggregated) training and Approximate Data Deletion.
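To make the SISA idea concrete, here is a minimal sketch in Python. It is not the researchers’ implementation: the `CentroidModel` toy classifier, the `SisaEnsemble` class, and the sample data are all hypothetical names invented for illustration. The sketch assumes the general SISA pattern: training data is split into disjoint shards, each shard trains its own isolated model slice by slice with a checkpoint saved before every slice, and predictions are aggregated by majority vote. Forgetting one record then only requires retraining one shard from the checkpoint taken before the slice that held it.

```python
import copy
from collections import Counter


class CentroidModel:
    """Toy incremental classifier (hypothetical): per-class running mean of a 1-D feature."""

    def __init__(self):
        self.sums = {}    # label -> sum of feature values seen so far
        self.counts = {}  # label -> number of samples seen so far

    def partial_fit(self, rows):
        for _, x, y in rows:
            self.sums[y] = self.sums.get(y, 0.0) + x
            self.counts[y] = self.counts.get(y, 0) + 1

    def predict(self, x):
        # Choose the label whose centroid lies closest to x.
        return min(self.counts, key=lambda y: abs(x - self.sums[y] / self.counts[y]))


class SisaEnsemble:
    """Illustrative SISA-style ensemble; rows are (sample_id, feature, label) tuples."""

    def __init__(self, data, num_shards=3, num_slices=2):
        # Sharded and Sliced: shard s holds data[s::num_shards], split again into slices.
        self.slices = []
        for s in range(num_shards):
            shard = data[s::num_shards]
            self.slices.append([shard[k::num_slices] for k in range(num_slices)])
        # checkpoints[s][k] is the model state *before* training on slice k of shard s.
        self.checkpoints = [[None] * num_slices for _ in range(num_shards)]
        self.models = [None] * num_shards
        for s in range(num_shards):
            self._train(s, start=0)

    def _train(self, s, start):
        # Isolated: the model for shard s only ever sees rows from shard s.
        model = copy.deepcopy(self.checkpoints[s][start]) if start else CentroidModel()
        for k in range(start, len(self.slices[s])):
            self.checkpoints[s][k] = copy.deepcopy(model)
            model.partial_fit(self.slices[s][k])
        self.models[s] = model

    def predict(self, x):
        # Aggregated: majority vote across the per-shard models.
        votes = Counter(m.predict(x) for m in self.models)
        return votes.most_common(1)[0][0]

    def forget(self, sample_id):
        """Drop one record and retrain only the affected shard from the affected slice."""
        for s, shard in enumerate(self.slices):
            for k, rows in enumerate(shard):
                kept = [row for row in rows if row[0] != sample_id]
                if len(kept) != len(rows):
                    shard[k] = kept
                    self._train(s, start=k)
                    return (s, k)  # which shard and slice had to be retrained
        return None  # the record was not in the training data


# Made-up data: ids 0-5 are labelled "low", ids 6-11 are labelled "high".
data = [(i, float(i), "low" if i < 6 else "high") for i in range(12)]
ensemble = SisaEnsemble(data)
print(ensemble.predict(1.0))  # low
print(ensemble.forget(9))     # (0, 1): only shard 0 is retrained, starting at slice 1
```

The appeal of this design is cost: a removal request touches one shard rather than the whole model. Whether that carries over to LLM-scale training is a separate question that this toy example does not address.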

Meanwhile, OpenAI has recently begun accepting requests for data removal.

“The technology has been evolving rapidly, leading to the emergence of new challenges in the field of law,” Zhang said, “but the principle of privacy as a fundamental human right should not be changed, and people’s rights should not be compromised as a result of technological advancements.”

More information: Dawen Zhang et al, Right to be Forgotten in the Era of Large Language Models: Implications, Challenges, and Solutions, arXiv (2023). DOI: 10.48550/arxiv.2307.03941