When is an apple not an apple? If you're a computer, the answer is when it's been cut in half.

While significant progress has been made in computer vision over the past few years, teaching a computer to identify objects as they change shape remains elusive in the field, particularly for artificial intelligence (AI) systems. Now, computer science researchers at the University of Maryland are tackling the problem using objects that we alter every day: fruits and vegetables.

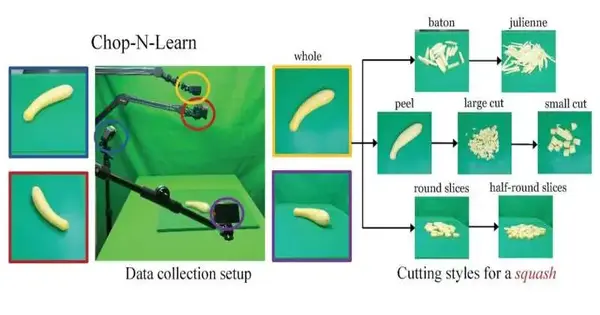

Their product is Chop & Learn, a dataset that teaches machine learning systems to recognize produce in various forms, even as it is being peeled, sliced, or chopped into pieces.

The project was presented recently at the 2023 International Conference on Computer Vision in Paris.

“You and I can picture how a sliced apple or orange would look compared with a whole fruit, but machine learning models require lots of data to learn how to interpret that,” said Nirat Saini, a fifth-year computer science doctoral student and lead author of the paper. “We needed to come up with a method to help the computer imagine unseen scenarios the same way that humans do.”

To create the dataset, Saini and fellow computer science doctoral students Hanyu Wang and Archana Swaminathan filmed themselves chopping 20 types of fruits and vegetables in seven styles, using video cameras set up at four angles.

The variety of angles, people, and food-prepping styles is necessary for a comprehensive dataset, said Saini.

“Someone may peel their apple or potato before chopping it, while others don’t. The computer is going to recognize that differently,” she said.

In addition to Saini, Wang, and Swaminathan, the Chop & Learn team includes computer science doctoral students Vinoj Jayasundara and Bo He; Kamal Gupta, Ph.D. ’23, now at Tesla Optimus; and their adviser, Abhinav Shrivastava, an assistant professor of computer science.

“Being able to recognize objects as they are undergoing different transformations is crucial for building long-term video understanding systems,” said Shrivastava, who also has an appointment in the University of Maryland Institute for Advanced Computer Studies. “We believe our dataset is a good start to making real progress on the basic crux of this problem.”

In the short term, Shrivastava said, the Chop & Learn dataset will contribute to the advancement of image and video tasks such as 3D reconstruction, video generation, and the summarization and parsing of long-term video.

Those advances could one day have a broader impact on applications such as safety features in driverless vehicles or helping officials identify public safety threats, he said.

And while it’s not the immediate goal, Shrivastava said, Chop & Learn could contribute to the development of a robotic chef that could turn produce into healthy meals in your kitchen on command.

Provided by University of Maryland