As shown by breakthroughs in various fields of artificial intelligence (AI), such as image processing, smart healthcare, self-driving cars, and smart cities, this is without a doubt the golden age of deep learning. In the next decade or so, AI and computing systems will be equipped with the ability to learn and think the way humans do, processing a continuous flow of information and interacting with the real world.

However, current AI models suffer from a loss of performance when they are trained sequentially on new information. This is because each time new information is learned, it is written over existing knowledge, erasing what was learned before. This effect is known as “catastrophic forgetting.” The problem stems from the stability-plasticity dilemma: the AI model must update its memory to continually adapt to new information while, at the same time, maintaining the stability of its existing knowledge. This issue prevents state-of-the-art AI from continually learning from real-world information.
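To make catastrophic forgetting concrete, here is a minimal toy sketch in Python. It is a hypothetical illustration, not code from the study: a two-weight linear model is trained with plain stochastic gradient descent on a task A and then on a task B. The task definitions, learning rate, and helper names (`sgd`, `mse`, `task_a`, `task_b`) are assumptions chosen for clarity. A single weight vector, (1, 2), fits both tasks exactly, yet training on B alone pulls the shared weight away from what A needs.

```python
# Hypothetical toy illustration of catastrophic forgetting (not the study's model).
# A 2-weight linear model y = w1*x1 + w2*x2 is trained with plain SGD on task A,
# then on task B. The weights (1, 2) fit BOTH tasks exactly, yet sequential
# training on B drifts away from them and the error on task A goes up.


def predict(w, x):
    return w[0] * x[0] + w[1] * x[1]


def sgd(w, data, lr=0.05, epochs=500):
    """Plain per-example gradient descent on squared error."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y        # residual of the squared-error loss
            w[0] -= lr * 2 * err * x[0]    # gradient step on w1
            w[1] -= lr * 2 * err * x[1]    # gradient step on w2
    return w


def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


scales = [0.5, 1.0, 1.5]
task_a = [((a, 0.0), 1.0 * a) for a in scales]   # constrains only w1 (w1 = 1)
task_b = [((b, b), 3.0 * b) for b in scales]     # constrains only w1 + w2 = 3

w = sgd([0.0, 0.0], task_a)
err_a_before = mse(w, task_a)    # about 0: task A has been learned
w = sgd(w, task_b)
err_a_after = mse(w, task_a)     # above 1: learning B overwrote w1
print(f"weights after B: {w[0]:.3f}, {w[1]:.3f}")
print(f"task A error before B: {err_a_before:.2e}, after B: {err_a_after:.3f}")
```

With these settings the weights end at roughly (2, 1) and the task A error rises from essentially zero to about 1.17. Nothing in the sequential update refers back to task A, which is the root of the problem.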

Edge computing systems allow computation to be moved from cloud storage and data centers to the original data source, such as devices connected to the Internet of Things (IoT). Although many continual learning models have been proposed, applying continual learning efficiently on resource-limited edge computing systems remains a challenge, because conventional models require high computing power and a large memory capacity.

A new type of code for realizing an energy-efficient continual learning system has been designed by a team of researchers from the Singapore University of Technology and Design (SUTD), including Shao-Xiang Go, Qiang Wang, Bo Wang, Yu Jiang, and Natasa Bajalovic. The team was led by principal investigator Assistant Professor Desmond Loke of SUTD. Their study, “Continual Learning Electrical Conduction in Resistive-Switching-Memory Materials,” was published in the journal Advanced Theory and Simulations.

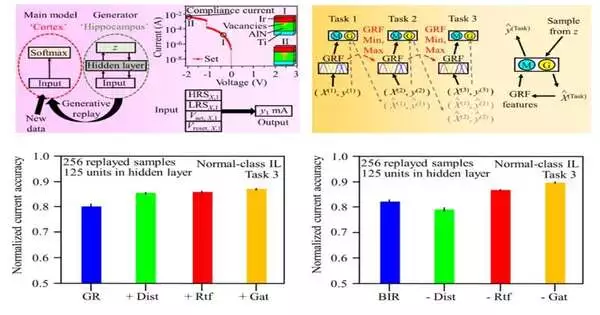

The team proposed using Brain-Inspired Replay (BIR), a brain-inspired model that performs continual learning naturally. Built on an artificial neural network and a variational autoencoder, the BIR model mimics functions of the human brain and performs well in class-incremental learning scenarios without storing data. The researchers also used the BIR model to represent conductive filament growth driven by electric current in digital memory systems.
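One way to learn a new task without storing old data is generative replay, the idea that BIR builds on: a generative model produces pseudo-examples of earlier tasks, the previous model labels them, and these pseudo-examples are mixed into training on the new task. The Python sketch below is a hypothetical toy, not the authors' implementation. As an explicit assumption, a per-feature Gaussian (`GaussianGenerator`) stands in for the variational autoencoder, the tasks are simple linear regressions rather than class-incremental classification, and all names and settings are illustrative. BIR adds further brain-inspired refinements on top of plain generative replay that are not modeled here.

```python
import random

# Hypothetical toy of generative replay (the idea BIR builds on); NOT the study's code.
# ASSUMPTION: GaussianGenerator is a simple stand-in for a variational autoencoder.


def predict(w, x):
    return w[0] * x[0] + w[1] * x[1]


def sgd(w, data, lr=0.05, epochs=500):
    """Plain per-example gradient descent on squared error."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w[0] -= lr * 2 * err * x[0]
            w[1] -= lr * 2 * err * x[1]
    return w


def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


class GaussianGenerator:
    """Stand-in for a VAE: models each input feature as an independent Gaussian."""

    def __init__(self, inputs):
        n, dims = len(inputs), len(inputs[0])
        self.mean = [sum(x[d] for x in inputs) / n for d in range(dims)]
        self.std = [
            (sum((x[d] - self.mean[d]) ** 2 for x in inputs) / n) ** 0.5
            for d in range(dims)
        ]

    def sample(self, rng):
        return tuple(rng.gauss(m, s) for m, s in zip(self.mean, self.std))


rng = random.Random(0)
scales = [0.5, 1.0, 1.5]
task_a = [((a, 0.0), a) for a in scales]        # constrains only w1 (w1 = 1)
task_b = [((b, b), 3.0 * b) for b in scales]    # constrains only w1 + w2 = 3

# Learn task A, then keep only the weights and the generator (no raw task A data).
w_old = sgd([0.0, 0.0], task_a)
generator = GaussianGenerator([x for x, _ in task_a])

# Replay: generate pseudo-inputs and label them with the frozen old model.
replayed = []
for _ in range(20):
    x = generator.sample(rng)
    replayed.append((x, predict(w_old, x)))

mixed = task_b + replayed
rng.shuffle(mixed)                              # interleave old and new examples

w_naive = sgd(w_old, task_b)                    # new task only: forgets task A
w_new = sgd(w_old, mixed)                       # new task plus replayed examples

print(f"task A error, B only:     {mse(w_naive, task_a):.3f}")
print(f"task A error, B + replay: {mse(w_new, task_a):.2e}")
```

Training on task B alone moves the weights to roughly (2, 1) and loses task A, while mixing in replayed pseudo-samples keeps them near (1, 2), a solution that fits both tasks. After the generator is fitted, only its summary statistics and the old weights are kept, never the raw task A examples.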

“In this model, knowledge is preserved within trained models to minimize performance loss when additional tasks are introduced, without the need to refer to data from previous works,” explained Assistant Professor Loke. “Thus, this saves us a significant amount of energy.”

“Furthermore, a state-of-the-art accuracy of 89% on challenging continual learning tasks was achieved without storing data, which is twice as high as that of traditional continual learning models, along with high energy efficiency,” he added.

To allow the model to process real-world data on the fly and independently, the team plans to improve the model's scalability in the next phase of their research.

“As the small scale demonstrates, this research is still in its early stages,” said Assistant Professor Loke. “The adoption of this approach is expected to allow edge AI systems to progress independently, without human control.”

More information: Shao‐Xiang Go et al, Continual Learning Electrical Conduction in Resistive‐Switching‐Memory Materials, Advanced Theory and Simulations (2022). DOI: 10.1002/adts.202200226