Researchers at the University of California, Davis, have developed a web application to help farmers and industry workers use drones and other uncrewed aerial vehicles, or UAVs, to generate the best possible data. By helping farmers use resources more efficiently, this advance could help them adapt to a world with a changing climate that needs to feed billions.

Associate Professor Alireza Pourreza, director of the UC Davis Digital Agriculture Lab, and postdoctoral researcher Hamid Jafarbiglu, who recently completed his doctorate in biological systems engineering under Pourreza, designed the When2Fly app to make drones more proficient and accurate. Specifically, the platform helps drone users avoid glare-like areas called hotspots that can ruin collected data.

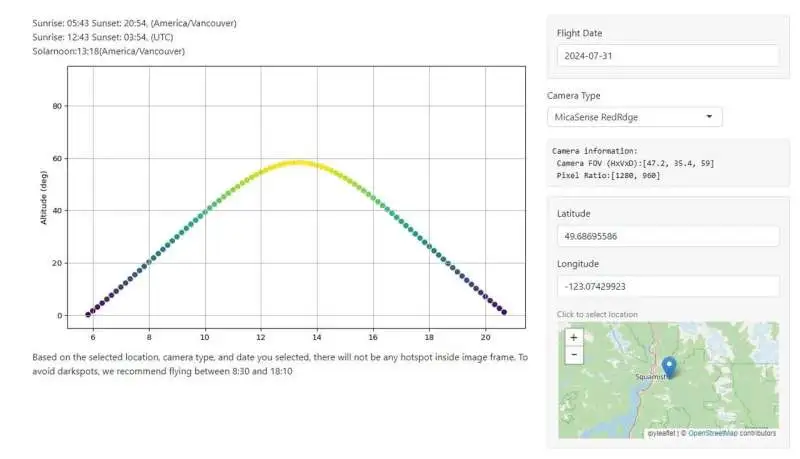

Drone users select the date they plan to fly, the type of camera they are using, and their location, either by selecting a point on a map or by entering coordinates. The app then shows the best times of that specific day to collect crop data from a drone.

“In conventional crop management, we manage the entire field uniformly, assuming that every single plant will produce a uniform amount of yield and require a uniform amount of input, which is not an accurate assumption. We need to understand our crops’ spatial variability in order to identify and solve concerns in a timely and exact manner, and drones are these fantastic instruments that producers can utilize, but they must be correctly used.”

Associate Professor Alireza Pourreza, director of the UC Davis Digital Agriculture Lab.

Jafarbiglu and Pourreza said that using this app for drone imaging and data collection is crucial to improving farming efficiency and reducing agriculture’s carbon footprint. Getting the best data, such as which section of an orchard might need more nitrogen or less water, or which trees are being affected by disease, allows producers to allocate resources more efficiently and effectively.

“In conventional crop management, we manage the entire field uniformly, assuming every single plant will produce a uniform amount of yield, and they require a uniform amount of input, which is not an accurate assumption,” said Pourreza. “We need to have an insight into our crops’ spatial variability to be able to identify and address issues in a timely and precise way, and drones are these amazing tools that are accessible to growers, but they need to know how to use them properly.”

Dispelling the solar noon belief

In 2019, Jafarbiglu was working to extract data from aerial images of walnut and almond orchards and other specialty crops when he realized something was wrong with the data.

“No matter how accurately we calibrated all the data, we were still not getting good results,” said Jafarbiglu. “I took this to Alireza, and I said, ‘I feel there’s something extra in the data that we are not aware of and that we’re not compensating for.’ I decided to check it all.”

Jafarbiglu pored through the 100 terabytes of images collected over three years. He noticed that after the images had been calibrated, there were glaring bright white spots in pictures that were supposed to look flat and uniform.

But it couldn’t be glare, because the sun was behind the drone taking the image. So Jafarbiglu reviewed the literature, going back to the 1980s, in search of other examples of this phenomenon. Not only did he find mentions of it, but researchers had also coined a term for it: hotspot.

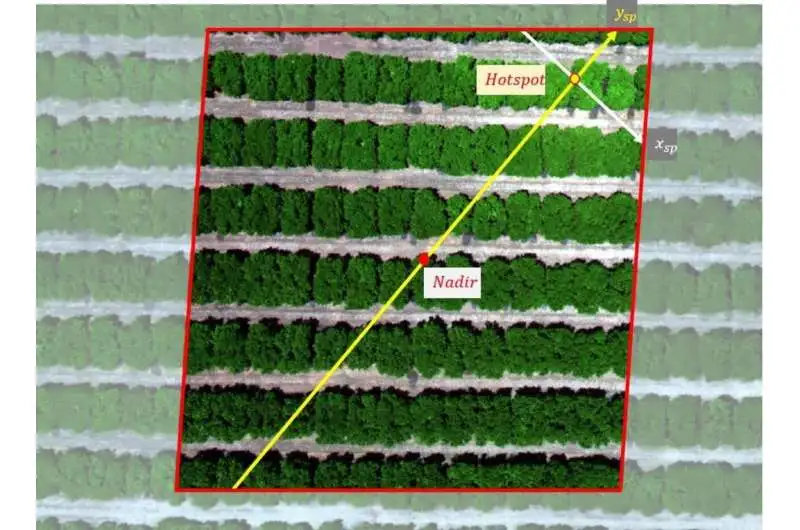

Example of drone image data with a hotspot. Credit: ISPRS Journal of Photogrammetry and Remote Sensing (2023). DOI: 10.1016/j.isprsjprs.2022.12.002

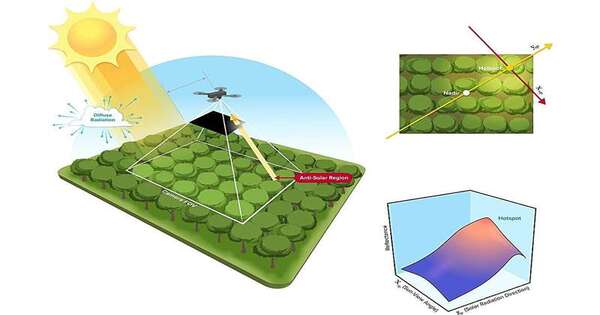

A hotspot happens when the sun and UAV are lined up so that the drone is between the viewable area of the camera’s lens system and the sun. The drone takes photos of the Earth, and the resulting images show a gradual increase in brightness toward a certain area. That bright point is the hotspot.

The hotspots are a problem, Jafarbiglu said, because when collecting UAV data in agriculture, where a high level of image overlap is required, observed differences in the calibrated images need to come solely from differences between plants.

For example, every plant may appear in 20 or more images, each from a different view angle. In some images, the plant might be close to the hotspot, while in others it may be farther away, so the reflectance may vary based on the plant’s distance from the hotspot and its location in the frame, not on any of the plant’s inherent properties. If all these images are combined into a mosaic and data are extracted, the reliability of the data would be compromised, rendering it useless.

Pourreza and Jafarbiglu found that the hotspots consistently occurred when drones were taking images at solar noon in midsummer, which many believe is the best time to fly drones. It’s an obvious assumption: the sun is at its highest point above the Earth, variations in illumination are minimal, if not constant, and fewer shadows are visible in the images.

However, that sometimes works against the drone, because the sun’s geometric relationship to the Earth varies with location and time of year, increasing the chance of having a hotspot inside the image frame when the sun is higher in the sky.
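The geometry behind this can be sketched in a few lines of Python. This is an illustrative approximation, not the When2Fly method: it assumes a camera pointing straight down, in which case the hotspot sits at an off-nadir angle equal to the solar zenith angle and lands inside the frame whenever that angle is smaller than half the camera's field of view. The function names, the 25-degree half field of view, and the example latitudes are all assumptions chosen for illustration; the declination formula is a common textbook approximation.

```python
import math


def solar_declination_deg(day_of_year: int) -> float:
    """Approximate solar declination in degrees (common cosine approximation)."""
    return -23.44 * math.cos(2.0 * math.pi * (day_of_year + 10) / 365.0)


def noon_solar_zenith_deg(latitude_deg: float, day_of_year: int) -> float:
    """Solar zenith angle at solar noon: |latitude - declination|."""
    return abs(latitude_deg - solar_declination_deg(day_of_year))


def hotspot_in_frame_at_noon(latitude_deg: float, day_of_year: int,
                             half_fov_deg: float) -> bool:
    """True if a nadir-pointing camera would see the hotspot at solar noon.

    Assumption: the camera looks straight down, so the hotspot appears at an
    off-nadir angle equal to the solar zenith angle.
    """
    return noon_solar_zenith_deg(latitude_deg, day_of_year) < half_fov_deg


if __name__ == "__main__":
    HALF_FOV_DEG = 25.0  # assumed half field of view, not a real camera spec
    JUNE_21 = 172        # day of year near the northern summer solstice
    sites = [("Guatemala City", 14.6), ("Davis, California", 38.5),
             ("Edmonton, Canada", 53.5)]  # approximate latitudes
    for name, lat in sites:
        zenith = noon_solar_zenith_deg(lat, JUNE_21)
        flagged = hotspot_in_frame_at_noon(lat, JUNE_21, HALF_FOV_DEG)
        print(f"{name}: noon zenith {zenith:.1f} deg, hotspot in frame: {flagged}")
```

Under these assumptions, the lower-latitude sites are flagged at midsummer solar noon while the high-latitude site is not, because the sun never climbs high enough there to put the hotspot inside the frame.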

Example of the output from the app recommending times to fly in Canada. Credit: ISPRS Journal of Photogrammetry and Remote Sensing (2023). DOI: 10.1016/j.isprsjprs.2022.12.002

“In high-latitude regions such as Canada, you don’t have any problem; you can fly anytime. But then in low-latitude regions such as California, you will have a little bit of a problem because of the sun angle,” Pourreza said.

“Then, as you get closer to the equator, the problem gets bigger and bigger. For example, the best time of flight in Northern California and Southern California will be different. Then you go to summer in Guatemala, and basically, from 10:30 a.m. to almost 2 p.m., you shouldn’t fly, depending on the field-oriented control of the camera. It’s exactly the opposite of the conventional belief that everywhere we should fly at solar noon.”
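To see why the window to avoid widens toward the equator, the same simplified geometry can be extended from solar noon to the whole day. The sketch below is a rough illustration under stated assumptions, not the app's algorithm: it assumes a nadir-pointing camera, a hypothetical 25-degree half field of view, and local solar time rather than clock time (so it ignores time zones, longitude offsets and the equation of time). It uses the standard relation cos(zenith) = sin(lat)·sin(decl) + cos(lat)·cos(decl)·cos(hour angle) and solves for the hour angle at which the solar zenith angle equals the half field of view.

```python
import math
from typing import Optional, Tuple


def solar_declination_deg(day_of_year: int) -> float:
    """Approximate solar declination in degrees (common cosine approximation)."""
    return -23.44 * math.cos(2.0 * math.pi * (day_of_year + 10) / 365.0)


def hotspot_window_solar_hours(latitude_deg: float, day_of_year: int,
                               half_fov_deg: float) -> Optional[Tuple[float, float]]:
    """Local-solar-time window (start, end) in hours during which the hotspot
    would fall inside a nadir-pointing camera frame, or None if it never does.

    Assumptions: nadir camera, |latitude| < 90, solar time rather than clock time.
    """
    phi = math.radians(latitude_deg)
    delta = math.radians(solar_declination_deg(day_of_year))
    # Hour angle at which the solar zenith angle equals the half field of view.
    cos_h = ((math.cos(math.radians(half_fov_deg)) - math.sin(phi) * math.sin(delta))
             / (math.cos(phi) * math.cos(delta)))
    if cos_h > 1.0:
        return None  # the sun never gets high enough on this day
    cos_h = max(cos_h, -1.0)  # guard against rounding below -1
    half_width_hours = math.degrees(math.acos(cos_h)) / 15.0  # 15 degrees per hour
    return (12.0 - half_width_hours, 12.0 + half_width_hours)


if __name__ == "__main__":
    JUNE_21 = 172
    for name, lat in [("Guatemala City", 14.6), ("Edmonton, Canada", 53.5)]:
        window = hotspot_window_solar_hours(lat, JUNE_21, half_fov_deg=25.0)
        print(name, "->", window)
```

With these illustrative inputs, the low-latitude site gets a window of several hours centered on solar noon, while the high-latitude site gets none. The exact times a tool like When2Fly reports depend on the real camera geometry and on clock time, which this sketch does not model.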

Grow technology, feed the planet

Drones are not the only tools that can make use of this discovery. Troy Magney, an assistant professor of plant sciences at UC Davis, mainly uses towers to scan fields and collect plant reflectance data from various viewing angles. He contacted Jafarbiglu after reading his research, published in February in the ISPRS Journal of Photogrammetry and Remote Sensing, because he was seeing a similar issue in the remote sensing of plants and noted that it’s often ignored by end users.

“The work that Hamid and Ali have done will be beneficial to a wide range of researchers, both at the tower and the drone scale, and help them to interpret what they are actually seeing, whether it’s a change in vegetation or a change in just the angular impact of the signal,” he said.

For Pourreza, the When2Fly app represents a major step forward in deploying technology to solve challenges in agriculture, including the ultimate conundrum: feeding a growing population with limited resources.

“California is much more advanced than other states and other countries with technology, yet our agriculture in the Central Valley uses technologies from 30 or more years ago,” said Pourreza.

“My research is focused on sensing, but there are other areas like 5G connectivity and cloud computing to automate the data collection and analytics process and make it real-time. All this data can help growers make informed decisions that can lead to an efficient food production system. When2Fly is an important element of that.”

More information: Hamid Jafarbiglu et al, Impact of sun-view geometry on canopy spectral reflectance variability, ISPRS Journal of Photogrammetry and Remote Sensing (2023). DOI: 10.1016/j.isprsjprs.2022.12.002