Alzheimer’s disease has been on the rise in recent years all over the world, and it is rarely diagnosed at an early stage when it can still be effectively controlled. KTU researchers used artificial intelligence to see if human-computer interfaces could be modified to help people with memory impairments recognize a visible object in front of them.

According to Rytis Maskeliūnas, a researcher at Kaunas University of Technology’s Department of Multimedia Engineering, classifying the information visible on a face is an everyday human function: “While communicating, the face ‘tells’ us the context of the conversation, especially from an emotional point of view, but can we identify visual stimuli based on brain signals?”

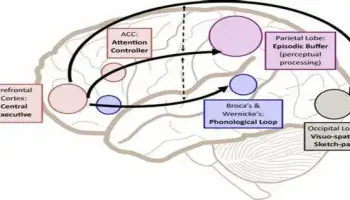

Human facial visual processing is complex. We can perceive information such as a person’s identity or emotional state by analyzing their faces. The study’s goal was to examine a person’s ability to process contextual information from the face and determine how they respond to it.

Face can indicate the first symptoms of the disease

Many studies, according to Maskeliūnas, show that brain diseases can potentially be studied by examining facial muscle and eye movements, because degenerative brain disorders affect not only memory and cognitive functions but also the cranial nervous system associated with facial (and especially eye) movements.

Dovilė Komolovaitė, a graduate of the KTU Faculty of Mathematics and Natural Sciences and co-author of the study, said the study clarified whether the brain of a patient with Alzheimer’s disease processes visible faces in the same way as the brains of individuals without the disease.

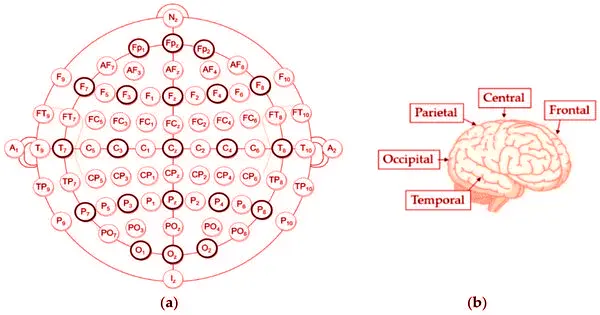

“The study makes use of data from an electroencephalograph, which measures electrical impulses in the brain,” explains Komolovaitė, who is currently pursuing a master’s degree in Artificial Intelligence at the Faculty of Informatics. The experiment was carried out on two groups of people: healthy individuals and Alzheimer’s patients.

“A person with Alzheimer’s typically has significantly noisier brain signals than a healthy person,” says Komolovaitė, emphasizing that this correlates with the greater difficulty people have focusing and staying attentive when experiencing Alzheimer’s symptoms.

Photos of people’s faces were shown during the study

“Older age is one of the main risk factors for dementia, and since the effects of gender were noticed in brain waves, the study is more accurate when only one gender group is chosen,” said the researchers.

Each participant in the study completed experiments lasting up to an hour, during which photos of human faces were shown. According to the researcher, the photos were chosen based on several criteria: to analyze the influence of emotions, neutral and fearful faces were shown, and to analyze the familiarity factor, participants were shown both people they knew and randomly chosen people.

To check whether a person sees and understands a face correctly, the study participants were asked to press a button after each stimulus to indicate whether the face shown was inverted or upright.

“Even at this stage, an Alzheimer’s patient makes mistakes, so it is important to determine whether the impaired recognition of the object is due to memory or vision processes,” says the researcher.

Inspired by real-life interactions with Alzheimer’s patients

Maskeliūnas explains that his involvement with Alzheimer’s disease began with a collaboration with the Huntington’s Disease Association, which exposed him to the reality of these various neurodegenerative diseases.

In addition, the researcher had direct contact with Alzheimer’s patients: “I observed that the diagnosis is usually confirmed too late, after the brain has already suffered irreversible damage. Although there is no effective treatment for this disease, it can be slowed and maintained by gaining some healthy years of life.”

Today, we can see how human-computer interaction is being used to help people with physical disabilities. Controlling a robotic hand with a “thought,” or a paralyzed person writing a text by imagining letters, is not a novel idea. Trying to understand the human brain remains one of the most difficult tasks that exists today.

The researchers used data from standard electroencephalograph equipment in this study, but Maskeliūnas emphasizes that in order to create a practical tool, data from invasive microelectrodes, which can more accurately measure the activity of neurons, would be preferable. This would significantly improve the AI model’s quality.

“Of course, in addition to the technical requirements, a community environment focused on making life easier for people with Alzheimer’s disease should exist. Still, I believe that in five years we will see technologies focused on improving physical function, with a focus on people affected by brain diseases coming later,” Maskeliūnas says.

According to master’s student Komolovaitė, a clinical examination with the assistance of colleagues in the field of medicine is required, so this stage of the process would be lengthy: “A certification process is also required if we want to use this test as a medical tool.”