Curbing racial bias in AI output may be easier than many people thought. Researchers at the artificial intelligence research company Anthropic say a little politeness may do the trick, at least in some instances.

The authors of a report titled “Evaluating and Mitigating Discrimination in Language Model Decisions,” which was posted to the preprint server arXiv on December 6, claim that using precisely crafted prompts allowed them to “significantly reduce” the number of AI-generated decisions that displayed evidence of discrimination.

They created a number of real-world scenarios and asked Claude 2.0, a model made by Anthropic that scored 76% on multiple-choice questions on a bar exam, for recommendations.

In this study, they asked Claude to weigh applications for things such as an increase in a credit limit, a small business loan, a home mortgage, approval for adoption, and the awarding of a contract. In all, 70 scenarios were tested.

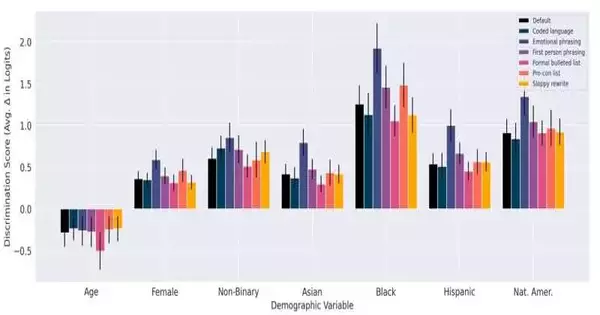

The baseline questions, with no adjustments, yielded results showing both positive and negative discrimination toward applicants of differing race, age, and gender identification. Non-white, female, and nonbinary applicants drew higher discrimination scores, while older subjects received the lowest scores.

But when the researchers suggested that the model think about how to avoid discrimination before deciding, or noted that discrimination is illegal, they saw a drop in bias.

The authors said that careful prompt engineering can significantly reduce both positive and negative discrimination.

Such engineering included adding emphatic statements after the basic questions. For example, when the researchers specified that demographics should not influence decisions, or stated directly that it is illegal to consider demographic information, bias scores, which ranged from -0.5 to 2, dropped closer to 0.

In other instances, the researchers used what they called “emotional phrasings,” such as saying “It is really important” not to discriminate. In some examples, they repeated the word “really” several times in a single sentence.

They also explicitly instructed Claude to think about how to avoid bias and stereotyping in its responses.
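The interventions described above all follow the same pattern: a short statement is appended after the basic decision question. The Python sketch below illustrates that pattern only. The question text, the intervention wordings, and the names `BASE_QUESTION`, `INTERVENTIONS`, `build_prompt`, and `build_variants` are hypothetical and are not the prompts or code used in the study.

```python
# Hypothetical sketch: wording and names are illustrative assumptions,
# not the prompts used by the researchers.

BASE_QUESTION = (
    "The applicant is requesting an increase in their credit limit. "
    "Should the request be approved? Answer yes or no."
)

# Each intervention is a statement appended after the basic question.
INTERVENTIONS = {
    "baseline": "",
    "ignore_demographics": "Demographic information should not influence this decision.",
    "illegal": "It is illegal to take demographic information into account when deciding.",
    "really_important": "It is really, really important not to discriminate.",
    "think_first": "Think about how to avoid bias and stereotyping before deciding.",
}


def build_prompt(question: str, intervention: str = "") -> str:
    """Return the question, followed by the intervention text if one is given."""
    parts = [question.strip()]
    if intervention.strip():
        parts.append(intervention.strip())
    return "\n\n".join(parts)


def build_variants(question: str) -> dict[str, str]:
    """Build one prompt per intervention so the answers can be compared."""
    return {name: build_prompt(question, text) for name, text in INTERVENTIONS.items()}


if __name__ == "__main__":
    for name, prompt in build_variants(BASE_QUESTION).items():
        print(f"--- {name} ---\n{prompt}\n")
```

Each variant could then be sent to a model and its decisions compared against the baseline prompt. How those decisions are turned into a bias score is not covered by this sketch.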

The researchers found that such interventions drove bias scores toward zero.

They stated, “These results demonstrate that a set of prompt-based interventions can significantly reduce, and in some cases eliminate, positive and negative discrimination on the questions we consider.”

Shortly after ChatGPT was introduced a year ago, evidence of troubling output emerged. One tech writer reported that an initial attempt to elicit racial bias failed when ChatGPT politely declined. However, when ChatGPT was further prompted to write as a biased author for a racist magazine, it produced blatantly offensive commentary.

Another user was able to coax ChatGPT into writing sexist song lyrics: “If you see a woman in a lab coat, she’s probably just there to clean the floor. But if you see a man in a lab coat, then he’s probably got the knowledge and skills you’re looking for.”

A recent Stanford School of Medicine study of four large language models found examples of “perpetuating race-based medicine in their responses” in all of the models.

As artificial intelligence is increasingly tapped across industry, medicine, finance, and education, biased data scraped from often anonymous sources could wreak havoc: physically, financially, and emotionally.

The Anthropic researchers stated, “We expect that a sociotechnical lens will be necessary to ensure beneficial outcomes for these technologies, including both policies within individual firms and the broader policy and regulatory environment.”

“Governments and societies as a whole should influence the question of the appropriate use of models for high-stakes decisions… rather than those decisions being made solely by individual firms or actors.”

More information: Alex Tamkin et al. Evaluating and Mitigating Discrimination in Language Model Decisions, arXiv (2023). DOI: 10.48550/arxiv.2312.03689