The ability of neural networks, such as those in the human brain, to adapt and learn from their environment without explicit external supervision is called self-organized learning. This kind of learning is often linked to Hebbian plasticity, a concept proposed by Donald Hebb in 1949. According to Hebb’s idea, when two neurons are repeatedly activated at nearly the same time, the connection (synapse) between them is strengthened. The idea is often summarized as “cells that fire together, wire together.”
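
As a rough illustration of Hebb’s idea, the sketch below implements the simplest textbook form of the rule, Δw_i = η · x_i · y, where x_i is presynaptic activity, y is postsynaptic activity, and η is a learning rate. The function name `hebbian_update`, the learning rate, and the two-input setup are illustrative assumptions and are not part of the study described in this article.

```python
def hebbian_update(w, pre, post, lr=0.1):
    """Plain Hebbian step: each weight grows by lr * presynaptic * postsynaptic activity."""
    return [wi + lr * xi * post for wi, xi in zip(w, pre)]

# Hypothetical setup: two input neurons feeding one output neuron, equal starting weights.
w = [0.5, 0.5]
for _ in range(10):
    pre = [1.0, 0.0]  # input 0 is active, input 1 stays silent
    post = 1.0        # the output neuron fires together with input 0
    w = hebbian_update(w, pre, post)

# Only the connection from the co-active input has been strengthened.
print(w)
```

Because this plain rule can only increase weights for correlated activity, practical models usually add normalization or decay to keep weights bounded; Oja’s rule is one well-known example.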

Researchers report that the self-organization of neurons as they ‘learn’ follows a mathematical theory known as the free energy principle. The theory accurately predicted how real neural networks remodel themselves spontaneously during learning, and how changes in neuronal excitability can disrupt that process.

An international team of researchers from Japan’s RIKEN Center for Brain Science (CBS), the University of Tokyo, and University College London has shown that the self-organization of neurons as they “learn” follows a mathematical theory known as the free energy principle.

In particular, the theory predicted how real neural networks spontaneously reorganize to discriminate between incoming signals, and how altering neuronal excitability can disrupt this process. The findings therefore have implications both for developing animal-like artificial intelligence and for understanding cases of impaired learning. The study was published in Nature Communications.

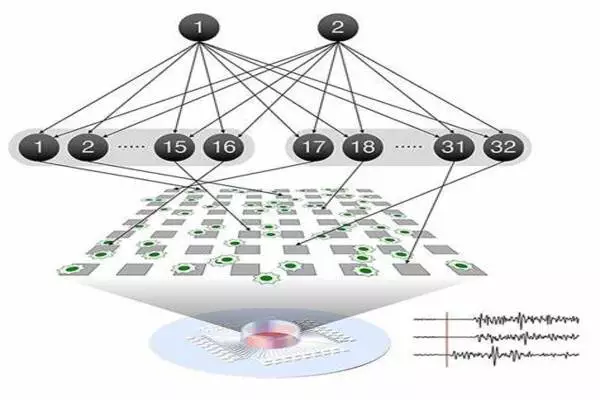

When we learn to discriminate between voices, faces, or odors, networks of neurons in our brains spontaneously organize themselves to distinguish among the many sources of incoming information. This process, which involves changing the strength of connections between neurons, is fundamental to learning in the brain. Takuya Isomura of RIKEN CBS and his international colleagues proposed that this form of network self-organization obeys the mathematical laws of the free energy principle. In the new study, they tested this idea using neurons taken from the brains of rat embryos and grown in a culture dish on top of a grid of small electrodes.

Once you can discriminate between two experiences, such as two voices, some of your neurons respond to one voice while others respond to the other. This selectivity is the result of learning, which rearranges neural networks. In their culture experiment, the researchers recreated this process by using the electrode grid beneath the network to stimulate the neurons in a precisely controlled pattern that mixed two independent hidden sources.
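
The stimulation scheme can be pictured with a small simulation. The sketch below assumes two independent binary hidden sources and a 32-electrode grid in which each electrode is driven more strongly by one source than by the other. The electrode count, the 0.75/0.25 mixing probabilities, and the function `make_stimulus` are hypothetical choices for illustration, not the study’s published protocol.

```python
import random

def make_stimulus(rng, n_electrodes=32, p_strong=0.75, p_weak=0.25):
    """Draw one stimulation pattern from two hidden binary sources (hypothetical mixing scheme)."""
    s1, s2 = rng.randint(0, 1), rng.randint(0, 1)  # independent hidden sources, each on or off
    half = n_electrodes // 2
    pattern = []
    for i in range(n_electrodes):
        # Electrodes in the first half lean toward source 1, the second half toward source 2.
        p1, p2 = (p_strong, p_weak) if i < half else (p_weak, p_strong)
        # Each active source independently has a chance to trigger this electrode.
        fired = (s1 == 1 and rng.random() < p1) or (s2 == 1 and rng.random() < p2)
        pattern.append(int(fired))
    return (s1, s2), pattern

rng = random.Random(0)  # fixed seed so the example is reproducible
stimuli = [make_stimulus(rng) for _ in range(100)]
sources, pattern = stimuli[0]
print(sources, pattern)
```

The point of the mixing is that no single electrode reveals either source on its own. A network that becomes selective for one source has, in effect, unmixed the signals, a task often described as blind source separation.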

After 100 training sessions, the neurons spontaneously became selective: some responded very strongly to source #1 and very weakly to source #2, while others showed the reverse pattern. When drugs that either raise or lower neuronal excitability were added to the culture beforehand, learning was disrupted. This indicates that the cultured neurons do just what neurons are thought to do in the working brain.
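
One simple way to quantify this kind of selectivity is a contrast index, (r1 − r2) / (r1 + r2), computed from a neuron’s mean responses r1 and r2 to the two sources. Both the index and the response values below are hypothetical illustrations, not the measure or data reported in the study.

```python
def selectivity(r1, r2):
    """Contrast index in [-1, 1]: +1 responds only to source 1, -1 only to source 2, 0 equally."""
    total = r1 + r2
    return 0.0 if total == 0 else (r1 - r2) / total

# Hypothetical mean responses (r1, r2) per neuron; illustrative numbers, not study data.
responses = {"neuron_a": (9.0, 1.0), "neuron_b": (1.0, 9.0), "neuron_c": (5.0, 5.0)}
indices = {name: selectivity(r1, r2) for name, (r1, r2) in responses.items()}
print(indices)
```

Before training, one would expect most indices to sit near 0. After training, values pushed toward +1 or −1 correspond to the “some neurons prefer source #1, others prefer source #2” pattern described above.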

According to the free energy principle, this form of self-organization always follows a pattern that minimizes the system’s free energy. To test whether this principle is the driving force behind learning in neural networks, the team reverse-engineered a predictive model from the recorded neuronal data. They fed data from the first 10 training sessions into the model and then used it to predict the next 90 sessions.
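
To give a concrete sense of what “minimizing free energy” means, the sketch below uses the standard variational free energy of a discrete model, F(q) = Σ_s q(s) [ln q(s) − ln p(o, s)], which equals KL(q || p(s|o)) − ln p(o). Because the KL term is never negative, F is an upper bound on surprise, −ln p(o), and it reaches that bound exactly when the belief q matches the true posterior. The two-state prior and likelihood values are arbitrary assumptions. This is the generic textbook quantity, not the specific model the team reverse-engineered.

```python
import math

def free_energy(q, prior, likelihood):
    """Variational free energy F = sum_s q(s) * (ln q(s) - ln p(o, s)) for one observation o."""
    return sum(
        qs * (math.log(qs) - math.log(ps * ls))
        for qs, ps, ls in zip(q, prior, likelihood)
        if qs > 0  # terms with q(s) = 0 contribute nothing
    )

prior = [0.5, 0.5]       # assumed p(s) for two hidden states
likelihood = [0.8, 0.2]  # assumed p(o | s) for the observation actually received
evidence = sum(p * l for p, l in zip(prior, likelihood))  # p(o)
posterior = [p * l / evidence for p, l in zip(prior, likelihood)]
surprise = -math.log(evidence)

# Scan candidate beliefs q = [x, 1 - x]; F is smallest at the exact posterior.
grid = [i / 100 for i in range(1, 100)]
best_x = min(grid, key=lambda x: free_energy([x, 1 - x], prior, likelihood))
print(best_x, posterior[0])
print(free_energy(posterior, prior, likelihood), surprise)
```

The grid search stands in for whatever update process a real system uses; the point is only that the belief with the lowest free energy coincides with the correct inference about the hidden state.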

The model correctly predicted both the neurons’ responses and the strength of the connections between them at each stage. This means that knowing only the initial state of the neurons is sufficient to predict how the network will evolve over time as learning proceeds.
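
The calibrate-then-forecast logic can be illustrated with a deliberately simple toy. The sketch below assumes a single connection strength that moves a fixed fraction `eta` toward a target each session. It fits `eta` on the first 10 of 100 simulated sessions and then predicts the remaining 90 from the same initial state. The learning curve, noise level, and grid search are all hypothetical stand-ins; the model described in the study is considerably richer.

```python
import random

def simulate(eta, w0=0.0, target=1.0, n=100):
    """Toy learning curve: each session moves connection strength w a fraction eta toward target."""
    w, traj = w0, []
    for _ in range(n):
        w += eta * (target - w)
        traj.append(w)
    return traj

# Hypothetical "recorded" data: true eta = 0.05 plus small measurement noise.
rng = random.Random(1)
observed = [w + rng.gauss(0, 0.01) for w in simulate(0.05)]

def fit_error(eta):
    """Squared error between the model and the first 10 observed sessions only."""
    return sum((p - o) ** 2 for p, o in zip(simulate(eta, n=10), observed[:10]))

# Calibrate eta on sessions 1-10 with a simple grid search.
candidates = [i / 1000 for i in range(1, 201)]
eta_hat = min(candidates, key=fit_error)

# Roll the calibrated model forward from the same initial state and score sessions 11-100.
predicted = simulate(eta_hat)[10:]
rmse = (sum((p - o) ** 2 for p, o in zip(predicted, observed[10:])) / len(predicted)) ** 0.5
print(eta_hat, rmse)
```

Keeping sessions 11 to 100 out of the fitting step is what makes the forecast a genuine test: the model sees only the early data and the shared starting state.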

“Our results suggest that the free-energy principle is the self-organizing principle of biological neural networks,” Isomura explains. “It predicted how learning occurred in response to specific sensory inputs and how it was disrupted by drug-induced changes in network excitability.”

“Although it will take some time,” Isomura says, “our technique will eventually allow modeling the circuit mechanisms of psychiatric disorders as well as the effects of drugs such as anxiolytics and psychedelics. Generic mechanisms for acquiring predictive models can also be used to develop next-generation artificial intelligence that learns in the same way that real neural networks do.”