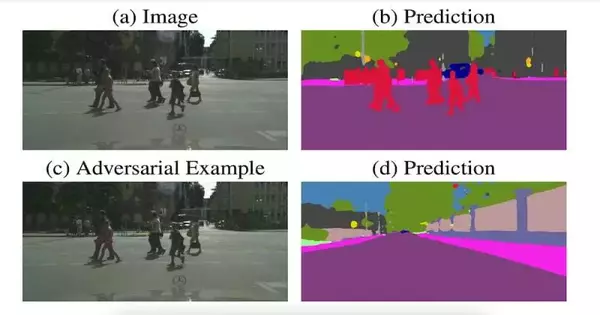

Artificial intelligence algorithms are rapidly becoming part of our daily lives. Machine learning already underpins, or soon will underpin, many systems that require strong security. Facial recognition, banking, military targeting applications, robots, and autonomous vehicles are just a few examples of these systems. This raises an important question: how secure are these AI algorithms against malicious attacks? My colleagues at the University of Melbourne and I discuss a potential solution to the vulnerability of machine learning models in an article recently published in Nature Machine Intelligence. According to our hypothesis, new algorithms with a high degree of resistance

Quantum Physics

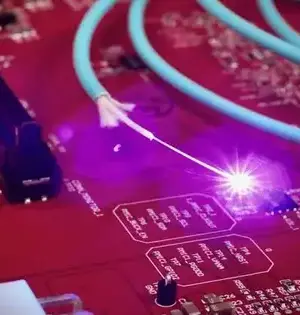

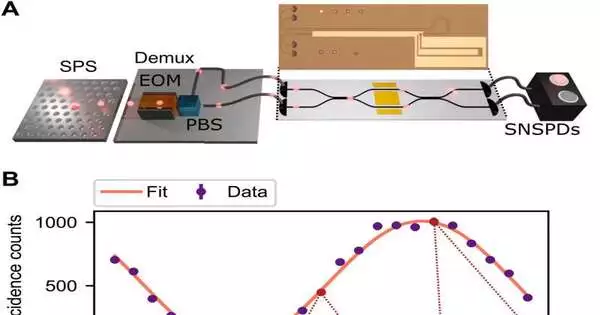

Scalable photonic quantum computing architectures require photonic processing devices. Such platforms depend on low-loss, high-speed, reconfigurable circuits and near-deterministic resource state generators. Patrik Sund and a group of researchers at the Center for Hybrid Quantum Networks at the University of Copenhagen and the University of Münster developed an integrated photonic platform based on thin-film lithium niobate and reported it in Science Advances. Using quantum dots in nanophotonic waveguides, the researchers integrated the platform with deterministic solid-state single-photon sources. They experimentally realized a variety of important photonic quantum information processing functionalities on high-speed circuits and processed the generated

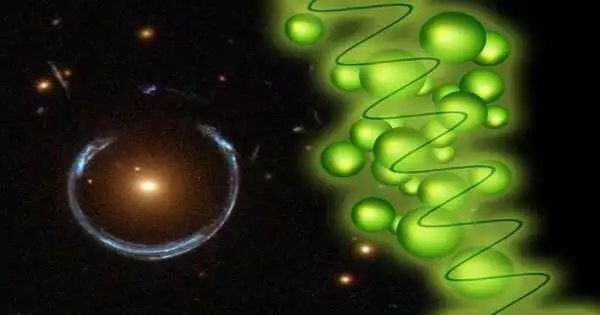

The theory of relativity works well when you want to explain cosmic-scale phenomena, such as the gravitational waves created when black holes collide. Particle-scale phenomena, like the behavior of individual electrons in an atom, are well described by quantum theory. However, the two have not yet been combined in a completely satisfactory way. The search for a "quantum theory of gravity" is regarded as one of the most important unsolved problems in science. This is partly because the mathematics in this field is highly complicated. At the same time, performing appropriate experiments

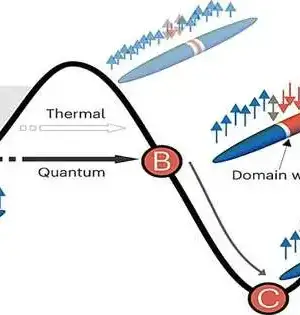

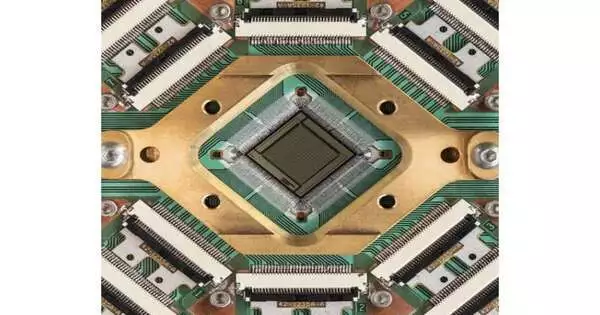

Researchers and businesses around the world have been working for decades to create increasingly sophisticated quantum computers. The primary goal of their efforts is to realize "quantum advantage," that is, to create systems that outperform classical computers on particular tasks. A research team at D-Wave Quantum Inc., a Canadian company that specializes in quantum computing, recently developed a new quantum computing system that outperforms classical computing systems on optimization problems. This system, presented in a paper in Nature, is based on a programmable spin glass with 5,000 qubits (the quantum equivalents of bits in classical computing). "This work

Entangled quantum circuits provide further evidence against Einstein’s concept of local causality.

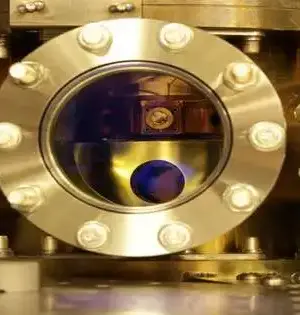

A group of researchers led by Andreas Wallraff, Professor of Solid State Physics at ETH Zurich, has carried out a loophole-free Bell test to disprove the concept of "local causality" that Albert Einstein formulated in response to quantum mechanics. By demonstrating that quantum mechanical objects that are far apart can be much more strongly correlated than is possible in classical systems, the researchers have provided additional support for quantum mechanics. The fact that the researchers were able to use superconducting circuits, which are thought to be promising candidates for building powerful quantum computers, for the very

The electron emission of metals can be precisely measured and controlled down to a few attoseconds by superimposing two laser fields of different frequencies and strengths, according to physicists from the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), the University of Rostock, and the University of Konstanz. The findings may provide new insights into quantum mechanics and could enable electronic circuits that are a million times faster than today's. The researchers recently published their findings in the scientific journal Nature. Electrons can be released from metal surfaces by light. Alexandre Edmond Becquerel made

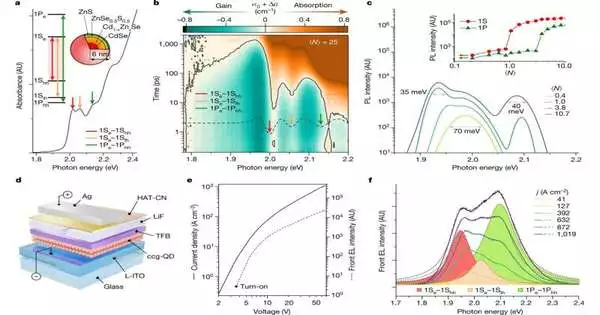

Los Alamos scientists have achieved light amplification with electrically driven devices based on solution-cast semiconductor nanocrystals, tiny specks of semiconductor matter that are made through chemical synthesis and are often called colloidal quantum dots. The result has been decades in the making. This demonstration, published in Nature, opens the door to a new class of electrically pumped laser diodes: highly flexible, solution-processable devices that can be prepared on any crystalline or non-crystalline substrate without the need for sophisticated vacuum-based growth techniques or a highly controlled clean room environment. Victor Klimov, Laboratory Fellow and project leader for

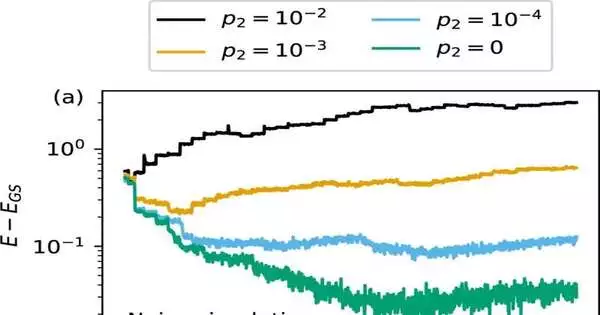

A group of scientists from the U.S. Department of Energy's Ames National Laboratory has demonstrated an adaptive algorithm for simulating materials, advancing the role of quantum computing in materials research. With an adaptive algorithm, quantum computers can produce solutions quickly and accurately, giving them potential capabilities far beyond those of current computers. Quantum computers work very differently from the computers we use today. They are made of quantum bits, or qubits, which are capable of encoding a lot more information than the bits in computers

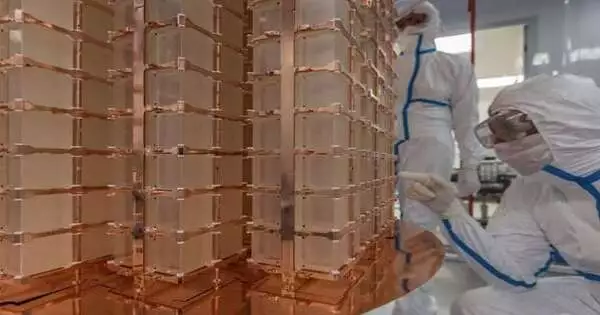

We do not yet know a great deal about neutrinos. Neutrinos are extremely light, chargeless, and elusive particles that are involved in a process called beta decay. Understanding this process could reveal the origin of matter in the universe. Beta decay is a type of radioactive decay in which a neutron converts into a proton, emitting an electron and an antineutrino. Beta decay is extremely common: it occurs approximately a dozen times per second in bananas, for example. There could also be an ultra-rare kind of beta decay that emits two electrons but no neutrinos. This neutrinoless double

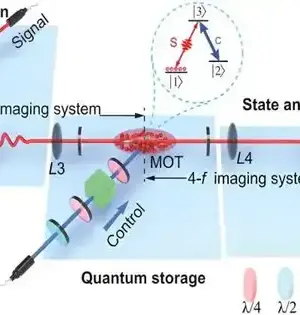

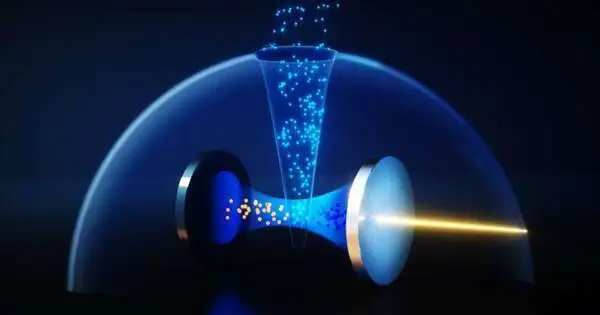

"Collectively induced transparency" (CIT) is a new phenomenon that causes groups of atoms to suddenly stop reflecting light at specific frequencies. CIT was discovered by blasting ytterbium atoms with a laser inside an optical cavity, which is essentially a small box for light. The laser light initially bounces off the atoms, but as the frequency of the light is changed, a transparency window appears in which the light simply passes through the cavity unimpeded. "We never knew this transparency window existed," says Caltech's Andrei Faraon (BS '04), William L. Valentine Professor of Applied Physics and Electrical Engineering, and