Consider how you use your hands when you’re in a restaurant using a variety of silverware and glassware, or at home at night pressing buttons on your TV remote control. All of these abilities rely on touch, whether you’re selecting an item from the menu or watching TV. Our hands and fingers are highly sensitive and highly skilled machines.

Robotics experts have long sought to give robot hands “true” dexterity, but the goal has been frustratingly elusive. Robot grippers and suction cups can pick and place goods, but more dexterous operations such as assembly, insertion, reorientation, and packaging have remained the domain of human hands.

The field of robotic manipulation is, however, evolving very quickly thanks to advances in both sensing technology and the machine-learning techniques used to process the sensed data.

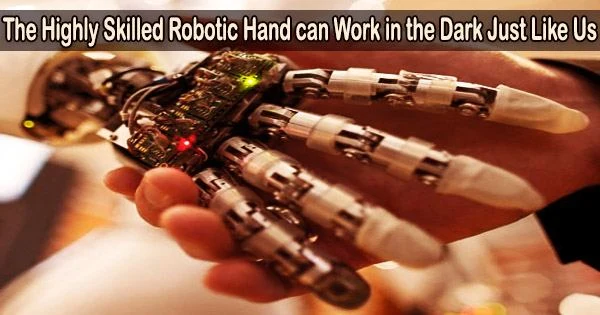

Highly dexterous robot hand even works in the dark

Columbia Engineering researchers have demonstrated a highly dexterous robot hand that combines a sophisticated sense of touch with motor learning algorithms to reach a high level of dexterity.

As a demonstration of skill, the team chose a difficult manipulation task: executing an arbitrarily large rotation of an unevenly shaped grasped object in hand while always maintaining the object in a stable, secure hold.

Because a portion of the fingers must constantly be repositioned while the remaining fingers must maintain the object’s stability, this is an extremely challenging process. Not only was the hand able to do this task, but it also did so entirely through touch sensing and without any visual cues at all.

Beyond demonstrating new levels of dexterity, the hand operated without any external cameras, so it was unaffected by lighting, occlusion, or similar problems. Because it does not rely on vision to manipulate objects, it can work in low light and even in complete darkness, conditions that would confuse vision-based algorithms.

“While our demonstration was on a proof-of-concept task, meant to illustrate the capabilities of the hand, we believe that this level of dexterity will open up entirely new applications for robotic manipulation in the real world,” said Matei Ciocarlie, associate professor in the Departments of Mechanical Engineering and Computer Science. “Some of the more immediate uses might be in logistics and material handling, helping ease up supply chain problems like the ones that have plagued our economy in recent years, and in advanced manufacturing and assembly in factories.”

Leveraging optics-based tactile fingers

In earlier work, Ciocarlie’s group collaborated with Ioannis Kymissis, professor of electrical engineering, to develop a new generation of optics-based tactile robot fingers. These were the first robot fingers to completely cover a complicated multi-curved surface and achieve contact localization with sub-millimeter accuracy.

Additionally, the fingers’ low wire count and small packaging made it simple to integrate them into whole robot hands.

Teaching the hand to perform complex tasks

For this new work, led by Ciocarlie’s doctoral researcher Gagan Khandate, the researchers designed and built a robot hand with five fingers and 15 independently actuated joints. Each finger was equipped with the team’s touch-sensing technology.
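To make the hardware description concrete, here is a minimal sketch of how readings from a hand like this could be packed into a single vision-free input vector. Only the finger and joint counts come from the article. The `FingerTouch` type, the contact flag, and the three-coordinate contact location are assumptions made for illustration, not the team’s actual sensor format.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

NUM_FINGERS = 5   # from the article
NUM_JOINTS = 15   # from the article: independently actuated joints


@dataclass
class FingerTouch:
    """Hypothetical per-finger tactile reading (format is an assumption)."""
    in_contact: bool
    contact_xyz: Tuple[float, float, float]  # location on the finger surface


def build_observation(joint_angles: Sequence[float],
                      touches: Sequence[FingerTouch]) -> List[float]:
    """Concatenate proprioceptive and tactile readings; no camera data."""
    if len(joint_angles) != NUM_JOINTS:
        raise ValueError("expected one angle per actuated joint")
    if len(touches) != NUM_FINGERS:
        raise ValueError("expected one tactile reading per finger")

    obs = [float(a) for a in joint_angles]      # proprioception
    for touch in touches:                       # touch
        obs.append(1.0 if touch.in_contact else 0.0)
        # Zero out the location when there is no contact to report.
        xyz = touch.contact_xyz if touch.in_contact else (0.0, 0.0, 0.0)
        obs.extend(float(v) for v in xyz)
    return obs
```

Under these assumptions the vector has 15 joint values plus 4 values per finger, 35 numbers in total.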

The next step was to test the tactile hand’s ability to carry out complex manipulation tasks. To do this, the researchers used new motor learning methods, which let robots acquire new physical skills through practice.

To explore possible motor strategies effectively, they used a technique called deep reinforcement learning, augmented with new algorithms they developed.

Robot completed approximately one year of practice in only hours of real time

The motor learning algorithms received no vision at all: their only input was tactile and proprioceptive data. Thanks to modern physics simulators and highly parallel processors, the robot completed roughly a year of practice in only a few hours of real time. The researchers then transferred the manipulation skill trained in simulation to the real robot hand, which achieved the level of dexterity the team was aiming for.
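The arithmetic behind “a year of practice in a few hours” is simple to sketch. The article gives no exact figures, so the three-hour wall-clock budget and the per-simulation speed below are assumed values, chosen only to show the scale of parallelism involved.

```python
import math

HOURS_PER_YEAR = 365 * 24  # 8760 hours of simulated practice


def parallel_envs_needed(practice_hours: float,
                         wall_clock_hours: float,
                         speedup_per_env: float = 1.0) -> int:
    """Number of simulations that must run side by side.

    speedup_per_env is how much faster than real time each simulated
    hand runs (1.0 means real-time speed). All inputs are assumptions.
    """
    if wall_clock_hours <= 0 or speedup_per_env <= 0:
        raise ValueError("time budget and speedup must be positive")
    return math.ceil(practice_hours / (wall_clock_hours * speedup_per_env))


# Assumed example: a 3-hour budget with each simulation at real-time speed.
envs = parallel_envs_needed(HOURS_PER_YEAR, wall_clock_hours=3)
print(envs)  # 2920
```

With those assumed numbers, a few thousand simulated hands practicing at once would cover a year of experience, which is why highly parallel hardware matters here.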

Ciocarlie noted that “the directional goal for the field remains assistive robotics in the home, the ultimate proving ground for real dexterity. In this study, we’ve shown that robot hands can also be highly dexterous based on touch sensing alone. Once we also add visual feedback into the mix along with touch, we hope to be able to achieve even more dexterity, and one day start approaching the replication of the human hand.”

Ultimate goal: joining abstract intelligence with embodied intelligence

Ultimately, Ciocarlie observed, a physical robot that is useful in the real world needs both abstract, semantic intelligence (to understand conceptually how the world works) and embodied intelligence (the skill to physically interact with the world).

Large language models such as OpenAI’s GPT-4 or Google’s PaLM aim to provide the former, while dexterity in manipulation as achieved in this study represents complementary advances in the latter.

For instance, ChatGPT will type up a step-by-step plan in response to a question on how to build a sandwich, but it requires a skillful robot to use that plan and actually make the meal.

In the same way, the researchers hope that physically skilled robots will be able to take semantic intelligence out of the purely virtual realm of the Internet and put it to use on physical tasks in the real world, perhaps even in our homes.

The paper has been accepted for publication at the upcoming Robotics: Science and Systems Conference (Daegu, Korea, July 10-14, 2023), and is currently available as a preprint.