Mechanical technology and independent vehicles are among the most rapidly developing spaces in the innovative scene, possibly making work and transportation more secure and productive. Since the two robots and self-driving vehicles need to see their environmental elements precisely, 3D item identification techniques are a functioning review region.

Most 3D article identification strategies utilize LiDAR sensors to establish 3D point boundaries for their current circumstances. Basically, LiDAR sensors use laser shafts to sweep and gauge the distances of articles and surfaces around the source quickly. In any case, utilizing LiDAR information alone can prompt blunders because of the great responsiveness of LiDAR to clamor, particularly in antagonistic weather patterns like during precipitation.

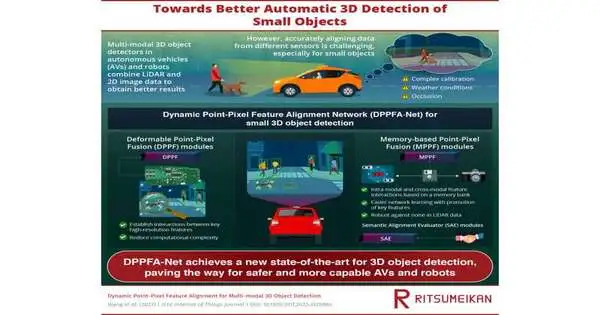

To handle this issue, researchers have created multi-modular 3D article recognition techniques that join 3D LiDAR information with 2D RGB pictures taken by standard cameras. While the combination of 2D pictures and 3D LiDAR information prompts more precise 3D identification results, it actually faces its own arrangement of difficulties, with the exact discovery of little articles staying troublesome.

“Our research could help robots better learn and adapt to their working environs, enabling for more precise sensing of microscopic targets.”

Professor Hiroyuki Tomiyama from Ritsumeikan University, Japan,

The issue fundamentally lies in satisfactorily adjusting the semantic data removed autonomously from the 2D and 3D datasets, which is hard because of issues like loose alignment or impediment.

In this setting, an examination group led by Teacher Hiroyuki Tomiyama from Ritsumeikan College, Japan, has fostered an imaginative way to deal with making multi-modular 3D item identification more precise and strong. The proposed plot, called “Dynamic Point-Pixel Element Arrangement Organization” (DPPFA−Net), is depicted in their paper distributed in the IEEE Web of Things Diary.

The model involves a plan of numerous occurrences of three novel modules: the Memory-based Point-Pixel Combination (MPPF) module, the Deformable Point-Pixel Combination (DPPF) module, and the Semantic Arrangement Evaluator (SAE) module.

The MPPF module is entrusted with performing express connections between intra-modular elements (2D with 2D and 3D with 3D) and cross-modular highlights (2D with 3D). The utilization of the 2D picture as a memory bank lessens the trouble in network learning and makes the framework stronger against commotion in D-point mists. Additionally, it advances the utilization of additional complete and discriminative highlights.

Conversely, the DPPF module performs cooperations just at pixels in key positions, which are resolved by means of a brilliant examining technique. This considers the combination of high goals with low computational intricacy. At last, the SAE module guarantees semantic arrangement between the two information portrayals during the combination cycle, which mitigates the issue of component equivocalness.

The specialists tried DPPFA-Net by contrasting it with the top entertainers for the generally utilized KITTI Vision Benchmark. Strikingly, the proposed network accomplished normal accuracy enhancements as high as 7.18% under various commotion conditions. To further test their model’s capacities, the group created a new uproarious dataset by presenting counterfeit multi-modular commotion as precipitation to the KITTI dataset.

The outcomes show that the proposed network performed better compared to existing models even with extreme impediments as well as under different degrees of antagonistic weather patterns. “Our broad trials on the KITTI dataset and testing multi-modular uproarious cases uncover that DPPFA-Net arrives at another cutting edge,” says Prof. Tomiyama.

Strikingly, there are different ways in which precise 3D item recognition techniques could work in our lives. Self-driving vehicles, which depend on such strategies, can possibly diminish mishaps and further develop traffic flow and wellbeing. Moreover, the ramifications in the field of mechanical technology ought not be put into words. “Our review could work with a superior comprehension and transformation of robots to their work spaces, permitting a more exact impression of little targets,” makes sense of Prof. Tomiyama.

“Such progressions will assist with working on the abilities of robots in different applications.” One more use for 3D item discovery networks is pre-marking crude information for profound learning discernment frameworks. This would fundamentally lessen the expense of manual comment, speeding up improvements in the field.

More information: Juncheng Wang et al, Dynamic Point-Pixel Feature Alignment for Multi-modal 3D Object Detection, IEEE Internet of Things Journal (2023). DOI: 10.1109/JIOT.2023.3329884