Ongoing advancements in the field of AI (machine learning) have extraordinarily changed the nature of programmed interpretation devices. As of now, these devices are fundamentally used to decipher essential sentences as well as short messages or informal reports.

Scholarly texts, like books or brief tales, are still completely deciphered by master human interpreters, who are knowledgeable about getting a handle on theoretical and complex implications and interpreting them in another dialect. While a few studies have looked into the capability of computational models for deciphering scholarly texts, progress in this area is still limited.

Scientists at UMass Amherst have as of late done a review investigating the nature of scholarly text interpretations delivered by machines by contrasting them with the same text interpretations made by people. Their findings, which were pre-distributed on arXiv, address some of the shortcomings of existing computational models for translating unfamiliar texts into English.

“Machine translation (MT) has the potential to supplement human translators’ labor by enhancing both training methods and overall efficiency. Because translators must balance semantic equivalence, readability, and critical interpretability in the target language, literary translation is less limited than more standard MT situations. This feature, combined with the extensive discourse-level background inherent in literary texts, makes literary MT more difficult to model and evaluate computationally.”

Katherine Thai and her colleagues

“Machine interpretation (MT) has the potential to supplement human interpretation by further developing both preparation methodology and general effectiveness,” Katherine Thai and her colleagues wrote in their paper.”Scholarly interpretation is less required than more conventional MT settings because interpreters must adjust meaning equality, comprehensibility, and basic interpretability in the target language.”This property, combined with the complex talk level setting present in artistic messages, makes abstract MT more difficult to computationally show and assess.

The vital goal of the new work by Thai and her partners was to more readily comprehend the manners in which cutting-edge MT devices actually bomb in the interpretation of artistic texts when contrasted with human interpretations. Their expectation was that this would assist with recognizing explicit regions that designers ought to zero in on to work on these models’ exhibition.

“We gather a dataset (PAR3) of non-English language books in the public space, each adjusted at the section level to both human and programmed English interpretations,” Thai and her partners made sense of in their paper.

PAR3, the new dataset ordered by the scientists for the extent of their review, contains 121,000 passages removed from 118 books initially written in various dialects other than English. For every one of these sections, the dataset incorporates a few different human interpretations as well as an interpretation created by Google Decipher.

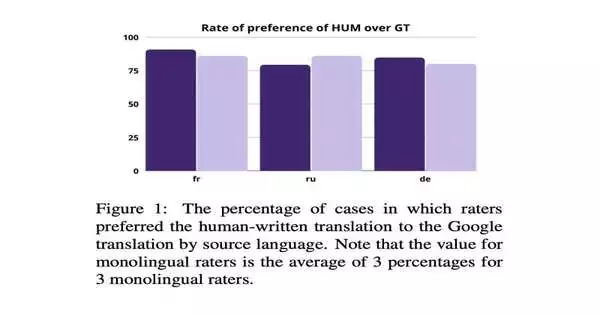

The specialists thought about the nature of human interpretations of these abstract passages compared to the ones delivered by Google Decipher, involving normal measurements for assessing MT devices. Simultaneously, they asked master human interpreters which interpretations they liked, while likewise inciting them to recognize issues with their least-favored interpretation.

“Utilizing PAR3, we find that master scholarly interpreters favor reference human interpretations over machine-deciphered passages at a pace of 84%, while the best-in-class programmed MT measurements don’t correspond with those inclinations,” Thai and her partners wrote in their paper. “The specialists note that MT yields contain mistranslations, yet in addition they talk about upsetting mistakes and complex irregularities.”

Basically, the discoveries accumulated by Thai and her partners propose that measurements to assess MT (e.g., BLEU, BLEURT, and BLONDE) probably won’t be especially viable, as human interpreters disagreed with their forecasts. Evidently, the input they assembled from human interpreters additionally permitted the scientists to recognize explicit issues with interpretations made by Google Decipher.

Involving the human specialists’ input as a rule, the group at last made a programmed post-altering model in view of GPT-3, a profound learning approach presented by an exploration bunch at OpenAI. They found that master human interpreters favored the scholarly interpretations delivered by this model at a pace of 69%.

Later on, the discoveries of this study could illuminate new examinations investigating the utilization of MT apparatuses to interpret artistic texts. Moreover, the PAR3 dataset assembled by Thai and her associates, which is presently openly accessible on GitHub, could be utilized by different groups to prepare or evaluate their language models.

“By and large, our work uncovers new difficulties to advance in scholarly MT, and we trust that the public arrival of PAR3 will urge analysts to handle them,” the specialists deduced in their paper.

More information: Katherine Thai et al, Exploring Document-Level Literary Machine Translation with Parallel Paragraphs from World Literature, arXiv (2022). DOI: 10.48550/arxiv.2210.14250

Journal information: arXiv