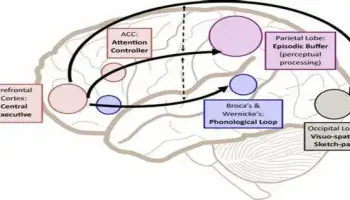

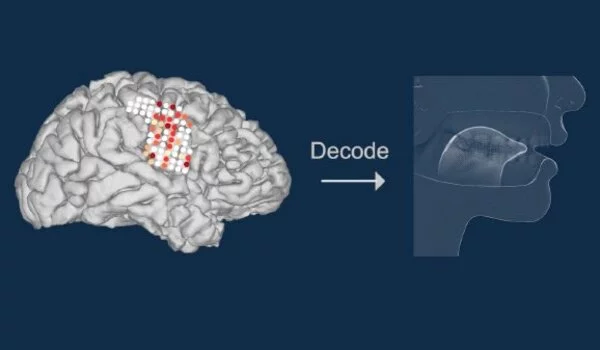

Brain-computer interfaces (BCIs), also called brain-machine interfaces (BMIs), are a cutting-edge field of research and technology. One of their most promising applications is converting brain signals into speech with the help of implants and AI. People with speech impairments, such as those who have lost the ability to speak because of paralysis or neurological disease, could benefit greatly from this technology.

Researchers at Radboud University and UMC Utrecht have succeeded in converting brain signals into audible speech. By decoding brain activity with a combination of implants and AI, they were able to predict the words people wanted to say with 92 to 100% accuracy. Their findings were published this month in the Journal of Neural Engineering.

According to lead author Julia Berezutskaya, a researcher at Radboud University’s Donders Institute for Brain, Cognition, and Behavior and at UMC Utrecht, the study represents a promising advance in the field of brain-computer interfaces. Berezutskaya and her colleagues at UMC Utrecht and Radboud University used brain implants in patients with epilepsy to infer what those patients were saying.

Bringing back voices

‘Ultimately, we intend to make this technology available to patients who are paralyzed and unable to communicate because they are in a locked-in state,’ adds Berezutskaya. ‘These people lose the ability to move their muscles and, as a result, to speak. By building a brain-computer interface, we can analyze their brain activity and give them a voice again.’

For the experiment in their new study, the researchers asked non-paralyzed people with temporary brain implants to say a number of words out loud while their brain activity was measured.

Berezutskaya: ‘We were then able to establish a direct mapping between brain activity on the one hand, and speech on the other hand. We also used advanced artificial intelligence models to translate that brain activity directly into audible speech. That means we weren’t just able to guess what people were saying, but we could immediately transform those words into intelligible, understandable sounds. In addition, the reconstructed speech even sounded like the original speaker in their tone of voice and manner of speaking.’

Researchers around the world are working out how to recognize words and sentences in patterns of brain activity. This team was able to reconstruct intelligible speech from relatively small datasets, demonstrating that their models can uncover the complex mapping between brain activity and speech even when only limited data is available.

They also ran listening tests with participants to assess how well the synthesized words could be told apart. The positive results of those tests indicate that the technology not only identifies words correctly but also renders them audibly and intelligibly, much like a human voice.

Limitations

‘For the time being, there are a number of limitations,’ says Berezutskaya. ‘In these experiments, we asked people to say twelve words aloud, and those were the words we tried to detect. In general, predicting individual words is easier than predicting entire sentences. In the future, the large language models used in AI research could help with this.

Our goal is to predict complete sentences and paragraphs of what people are trying to say based solely on their brain activity. To get there, we’ll need more experiments, more advanced implants, larger datasets, and more sophisticated AI models. All of this will take time, but we appear to be on the right track.’