In fact, machine learning models have been crucial in helping us understand how the brain perceives and interprets communication sounds. Researchers have gained insights into the intricate mechanisms underlying auditory perception by analyzing large datasets of neural activity and employing sophisticated algorithms.

To understand how the brain distinguishes between communication sounds regardless of accents and background noise, scientists studied how guinea pigs communicate. In a paper recently published in Communications Biology, auditory neuroscientists at the University of Pittsburgh describe a machine learning model that explains how the brain interprets communication sounds such as animal calls or spoken words.

The study’s algorithm simulates how social animals, like marmoset monkeys and guinea pigs, use sound-processing networks in their brains to distinguish between different sound categories, like calls for food, mating, or danger, and act on them.

The study makes it possible to understand the nuances and complexities of the neuronal processing that underlies sound recognition. Its findings pave the way for improving hearing aids and for understanding, and eventually treating, disorders that affect speech recognition.

“More or less everyone we know will lose some of their hearing at some point in their lives, either as a result of aging or exposure to noise. Understanding the biology of sound recognition and finding ways to improve it is important,” said senior author and Pitt assistant professor of neurobiology Srivatsun Sadagopan, Ph.D. “But the process of vocal communication is fascinating in and of itself. The ways our brains interact with one another and can take ideas and convey them through sound is nothing short of magical.”

The everyday sounds that humans and animals hear range from the cacophony of the jungle to the hum inside a busy restaurant. Despite the noise that surrounds them, animals and humans are able to communicate and understand one another, whatever the pitch of a voice or the accent of a speaker. If someone says “hello,” for instance, we know what they mean regardless of whether they have an American or British accent, whether they are a man or a woman, or whether they are speaking at a busy intersection or in a quiet room.

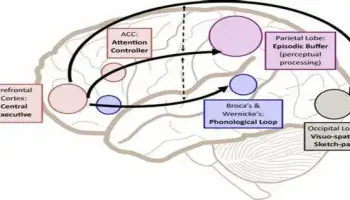

The team started with the intuition that the way the human brain recognizes and captures the meaning of communication sounds may be similar to how it recognizes faces compared with other objects. Faces are highly diverse but have some common characteristics.

Instead of matching every face that we encounter to some perfect “template” face, our brain picks up on useful features, such as the eyes, nose and mouth, and their relative positions, and creates a mental map of these small characteristics that define a face.

The team demonstrated in a number of studies that communication sounds might also be made up of such small characteristics. To distinguish between the various sounds made by social animals, the researchers first constructed a machine learning model of sound processing. To see whether brain responses matched the model, they then observed the brain activity of guinea pigs listening to their kin’s communication sounds. When the animals heard a noise containing features present in particular types of these sounds, neurons in sound-processing regions of the brain lit up with a flurry of electrical activity, mirroring the response of the machine learning model.
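The idea of recognizing a sound by a handful of small, characteristic features rather than by one whole-sound template can be illustrated with a toy sketch. The code below is not the study's model: the "sounds" are short made-up number sequences, and the feature templates, thresholds, and function names (`normalized_correlation`, `feature_present`, `categorize`) are all hypothetical choices made for illustration only.

```python
import math

def normalized_correlation(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [x - mean_b for x in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(x * x for x in db))
    if denom == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(da, db)) / denom

def feature_present(template, sound, threshold):
    """True if the template matches any window of the sound at or above the threshold."""
    width = len(template)
    best = max(
        (normalized_correlation(template, sound[i:i + width])
         for i in range(len(sound) - width + 1)),
        default=0.0,  # sound shorter than the template: nothing to match
    )
    return best >= threshold

def categorize(sound, features):
    """Return the category with the most detected features, or None if none fire."""
    votes = {}
    for category, template, threshold in features:
        if feature_present(template, sound, threshold):
            votes[category] = votes.get(category, 0) + 1
    return max(votes, key=votes.get) if votes else None

# Hypothetical features: a rising sweep marks a "squeak", a falling one a "grunt".
FEATURES = [
    ("squeak", [1, 2, 3, 4], 0.9),
    ("grunt", [4, 3, 2, 1], 0.9),
]

# Made-up test sounds: same shapes as the templates, but louder and offset.
rising_call = [5, 5, 6, 8, 10, 12, 12, 12]
falling_call = [9, 9, 7, 5, 3, 1, 1, 1]

print(categorize(rising_call, FEATURES))
print(categorize(falling_call, FEATURES))
```

Because the correlation is computed after subtracting the mean and dividing by the overall magnitude, a feature is still detected when the sound is louder or shifted in level. This is one simple way a feature-based detector can tolerate variation between callers, though a real model would work on much richer representations of sound.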

They then wanted to check the performance of the model against the real-life behavior of the animals.

Guinea pigs were placed in an enclosure and exposed to different categories of sounds: squeaks and grunts, which are categorized as distinct sound signals. Researchers then trained the guinea pigs to walk over to different corners of the enclosure and receive fruit rewards depending on which category of sound was played.

Then they made the task more difficult. Using sound-altering software, they sped up or slowed down the guinea pig calls, raised or lowered their pitch, added noise and echoes, or did all of the above, in order to mimic the way humans recognize the meaning of words spoken by people with different accents.
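Manipulations of this kind can be sketched in a few lines. The example below is only an illustration and does not reproduce the software or settings used in the study: the "call" is a synthetic sine tone standing in for a recording, and the function names, sample rate, and parameter values are assumptions. The speed change uses naive resampling, which shifts tempo and pitch together rather than independently.

```python
import math
import random

def make_tone(freq_hz, duration_s, sample_rate=8000):
    """Stand-in for a recorded call: a pure sine tone (assumption, not real data)."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def change_speed(signal, factor):
    """Resample by linear interpolation; factor > 1 shortens the sound.
    This naive method changes tempo and pitch together."""
    n_out = int(len(signal) / factor)
    last = len(signal) - 1
    out = []
    for i in range(n_out):
        pos = i * factor
        lo = min(int(pos), last)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append((1 - frac) * signal[lo] + frac * signal[hi])
    return out

def add_noise(signal, snr_db, rng):
    """Add Gaussian white noise at the requested signal-to-noise ratio in dB."""
    power = sum(x * x for x in signal) / len(signal)
    noise_std = math.sqrt(power / (10 ** (snr_db / 10)))
    return [x + rng.gauss(0.0, noise_std) for x in signal]

def add_echo(signal, delay_samples, gain):
    """Mix in one delayed, attenuated copy of the signal (a single echo)."""
    out = list(signal) + [0.0] * delay_samples
    for i, x in enumerate(signal):
        out[i + delay_samples] += gain * x
    return out

rng = random.Random(0)                 # fixed seed so the noise is repeatable
call = make_tone(440.0, 0.1)           # 0.1 s tone at 8 kHz
fast = change_speed(call, 2.0)         # twice as fast, half as long
slow = change_speed(call, 0.5)         # half as fast, twice as long
noisy = add_noise(call, 10.0, rng)     # roughly 10 dB signal-to-noise ratio
echoed = add_echo(call, 80, 0.5)       # echo after 10 ms at half amplitude

print(len(call), len(fast), len(slow), len(noisy), len(echoed))
```

Each function returns a new list and leaves the input untouched, so the alterations can be chained to produce a call that is, for example, both sped up and noisy.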

The animals continued to perform well despite artificial echoes or noise, and they were able to perform the task as consistently as if the calls they heard were unaltered. Even better, the computer model accurately predicted their actions as well as the underlying activation of sound-processing neurons in the brain.

As a next step, the researchers are working to translate the model's accuracy from animal calls to human speech.

From an engineering perspective, there are significantly better speech recognition models available, according to lead author Satyabrata Parida, Ph.D., a postdoctoral fellow in Pitt’s department of neurobiology. What makes this model distinctive is that it closely matches behavior and brain activity, which gives the researchers additional biological understanding. “In the future, these insights can be used to help people with neurodevelopmental conditions or to help engineer better hearing aids,” Parida said.