An AI calculation showed the capacity to handle information that surpasses a PC’s accessible memory by recognizing a huge informational collection’s vital elements and separating them into reasonable clusters that don’t gag PC equipment. Created at Los Alamos Public Research facility, the calculation set a worldwide best for factorizing enormous informational indexes during a trial on Oak Edge Public Lab’s Highest point, the world’s fifth-quickest supercomputer.

Similarly effective on workstations and supercomputers, the profoundly versatile calculation tackles equipment bottlenecks that keep handling data from information rich applications in malignant growth research, satellite symbolism, web-based entertainment organizations, public safety science and quake research, to give some examples.

“We fostered an ‘out-of-memory’ execution of the non-negative network factorization strategy that permits you to factorize bigger informational collections than beforehand conceivable on a given equipment,” said Ismael Boureima, a computational physicist at Los Alamos Public Research center. Boureima is first creator of the paper in The Diary of Supercomputing on the record-breaking calculation.

“The non-negative matrix factorization method can now be used to bigger data sets than was previously feasible on the available hardware thanks to a new out-of-memory implementation.”

Ismael Boureima, a computational physicist at Los Alamos National Laboratory.

“Our execution just separates the huge information into more modest units that can be handled with the accessible assets. Subsequently, it’s a valuable device for staying aware of dramatically developing informational collections.”

“Conventional information examination requests that information fit inside memory requirements. Our methodology challenges this idea,” said Manish Bhattarai, an AI researcher at Los Alamos and co-creator of the paper.

“We have presented an out-of-memory arrangement. At the point when the information volume surpasses the accessible memory, our calculation separates it into more modest sections. It processes these portions each in turn, cycling them all through the memory. This procedure furnishes us with the novel capacity to oversee and examine incredibly huge informational collections productively.”

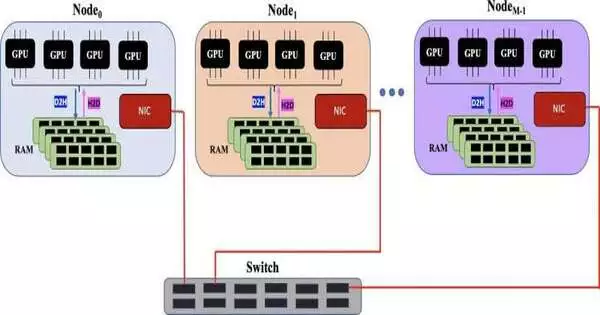

The circulated calculation for current and heterogeneous elite execution PC frameworks can be helpful on equipment as little as a personal computer, or as huge and complicated as Chicoma, Highest point or the impending Venado supercomputers, Boureima said.

“The inquiry is no longer whether it is feasible to factorize a bigger grid, rather how is the factorization going to require,” Boureima said.

The Los Alamos execution exploits equipment elements, for example, GPUs to speed up calculation and quick interconnect to move information between PCs effectively. Simultaneously, the calculation productively finishes numerous undertakings at the same time.

Non-negative grid factorization is one more portion of the elite presentation calculations created under the SmartTensors project at Los Alamos.

In AI, non-negative grid factorization can be utilized as a type of unaided figuring out how to pull significance from information, Boureima said. “That is vital for AI and information examination in light of the fact that the calculation can recognize logical dormant highlights in the information that have a specific importance to the client.”

The record-breaking run

In the record-breaking run by the Los Alamos group, the calculation handled a 340-terabyte thick grid and a 11-exabyte scanty framework, utilizing 25,000 GPUs.

“We’re arriving at exabyte factorization, which no other person has done, as far as anyone is concerned,” said Boian Alexandrov, a co-creator of the new paper and a hypothetical physicist at Los Alamos who drove the group that fostered the SmartTensors man-made reasoning stage.

Decaying or considering information is a specific information mining strategy pointed toward removing relevant data, improving on the information into justifiable configurations.

Bhattarai further underscored the versatility of their calculation, commenting, “Conversely, customary strategies frequently wrestle with bottlenecks, essentially because of the slack in information move between a PC’s processors and its memory.”

“We additionally showed you don’t be guaranteed to require enormous PCs,” Boureima said. “Scaling to 25,000 GPUs is perfect in the event that you can manage the cost of it, however our calculation will be helpful on PCs for something you were unable to process previously.”

More information: Ismael Boureima et al, Distributed out-of-memory NMF on CPU/GPU architectures, The Journal of Supercomputing (2023). DOI: 10.1007/s11227-023-05587-4