A researcher at the Graduate School of Engineering at Osaka University has proposed a numerical index for measuring the expressiveness of mechanical android faces. By focusing on the range over which the face can deform rather than on the number of mechanical actuators, the new method can more accurately gauge how well robots can reproduce real human emotions. The work, published in Advanced Robotics, may help in developing more expressive robots that can convey information quickly.

On your next trip to the shopping mall, you head to the information desk to ask for directions to a store. To your surprise, an android is staffing the desk. Although this may sound like science fiction, the scenario may not be far off. One obstacle, however, is the lack of a standard method for measuring the expressiveness of android faces. It would be especially useful if the same index could be applied equally to humans and androids.

“This technology is likely to contribute to the development of androids with expressive power comparable to that of humans,”

Hisashi Ishihara

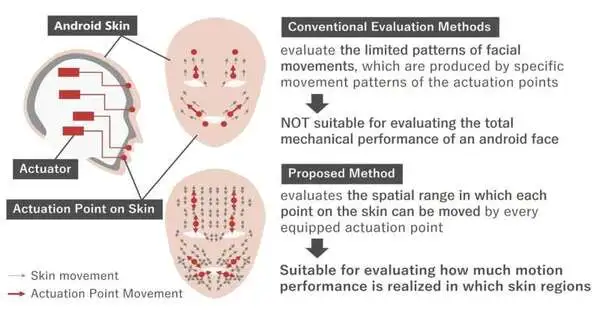

A new evaluation method was proposed at Osaka University to precisely measure the mechanical performance of android robot faces. Although facial expressions are important for conveying information during social interactions, the degree to which mechanical actuators can reproduce human emotions varies widely.

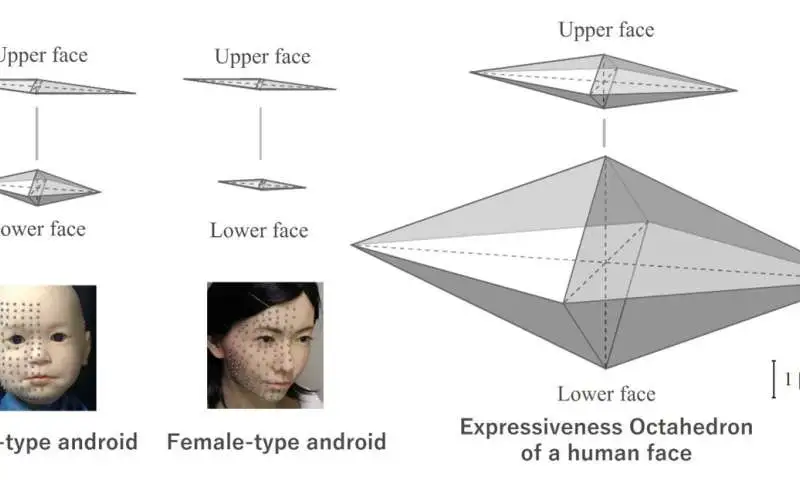

“The goal is to understand how an expressive android face compares with a human one,” author Hisashi Ishihara says. While previous evaluation methods focused on specific coordinated facial movements, the new method uses the spatial range over which each part of the skin can move as the numerical indicator of “expressiveness.” That is, rather than counting the facial patterns produced by the mechanical actuators that control the movements, or rating the quality of those patterns, the new index looks at the full spatial range of motion available to each point on the face.
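The article does not give the formula behind the index, so the following Python is only a minimal sketch of the general idea under assumptions made here: each facial point is a motion-capture marker with a list of recorded 3D positions, expressed in a head-fixed frame so that head motion is already removed, and its "spatial range" is approximated by the largest distance between any two of those positions. The function names, marker names, and toy coordinates are hypothetical, and the published index may define the range differently (for example, as a volume rather than a distance).

```python
import math
from itertools import combinations
from typing import Dict, Sequence, Tuple

# One recorded marker position (x, y, z) in a single motion-capture frame.
Position = Tuple[float, float, float]


def point_reach(positions: Sequence[Position]) -> float:
    """Largest distance between any two recorded positions of one facial point.

    Hypothetical proxy for the spatial range a skin point can cover; a point
    recorded in fewer than two frames gets a reach of 0.0.
    """
    return max(
        (math.dist(a, b) for a, b in combinations(positions, 2)),
        default=0.0,
    )


def reach_per_point(tracks: Dict[str, Sequence[Position]]) -> Dict[str, float]:
    """Map each (hypothetical) marker name to its reach across all frames."""
    return {name: point_reach(positions) for name, positions in tracks.items()}


# Toy data for illustration only, not measurements from the study.
tracks = {
    "mouth_corner_left": [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (1.0, 1.0, 0.0)],
    "brow_inner_right": [(0.0, 0.0, 0.0), (0.0, 2.0, 0.0)],
}
print(reach_per_point(tracks))  # {'mouth_corner_left': 5.0, 'brow_inner_right': 2.0}
```

The pairwise search is quadratic in the number of frames, which is acceptable for a sketch; a bounding-box or convex-hull measure would be a natural alternative if a volume-based range were preferred.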

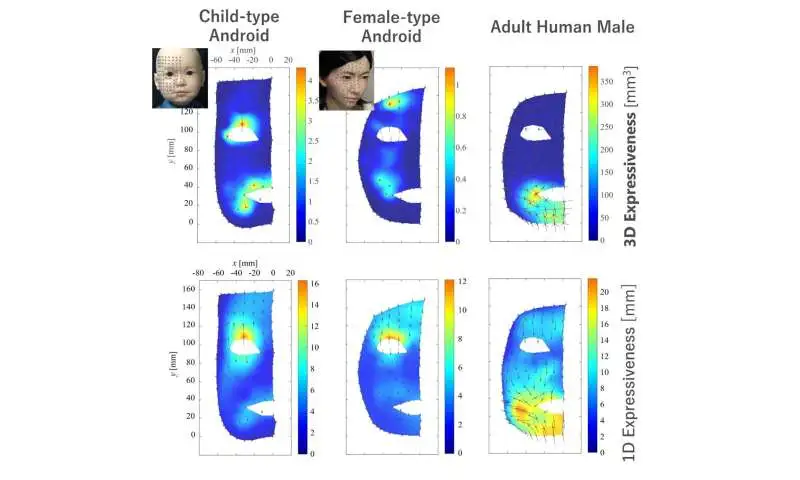

For this study, two androids, one representing a child and one representing an adult woman, were examined along with three adult humans. An optical motion-capture system was used to measure the displacements of approximately 100 facial points on each subject. The expressiveness of the androids was found to differ markedly from that of humans, especially in the lower part of the face. In fact, the potential range of motion of the androids was only about 20% of that of humans, even under the most lenient evaluation.
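The android-versus-human comparison can be illustrated in the same hedged way. Assuming each subject's per-point ranges have already been computed for a common set of markers, and that each marker has been assigned to a facial region (the region labels and the choice to sum ranges within a region are assumptions made here, not details from the study), an android-to-human ratio per region follows directly. The function name and all numbers below are hypothetical and serve only to exercise the code.

```python
from collections import defaultdict
from typing import Dict, Mapping


def region_ratios(
    android: Mapping[str, float],
    human: Mapping[str, float],
    region_of: Mapping[str, str],
) -> Dict[str, float]:
    """Android-to-human ratio of summed per-point range, grouped by facial region.

    `android` and `human` map marker names to range values; `region_of` maps
    the same marker names to a (hypothetical) region label such as "lower".
    Regions where the human total is zero are skipped to avoid dividing by zero.
    """
    android_total: Dict[str, float] = defaultdict(float)
    human_total: Dict[str, float] = defaultdict(float)
    for marker, region in region_of.items():
        android_total[region] += android[marker]
        human_total[region] += human[marker]
    return {
        region: android_total[region] / total
        for region, total in human_total.items()
        if total > 0
    }


# Made-up range values for illustration; they are not the study's data.
android = {"brow": 2.0, "lip_upper": 1.0, "jaw": 1.0}
human = {"brow": 4.0, "lip_upper": 4.0, "jaw": 4.0}
region_of = {"brow": "upper", "lip_upper": "lower", "jaw": "lower"}
print(region_ratios(android, human, region_of))  # {'upper': 0.5, 'lower': 0.25}
```

A ratio below 1 means the android's points cover less space than the human's in that region; grouping by region is one simple way to see where the gap is largest.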

“This method is expected to aid the development of androids with expressive power that rivals that of humans,” Ishihara says. Further work on this evaluation method may help android engineers create robots with greater expressiveness.

More information: Hisashi Ishihara, Objective evaluation of mechanical expressiveness in android and human faces, Advanced Robotics (2022). DOI: 10.1080/01691864.2022.2103389