The surgical team has reached an impasse as a patient lies on the operating table. They cannot find the intestinal rupture. “Check whether we missed a view of any intestinal section in the last 15 minutes of the visual feed,” a surgeon says aloud. An artificially intelligent medical assistant reviews the patient’s prior scans and highlights real-time video streams of the procedure. It alerts the team when a step in the procedure has been skipped and reads out pertinent medical literature when the surgeons encounter a rare anatomical phenomenon.

With the help of artificial intelligence, physicians from all specialties may soon be able to quickly review a patient’s entire medical record in the context of all online medical data and published medical literature. Only now, with the newest generation of AI models, is this potential versatility in the doctor’s office even conceivable.

“We see a paradigm shift coming in the field of medical AI,” said Jure Leskovec, a computer science professor at Stanford Engineering. “In the past, medical AI models could only take on very specific, limited aspects of the healthcare puzzle. In this high-stakes field, we are now entering a new era where larger puzzle pieces are much more important.”

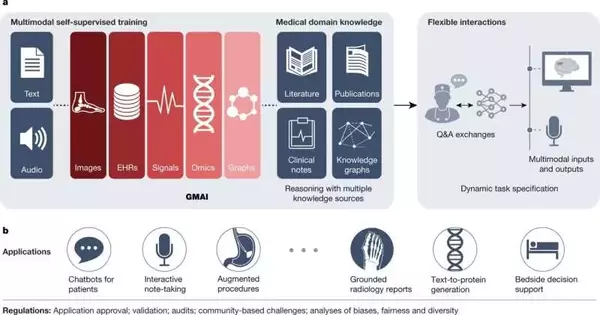

Generalist medical artificial intelligence, or GMAI, is a new class of medical AI models that are knowledgeable, adaptable, and reusable across numerous medical applications and data types, according to Stanford researchers and their collaborators. Their analysis of this development is presented in Nature’s April 12 issue.

Leskovec and his colleagues detail how GMAI will be able to interpret various combinations of data from imaging, electronic health records, lab results, genomics, and medical text much more effectively than competing models like ChatGPT. These GMAI models will give spoken explanations, offer recommendations, draw sketches, and annotate images.

According to co-first author Michael Moor, an MD, the hyper-specialization of human doctors and the slow, patchy flow of information are major causes of inefficiencies and mistakes in the modern medical system. “Because they would be more skilled across specialties rather than just being experts in one, generalist medical AI models could have a significant impact.”

No borders for medicine

Most of the more than 500 AI models for clinical medicine that the FDA has approved perform only one or two narrow tasks, such as scanning a chest X-ray for signs of pneumonia. However, recent developments in foundation model research offer the potential to address a wider range of difficult problems. According to Leskovec, “the exciting and ground-breaking part is that generalist medical AI models will be able to ingest different types of medical information, such as imaging studies, lab results, and genomics data, and then perform tasks that we instruct them to do on the fly.”

The way that medical AI functions is expected to change significantly, said Moor. “Next, we will have devices that, rather than performing just one task, can perform perhaps a thousand, some of which were not even foreseen during model development.”

The authors, who also include Harlan Krumholz from Yale, Oishi Banerjee and Pranav Rajpurkar from Harvard University, Zahra Shakeri Hossein Abad from the University of Toronto, and Eric Topol from the Scripps Research Translational Institute, describe how GMAI could handle a range of applications, from chatbots with patients to note-taking to bedside decision support for doctors.

The authors suggest that in the radiology department, models could draft radiology reports that visually highlight abnormalities while taking the patient’s medical history into account. Radiologists could also deepen their understanding of a case by talking with a GMAI model, for example by asking: “Can you highlight any new multiple sclerosis lesions that were not present in the previous image?”

In their paper, the researchers outline additional requirements and capabilities that GMAI needs in order to mature into a reliable technology. They emphasize that the model must take in all of a patient’s personal medical data as well as historical medical knowledge, and draw on it only when interacting with authorized users. It must then be able to converse with a patient, much like a triage nurse or doctor, in order to gather new evidence and data or suggest different treatment options.

Worries about potential growth

In their paper, the co-authors discuss the implications of a model with the capacity to learn more than 1,000 medical tasks. “Verification is, in our opinion, the main issue facing generalist models in medicine. How can we be sure that the model is accurate and not just concocting data?” asked Leskovec.

They point to flaws that have already been found in the ChatGPT language model. Likewise, an AI-generated image of the Pope in a designer puffy coat is merely funny. However, Moor noted, “verification becomes extremely crucial in high-stakes scenarios where the AI system makes life-or-death decisions.”

According to the authors, safeguarding privacy is also essential. This is a huge problem, according to Moor, because with models like ChatGPT and GPT-4 the online community has already found ways to circumvent the current security measures.

Another significant challenge for GMAI is telling genuine signals in the data apart from social biases, said Leskovec. GMAI models need to focus on signals that are causal for a specific disease and disregard spurious signals that merely tend to correlate with the outcome. Model size is expected to keep increasing, and Moor cites preliminary research indicating that larger models typically display more social biases than smaller ones. “It is the owners and developers of such models and the vendors’ responsibility to really make sure that those biases are identified and addressed early on, especially if they’re deploying them in hospitals,” said Moor.

Leskovec concurred, stating that although the current technology is very promising, there are still many gaps. “The question is, can we identify current missing pieces, like verification of facts, understanding of biases, and explainability or justification of answers, so that we can give an agenda for the community on how to make progress toward fully realizing the profound potential of GMAI?” he asked.

More information: Michael Moor et al, Foundation models for generalist medical artificial intelligence, Nature (2023). DOI: 10.1038/s41586-023-05881-4