For more than 30 years, the models that researchers and government agencies use to forecast earthquake aftershocks have remained largely unchanged. While these older models work well with limited data, they struggle with the huge seismology datasets that are now available.

To address this limitation, a team of researchers at the University of California, Santa Cruz, and the Technical University of Munich created a new model that uses deep learning to forecast aftershocks: the Recurrent Earthquake foreCAST (RECAST). In a paper published today in Geophysical Research Letters, the scientists show how the deep learning model is more flexible and scalable than the earthquake forecasting models currently in use.

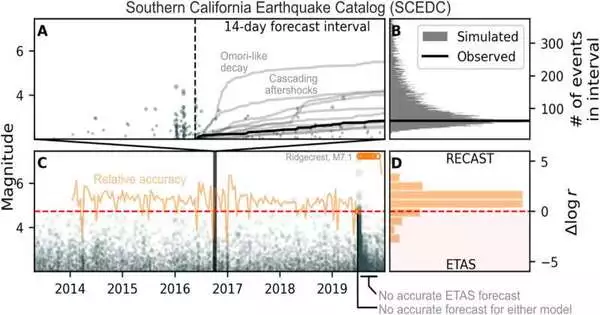

The new model outperformed the current model, known as the Epidemic Type Aftershock Sequence (ETAS) model, for earthquake catalogs of about 10,000 events or more.
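
For readers unfamiliar with ETAS, the temporal version of the model is commonly written as a conditional intensity, that is, the expected rate of earthquakes at time $t$ given everything that has happened before. The form below is the standard textbook one and is offered only as background; the symbols ($\mu$, $K$, $\alpha$, $c$, $p$, $M_0$) are conventional notation assumed here, and the exact parameterization used in the paper may differ.

$$
\lambda(t \mid \mathcal{H}_t) = \mu + \sum_{i:\, t_i < t} K \, e^{\alpha (m_i - M_0)} \, (t - t_i + c)^{-p}
$$

Here $\mu$ is the background rate, the sum runs over all earlier events with times $t_i$ and magnitudes $m_i$, the exponential term makes larger earthquakes trigger more aftershocks, and the power-law term (the Omori-Utsu law) makes the triggered rate decay with time since each event. $M_0$ is the smallest magnitude included in the catalog.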

“The ETAS model approach was designed for the observations that we had in the 80s and 90s, when we were trying to build reliable forecasts based on very few observations,” said Kelian Dascher-Cousineau, the lead author of the paper, who recently completed his Ph.D. at UC Santa Cruz. “It’s a very different landscape today.” Now, with more sensitive equipment and larger data storage capabilities, earthquake catalogs are much larger and more detailed.

“We’ve started to have million-earthquake catalogs, and the old model simply couldn’t handle that amount of data,” said Emily Brodsky, a professor of earth and planetary sciences at UC Santa Cruz and co-author of the paper. In fact, one of the main challenges of the study was not designing the new RECAST model itself but getting the older ETAS model to work on huge data sets.

“The ETAS model is kind of brittle, and it has a lot of very subtle and finicky ways in which it can fail,” said Dascher-Cousineau. “So, we spent a lot of time making sure we weren’t messing up our benchmark, compared to actual model development.”

To continue applying deep learning models to aftershock forecasting, Dascher-Cousineau says, the field needs a better system for benchmarking. To demonstrate the capabilities of the RECAST model, the group first used an ETAS model to simulate an earthquake catalog. After working with the synthetic data, the researchers tested the RECAST model using real data from the Southern California earthquake catalog.
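
To give a sense of what simulating a catalog with an ETAS model can look like, here is a minimal Python sketch of a time-only ETAS simulation written as a branching process. It is not the researchers' code: the function names (`simulate_etas`, `sample_poisson`) and every parameter value are hypothetical and chosen for illustration, and the sketch ignores location entirely. It assumes a constant background rate `mu`, Gutenberg-Richter magnitudes above a cutoff `m0`, an expected aftershock count of `k * exp(alpha * (m - m0))` per event, and Omori-Utsu delays with parameters `c` and `p`.

```python
import math
import random


def sample_poisson(rng, lam):
    """Count unit-rate Poisson arrivals in [0, lam); the count is Poisson(lam)."""
    count, t = 0, rng.expovariate(1.0)
    while t < lam:
        count += 1
        t += rng.expovariate(1.0)
    return count


def simulate_etas(t_end, mu=1.0, k=0.2, alpha=1.5, c=0.01, p=1.2,
                  b=1.0, m0=2.0, seed=0):
    """Simulate a temporal ETAS catalog on [0, t_end] as a branching process.

    Returns a list of (time, magnitude) tuples sorted by time.
    All default parameter values are illustrative, not taken from the paper.
    """
    rng = random.Random(seed)
    beta = b * math.log(10.0)  # Gutenberg-Richter decay in natural-log units

    def magnitude():
        # Magnitudes above the cutoff m0 are exponentially distributed.
        return m0 + rng.expovariate(beta)

    # Generation 0: background events from a homogeneous Poisson process.
    n_background = sample_poisson(rng, mu * t_end)
    queue = [(rng.uniform(0.0, t_end), magnitude()) for _ in range(n_background)]
    catalog = []

    while queue:
        t_parent, m_parent = queue.pop()
        catalog.append((t_parent, m_parent))
        # Expected number of direct aftershocks grows exponentially with magnitude.
        n_children = sample_poisson(rng, k * math.exp(alpha * (m_parent - m0)))
        for _ in range(n_children):
            # Inverse-CDF draw from the normalized Omori-Utsu delay density
            # f(s) = (p - 1) * c**(p - 1) * (s + c)**(-p), which requires p > 1.
            u = rng.random()
            delay = c * ((1.0 - u) ** (-1.0 / (p - 1.0)) - 1.0)
            t_child = t_parent + delay
            if t_child < t_end:
                queue.append((t_child, magnitude()))

    catalog.sort()
    return catalog
```

Calling `simulate_etas(1000.0)` returns a list of `(time, magnitude)` pairs that could stand in for a synthetic catalog. Because the parameters that generated the data are known, a forecasting model trained on such a catalog can be checked against the ground truth before it is applied to real data. With the default values the process is subcritical (each event triggers fewer than one aftershock on average), so the simulation terminates.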

They found that the RECAST model, which can essentially learn how to learn, performed slightly better than the ETAS model at forecasting aftershocks, particularly as the amount of data increased. The computational effort and time were also significantly better for larger catalogs.

This is not the first time scientists have tried using machine learning to forecast earthquakes, but until recently the technology was not quite ready, said Dascher-Cousineau. New advances in machine learning make the RECAST model more accurate and easily adaptable to different earthquake catalogs.

The model’s flexibility could open up new possibilities for earthquake forecasting. With the ability to adapt to large amounts of new data, models that use deep learning could potentially incorporate information from multiple regions at once to make better forecasts about poorly studied areas.

“We might be able to train on New Zealand, Japan, and California and have a model that’s actually quite good for forecasting somewhere where the data might not be as abundant,” said Dascher-Cousineau.

Using deep learning models will also eventually allow researchers to expand the types of data they use to forecast seismicity.

“We’re recording ground motion all the time,” said Brodsky. “So the next level is to actually use all of that information, not worry about whether we’re calling it an earthquake or not an earthquake, but to use everything.”

In the meantime, the researchers hope the model sparks discussions about the possibilities of the new technology.

“It has all of this potential associated with it,” said Dascher-Cousineau. “Because it is designed that way,

More information: Kelian Dascher‐Cousineau et al, Using Deep Learning for Flexible and Scalable Earthquake Forecasting, Geophysical Research Letters (2023). DOI: 10.1029/2023GL103909