As more people use their smartphones to view movies, edit videos, read the news, and keep up with social media, these devices have evolved to accommodate larger screens and more processing power for more demanding activities.

But bigger phones can be unwieldy. Operating them often requires a second hand or voice commands, which can be awkward and inconvenient.

In response, researchers at Carnegie Mellon University’s Human-Computer Interaction Institute (HCII) are developing a tool called EyeMU, which combines gaze control with simple hand motions to let users perform tasks on a smartphone.


“We wondered, ‘Is there a more natural way to interact with the phone?’ And looking at something is the first step in a lot of what we do,” said Karan Ahuja, a doctoral student in human-computer interaction.

Although gaze analysis and prediction aren’t new, achieving a satisfactory level of functionality on a smartphone would be a significant step forward.

“The eyes have what you would call the Midas touch problem,” explained Chris Harrison, an associate professor in the HCII and director of the Future Interfaces Group. “You can’t have a situation in which something happens on the phone everywhere you look. Too many applications would open.”

Software that tracks the eyes precisely can help solve this problem. Andy Kong, a computer science senior, has been fascinated by eye-tracking technology since his first days at CMU. He found commercial versions too expensive, so he wrote a program that used a laptop’s built-in camera to track the user’s eyes and move the cursor around the screen, a critical first step toward EyeMU.

“Current phones only respond when we ask them for things, whether through speech, taps, or button clicks,” Kong explained. “Given how widely the phone is used now, imagine how much more useful it would be if we could predict what the user wanted by analyzing their gaze or other biometrics.”

Streamlining the software so that it could run quickly on a smartphone was a challenge.

“That’s because of the phone’s limited resources. You have to make sure your algorithms are fast enough,” Ahuja said. “If it takes too long, your eye will move along.”

Kong, the paper’s lead author, presented the team’s findings alongside Ahuja, Harrison, and HCII Assistant Professor Mayank Goel at last year’s International Conference on Multimodal Interaction. Having peer-reviewed work accepted at a major conference was a significant accomplishment for Kong, an undergraduate researcher.

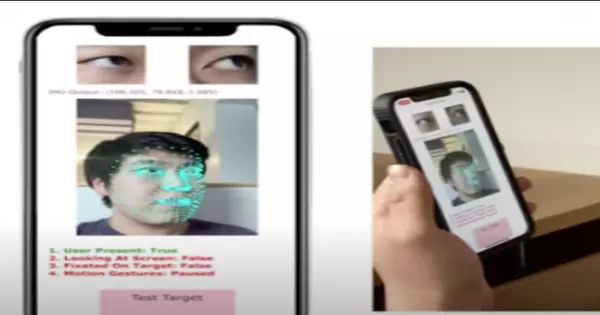

Kong and Ahuja improved on Kong’s early laptop prototype by using Google’s Face Mesh tool to study the gaze patterns of users looking at different areas of the screen and to render the mapping data. The team then built a gaze predictor that uses the smartphone’s front-facing camera to lock in on what the user is looking at and register it as the target.
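The article doesn’t describe how EyeMU’s gaze predictor is built. As a rough illustration only, the sketch below assumes the face tracker yields one normalized horizontal and one vertical eye-offset feature per frame. It fits a per-axis least-squares line from calibration samples (the user looking at known screen points) to screen coordinates, then checks which on-screen target contains the predicted point. The names `fit_line`, `GazeCalibration`, and `target_at`, along with the feature representation, are hypothetical and are not EyeMU’s or Face Mesh’s API.

```python
from __future__ import annotations
from dataclasses import dataclass


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two calibration samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("calibration features must vary")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


@dataclass
class GazeCalibration:
    """Hypothetical per-axis linear map from eye features to screen pixels."""
    x_fit: tuple[float, float]
    y_fit: tuple[float, float]

    @classmethod
    def from_samples(cls, samples):
        # samples: list of ((feature_h, feature_v), (screen_x, screen_y)) pairs
        # gathered while the user looks at known points on the screen.
        fh = [f[0] for f, _ in samples]
        fv = [f[1] for f, _ in samples]
        sx = [s[0] for _, s in samples]
        sy = [s[1] for _, s in samples]
        return cls(fit_line(fh, sx), fit_line(fv, sy))

    def predict(self, feature):
        """Map one frame's (feature_h, feature_v) to an (x, y) screen point."""
        (ax, bx), (ay, by) = self.x_fit, self.y_fit
        return ax * feature[0] + bx, ay * feature[1] + by


def target_at(point, targets):
    """Return the first target whose (left, top, width, height) rect contains point."""
    x, y = point
    for name, (left, top, width, height) in targets.items():
        if left <= x < left + width and top <= y < top + height:
            return name
    return None
```

For example, calibrating on the four corners of a 1080 × 2340 screen and then feeding a feature near the top edge would place the predicted point inside a notification banner spanning the top 300 pixels. A real system would likely use many more features and a learned model. The linear fit here only shows the calibrate-then-map idea.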

The team made the tool more useful by combining the gaze predictor with the smartphone’s built-in motion sensors to trigger commands. For example, a user could lock in a notification as a target by looking at it long enough, then flick the phone to the left to dismiss it or to the right to respond to it. Similarly, while holding a tall cappuccino in the other hand, a user could pull the phone closer to enlarge an image or move the phone aside to turn off gaze control.
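The interaction described above (look long enough to lock a target, then flick to act) can be pictured as a small state machine. The sketch below is a hypothetical illustration, not EyeMU’s implementation. It assumes gaze arrives as “which target is under the predicted gaze point” at a timestamp, and that a flick shows up as a gyroscope rotation rate about the phone’s vertical axis. The class name `DwellFlickSelector`, the 0.5 s dwell time, the 3.0 rad/s threshold, and the sign convention (negative means left) are all assumptions.

```python
from __future__ import annotations
from dataclasses import dataclass, field

DWELL_SECONDS = 0.5         # hypothetical: how long gaze must rest on a target
FLICK_RATE_THRESHOLD = 3.0  # hypothetical: rad/s about the phone's vertical axis


@dataclass
class DwellFlickSelector:
    dwell_seconds: float = DWELL_SECONDS
    flick_threshold: float = FLICK_RATE_THRESHOLD
    _candidate: str | None = field(default=None, init=False)
    _candidate_since: float = field(default=0.0, init=False)
    locked: str | None = field(default=None, init=False)

    def on_gaze(self, target: str | None, t: float) -> None:
        """Feed the target under the predicted gaze point at time t (seconds)."""
        if self.locked is not None:
            return  # a target is already locked; wait for a gesture
        if target != self._candidate:
            # Gaze moved to a new target (or away): restart the dwell timer.
            self._candidate, self._candidate_since = target, t
        elif target is not None and t - self._candidate_since >= self.dwell_seconds:
            self.locked = target  # looked long enough: lock it as the target

    def on_motion(self, yaw_rate: float) -> tuple[str, str] | None:
        """Feed one gyroscope sample; return (action, target) when a flick fires."""
        if self.locked is None or abs(yaw_rate) < self.flick_threshold:
            return None  # nothing locked, or motion too small to count as a flick
        # Assumed sign convention: negative rate = flick left = dismiss.
        action = "dismiss" if yaw_rate < 0 else "reply"
        target, self.locked = self.locked, None
        self._candidate = None
        return action, target
```

Because motion is ignored until a target is locked, ordinary glances and ordinary hand movement do nothing on their own. Only the combination of a deliberate dwell and a deliberate flick triggers an action, which is one way to sidestep the Midas touch problem.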

“Big tech companies like Google and Apple have gotten fairly close with gaze prediction,” Harrison said, “but simply looking at something alone won’t get you there. The key breakthrough in this project is adding a second modality, such as flicking the phone left or right, in combination with gaze prediction. That’s what makes it so effective. It seems so obvious in retrospect, but it’s a clever idea that makes EyeMU much more intuitive.”