A neural network is a type of machine-learning model used to help people with a wide range of tasks, from deciding whether a person’s credit score is high enough to qualify for a loan to determining whether a patient has a particular disease. However, researchers still have only a limited understanding of how these models work, and whether a given model is the best option for a particular task remains an open question.

MIT researchers have found some answers. They analyzed neural networks and proved that, when given a large amount of labeled training data, neural networks can be designed to be “optimal,” meaning they minimize the probability of misclassifying borrowers or patients. To be optimal, these networks must be built with a specific architecture.

The researchers found that, in certain situations, the building blocks that make a neural network optimal are not the ones practitioners use. These optimal building blocks, derived through the new analysis, are unconventional and had not been considered before, the researchers say.

“It turns out that if you apply the standard activation functions that people use in practice and keep increasing the network depth, you get pretty bad performance. We demonstrate that if you build with different activation functions, your network will keep improving as you collect more data.”

Lead author Adityanarayanan Radhakrishnan, an EECS graduate student.

In a paper recently published in the Proceedings of the National Academy of Sciences, they describe these optimal building blocks, known as activation functions, and show how they can be used to build neural networks that perform better on any dataset. The results hold even as the networks grow very large. Senior author Caroline Uhler, a professor in the Department of Electrical Engineering and Computer Science (EECS), says this work could help developers choose the right activation function, enabling them to build neural networks that classify data more accurately across a wide range of application areas.

“Although these are novel activation functions that have never been used before, they are simple functions that anyone could implement for a particular problem. This work clearly demonstrates the value of having theoretical justifications,” says Uhler, who is also co-director of the Eric and Wendy Schmidt Center at the Broad Institute of MIT and Harvard and a researcher at MIT’s Laboratory for Information and Decision Systems (LIDS) and Institute for Data, Systems, and Society (IDSS). Pursuing a principled understanding of these models, she says, “can actually lead you to new activation functions that you would otherwise never have thought of.”

Uhler is joined on the paper by lead author Adityanarayanan Radhakrishnan, an EECS graduate student and Eric and Wendy Schmidt Center Fellow, and Mikhail Belkin, a professor in the Halıcıoğlu Data Science Institute at the University of California, San Diego.

Investigating activation

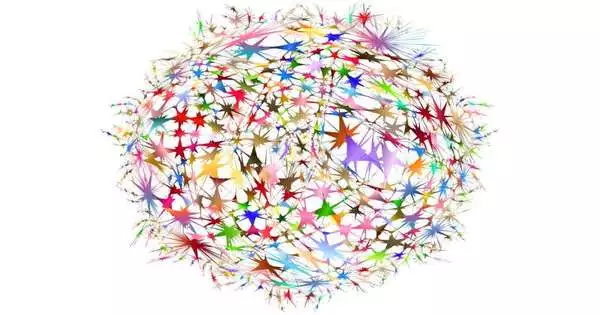

A neural network is a type of machine-learning model loosely based on the human brain. Many layers of interconnected nodes, or neurons, process data. Researchers train a network to perform a task by showing it millions of examples from a dataset.

For instance, a network trained to sort images into categories, such as dogs and cats, is given an image that has been encoded as numbers. The network performs a series of complex multiplication operations, layer by layer, until the result is a single number. If that number is positive, the network classifies the image as a dog; if it is negative, as a cat.
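To make the dog-versus-cat example concrete, here is a minimal sketch of that layer-by-layer computation. The weights, the three-number “image,” and the helper names (`layer`, `classify`, `HIDDEN`, `OUTPUT`) are hypothetical and chosen by hand for illustration rather than learned from data. The sketch also assumes a ReLU activation function between layers, a common choice in practice.

```python
def relu(z):
    # ReLU activation: passes positive values through and replaces negatives with 0.
    return max(0.0, z)


def layer(inputs, weights, biases, activation=None):
    # Each neuron multiplies every input by a weight, sums the products, adds a bias,
    # and (optionally) applies the activation function to the result.
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def classify(pixels, hidden_layers, output_layer):
    # Pass the encoded image through each hidden layer in turn.
    h = pixels
    for weights, biases in hidden_layers:
        h = layer(h, weights, biases, relu)
    # The last layer has a single neuron, so it produces a single number.
    (score,) = layer(h, *output_layer)
    # Positive score -> "dog", otherwise "cat" (an exact 0 falls to "cat" in this sketch).
    return ("dog" if score > 0 else "cat"), score


# Hypothetical hand-picked weights: one hidden layer of width 2, then one output neuron.
HIDDEN = [([[1.0, 0.0, 0.5], [-1.0, 1.0, 0.0]], [0.0, 0.5])]
OUTPUT = ([[2.0, -3.0]], [-1.0])

print(classify([0.5, -1.0, 2.0], HIDDEN, OUTPUT))
print(classify([-1.0, 1.0, 0.0], HIDDEN, OUTPUT))
```

With these weights the first input ends with a score of 2.0 (dog) and the second with -8.5 (cat). How a score of exactly zero is broken is an arbitrary choice made here.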

Activation functions help the network recognize complex patterns in the input data. They do this by applying a transformation to the output of one layer before the data are sent to the next layer. When researchers build a neural network, they select one activation function to use. They also choose the network’s depth (the number of layers) and width (the number of neurons in each layer).
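Why insert an activation function at all? A minimal sketch with hypothetical weights shows that two layers of pure multiplication collapse into a single multiplication by the product of their weight matrices, so adding depth without an activation adds no expressive power; placing a ReLU between the layers breaks that collapse. ReLU is used here only as a familiar example, not as one of the activation functions the paper proposes.

```python
def matvec(M, v):
    # Multiply matrix M (a list of rows) by vector v.
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    # Matrix product: (A @ B)[i][j] = sum over k of A[i][k] * B[k][j].
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


def relu_vec(v):
    # Apply the ReLU activation element-wise.
    return [max(0.0, x) for x in v]


# Hypothetical weights: a width-2 layer followed by a width-1 output layer.
W1 = [[1.0, -2.0], [3.0, 0.5]]
W2 = [[2.0, 1.0]]
x = [1.0, 1.0]

two_linear_layers = matvec(W2, matvec(W1, x))           # no activation between layers
one_merged_layer = matvec(matmul(W2, W1), x)            # one layer with weights W2 @ W1
with_activation = matvec(W2, relu_vec(matvec(W1, x)))   # ReLU between the layers

print(two_linear_layers, one_merged_layer, with_activation)
```

The two linear versions give the same result, [1.5], for every input, while the version with ReLU gives [3.5] here because the activation zeroes out the negative hidden value. The ReLU network is piecewise linear, so no single weight matrix reproduces it for all inputs.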

“It turns out that if you keep increasing the network’s depth while using the standard activation functions that people use in practice, performance gets progressively worse. We show that if you design with different activation functions, your network will get better and better as you gather more data,” says Radhakrishnan.

He and his team studied a scenario in which a neural network is infinitely deep and wide, meaning the network is built by continually adding more layers and more nodes, and is trained to perform classification tasks. In classification, the network learns to sort data inputs into separate categories.
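A common way to analyze networks in the infinitely wide limit is through a kernel, a closed-form similarity score between two inputs that is updated layer by layer. The sketch below is an illustration chosen here, not the paper’s derivation. It assumes unit-length inputs and a ReLU network with the standard variance-preserving weight scaling, for which the layer-to-layer update of the cosine similarity is the normalized degree-1 arc-cosine kernel. Iterating that update shows the similarity between two initially orthogonal inputs creeping toward 1 as depth grows. In this limit, a very deep ReLU network treats all inputs as nearly identical, which is one well-known way that simply adding depth with a standard activation function can hurt.

```python
import math


def relu_correlation_step(rho):
    # One layer of the infinite-width ReLU kernel map for unit-norm inputs:
    # given the cosine similarity rho at one layer, return it after the next ReLU layer.
    rho = max(-1.0, min(1.0, rho))  # guard against round-off just outside [-1, 1]
    theta = math.acos(rho)
    return (math.sin(theta) + (math.pi - theta) * math.cos(theta)) / math.pi


def correlation_after(depth, rho0):
    # Apply the per-layer update `depth` times, starting from similarity rho0.
    rho = rho0
    for _ in range(depth):
        rho = relu_correlation_step(rho)
    return rho


# Two inputs that start out orthogonal (similarity 0) become ever more alike with depth.
for depth in (1, 5, 20, 100):
    print(depth, round(correlation_after(depth, 0.0), 4))
```

The update always increases the similarity because, with theta in (0, pi], the gap sin(theta) - theta*cos(theta) is positive, and 1 is its only fixed point. Convergence near 1 is slow but steady.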

A clear picture

After a thorough analysis, the researchers found that there are only three ways this kind of network can learn to classify inputs. One method classifies inputs based on the majority label in the training data; for example, if there are more dogs than cats, it will decide that every new input is a dog. Another method classifies a new input by assigning it the label (dog or cat) of the training data point that most closely resembles it.
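The first two rules can be written in a few lines. This is a hypothetical sketch: made-up 2-D points stand in for encoded images, and Euclidean distance is an assumed measure of how closely two inputs resemble each other. The networks in the paper arrive at these rules implicitly, not through code like this.

```python
import math
from collections import Counter


def majority_vote(train_labels, new_point):
    # Ignores the new input entirely: always predicts the most common training label.
    return Counter(train_labels).most_common(1)[0][0]


def nearest_neighbor(train_points, train_labels, new_point):
    # Copies the label of the single training point closest to the new input.
    closest = min(
        range(len(train_points)),
        key=lambda i: math.dist(train_points[i], new_point),
    )
    return train_labels[closest]


# Hypothetical training set: three dogs clustered near the origin, one cat far away.
points = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (5.0, 5.0)]
labels = ["dog", "dog", "dog", "cat"]
query = (4.8, 5.1)

print(majority_vote(labels, query))             # the majority label, whatever the query
print(nearest_neighbor(points, labels, query))  # label of the closest training point
```

For a query right next to the lone cat, majority vote still answers “dog,” while nearest neighbor answers “cat.”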

The third method classifies a new input using a weighted average of all the training data points that are similar to it. Their analysis shows that this is the only one of the three methods that yields optimal performance. They identified a set of activation functions that always use this optimal classification method.
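The third rule can be sketched as a distance-weighted vote. The article does not say how the weights are computed, so the choice below is an assumption for illustration only. Each training point gets weight 1/distance**power, with a hypothetical `power` parameter, so its weight grows without bound as it approaches the new input. Labels are encoded as +1 for dog and -1 for cat, and the sign of the weighted average decides the class.

```python
import math


def weighted_average_classifier(train_points, train_labels, new_point, power=2.0):
    # Hypothetical weighting: w = 1 / distance**power, so nearby points dominate.
    weighted_sum = 0.0
    total_weight = 0.0
    for point, label in zip(train_points, train_labels):
        d = math.dist(point, new_point)
        if d == 0.0:
            # The weight would be infinite, so an exact match decides the label.
            return label
        w = 1.0 / d**power
        weighted_sum += w * (1.0 if label == "dog" else -1.0)
        total_weight += w
    score = weighted_sum / total_weight  # weighted average of the +1/-1 labels
    return "dog" if score > 0 else "cat"


# Hypothetical data: four dogs around the origin, one cat slightly closer to it.
points = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0), (0.9, 0.0)]
labels = ["dog", "dog", "dog", "dog", "cat"]

print(weighted_average_classifier(points, labels, (0.0, 0.0)))
```

At the origin, the closest single training point is the cat (distance 0.9 versus 1.0 for each dog), yet the four dogs together outweigh it. This rule therefore answers “dog” where a nearest-neighbor rule would answer “cat.”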

“One of the most unexpected findings was that, no matter which activation function you choose, it will always be one of these three classifiers. We have formulas that tell you explicitly which of these three it will be. It is a very clear picture,” he says.

They tested this theory on several classification benchmarking tasks and found that it led to improved performance in many cases. Neural network designers could use their formulas to select an activation function that yields better classification performance, Radhakrishnan says.

In the future, the researchers hope to apply what they have learned to settings with a finite amount of data and to networks that are not infinitely wide or deep. They also want to extend this analysis to situations where the data do not have labels.

“In order to deploy deep learning models successfully in mission-critical environments, it is important that they be theoretically grounded. This approach shows promise for building architectures in a theoretically sound way that leads to better results in practice,” he adds.

More information: Adityanarayanan Radhakrishnan et al, Wide and deep neural networks achieve consistency for classification, Proceedings of the National Academy of Sciences (2023). DOI: 10.1073/pnas.2208779120