Artificial intelligence and machine learning have made tremendous progress in the past few years, including the recent launch of ChatGPT and art generators. One thing that is still outstanding, however, is an energy-efficient way to generate and store long- and short-term memories at a form factor comparable to a human brain. A team of researchers in the McKelvey School of Engineering at Washington University in St. Louis has developed an energy-efficient way to consolidate long-term memories on a tiny chip.

Shantanu Chakrabartty, the Clifford W. Murphy Professor in the Preston M. Green Department of Electrical & Systems Engineering, and members of his lab developed a relatively simple device that mimics the dynamics of the brain’s synapses, the connections between neurons that allow signals to pass information. The artificial synapses used in many modern AI systems are relatively simple, whereas biological synapses can potentially store complex memories thanks to an exquisite interplay between different chemical pathways.

Chakrabartty’s group showed that their artificial synapse could also mimic some of these dynamics, which can allow AI systems to continuously learn new tasks without forgetting how to perform old ones. The results of the research were published Jan. 13 in Frontiers in Neuroscience.

“This artificial synapse device can solve or implement some of these continuous learning tasks where the device doesn’t forget what it has learned before. Now, it allows for long-term and short-term memory on the same device.”

Shantanu Chakrabartty

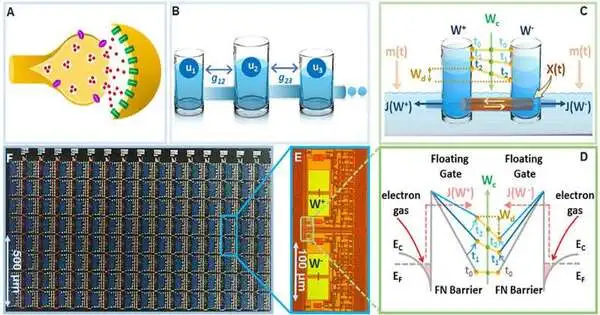

To do this, Chakrabartty’s team built a device that operates like two coupled reservoirs of electrons, where the electrons can flow between the two chambers across a junction, or artificial synapse. To create that junction, they used quantum tunneling, a phenomenon that allows an electron to magically pass through a barrier. Specifically, they used Fowler-Nordheim (FN) quantum tunneling, in which electrons jump through a triangular barrier and, in the process, change the shape of the barrier. FN tunneling provides a much simpler and more energy-efficient connection than existing methods, which are too complex for computer modeling.
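The coupled-reservoir picture can be illustrated with a toy simulation. This is a minimal sketch, not the researchers' circuit model: it assumes the junction current follows the textbook Fowler-Nordheim form, proportional to V² · exp(−b/|V|) for a potential difference V, and the constants `a` and `b`, the `capacitance`, the dimensionless units, and the function names are all hypothetical choices made for illustration.

```python
import math


def fn_current(v, a=1.0, b=5.0):
    """Fowler-Nordheim-style current for a potential difference v.

    Toy units; a and b are illustrative constants, not device parameters.
    """
    if v == 0.0:
        return 0.0
    magnitude = a * v * v * math.exp(-b / abs(v))
    # The current flows in the direction set by the sign of v.
    return math.copysign(magnitude, v)


def simulate(q_left, q_right, steps=1000, dt=0.01, capacitance=1.0):
    """Euler-integrate charge moving between two reservoirs via the junction.

    Charge only moves from one reservoir to the other, so the total
    is fixed in advance and never replenished.
    """
    history = [(q_left, q_right)]
    for _ in range(steps):
        v = (q_left - q_right) / capacitance
        dq = fn_current(v) * dt
        q_left -= dq
        q_right += dq
        history.append((q_left, q_right))
    return history


if __name__ == "__main__":
    trace = simulate(10.0, 2.0)
    for step in (0, 10, 100, 1000):
        left, right = trace[step]
        print(f"step {step:4d}: imbalance = {left - right:.4f}")
```

In this sketch the imbalance between the reservoirs shrinks quickly at first and then ever more slowly, because the exponential factor throttles the flow as the potential difference falls. That slow tail is the qualitative behavior that makes a tunneling junction attractive for holding a stored value over long periods.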

“The beauty of this is that we can control this device up to a single electron because we precisely designed this quantum mechanical barrier,” Chakrabartty said.

Chakrabartty and doctoral students Mustafizur Rahman and Subhankar Bose designed a prototype array of 128 of these hourglass devices on a chip less than a millimeter in size.

“Our work shows that the operation of the FN synapse is near-optimal in terms of the synaptic lifetime and specific consolidation properties,” Chakrabartty said. “This artificial synapse device can solve or implement some of these continuous learning tasks where the device doesn’t forget what it has learned before. Now, it allows for long-term and short-term memory on the same device.”

Chakrabartty said that because the device uses only a few electrons at a time, it uses very little energy overall.

“Most of these computers used for machine learning tasks shuttle a lot of electrons from the battery, store them on a capacitor, then dump them out and don’t recycle them,” Chakrabartty said. “In our model, we fix the total amount of electrons beforehand and don’t need to inject additional energy because the electrons flow out by the nature of the physics itself. By making sure that only a few electrons flow at a time, we can make this device work for long periods of time.”

The work is part of the research Chakrabartty and his lab members are doing to make AI more sustainable. The energy required for current AI computations is growing exponentially, with the next generation of models requiring close to 200 terajoules to train one system. And these systems are not even close to reaching the capacity of the human brain, which has close to 1,000 trillion synapses.
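For a sense of scale, the 200-terajoule figure can be converted into the units used on electricity bills. The short calculation below relies only on the standard definition of a kilowatt-hour (3.6 million joules); the function name is an illustrative choice.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 1,000 W sustained for 3,600 s


def terajoules_to_gwh(terajoules):
    """Convert an energy in terajoules to gigawatt-hours."""
    joules = terajoules * 1e12
    kwh = joules / JOULES_PER_KWH
    return kwh / 1e6  # 1 GWh = 1,000,000 kWh


print(f"{terajoules_to_gwh(200):.1f} GWh")  # prints "55.6 GWh"
```

Under that conversion, training one such system would take roughly 56 gigawatt-hours of energy.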

“Right now, we are not sure about training systems with even a fraction of a trillion parameters, and current approaches are not energy-sustainable,” he said. “If we stay on the trajectory that we are on, either something new has to happen to provide enough energy, or we have to figure out how to train these large models using these energy-efficient, dynamic-memory devices.”

More information: Mustafizur Rahman et al, On-device synaptic memory consolidation using Fowler-Nordheim quantum-tunneling, Frontiers in Neuroscience (2023). DOI: 10.3389/fnins.2022.1050585